Businesses today want to enhance the data stored in their relational databases and incorporate up-to-the-minute predictions from machine learning (ML) models. However, most ML processing is done offline in separate systems, resulting in delays in receiving ML inferences for use in applications. AWS wants to make it efficient to incorporate real-time model inferences in your applications without any ML training.

We use two AWS services in this post. Amazon Aurora is a relational database management system (RDBMS) built for the cloud with MySQL and PostgreSQL compatibility. Aurora gives you the performance and availability of commercial-grade databases at one-tenth the cost.

Amazon SageMaker Autopilot is an automated machine learning (AutoML) solution that performs all the tasks you need to complete an end-to-end ML workflow. It explores and prepares your data, applies different algorithms to generate a model, and transparently provides model insights and explainability reports to help you interpret the results. Autopilot can also create a real-time endpoint for online inference. We can access Autopilot’s one-click features in Amazon SageMaker Studio or by using APIs, SDKs, or a command line interface.

Amazon Aurora machine learning (Aurora ML) is a feature of Aurora that enables you to add ML-based predictions to applications via the familiar SQL programming language without prior ML experience. It provides simple, optimized, and secure integration between Aurora and AWS ML services without having to build custom integrations or move data around. Aurora ML provides a faster and easier way to enable ML services to work with data in your Aurora database. Because Aurora makes direct calls to Amazon SageMaker, Aurora ML is suitable for low-latency, real-time use cases such as fraud detection, ad targeting, and product recommendations, where ML-based predictions need to be made quickly on large amounts of data.

In Part 1 of this series, we show you how to build a customer churn ML model with Autopilot to generate accurate ML predictions on your own, without requiring any ML experience. We then show you how to invoke a SageMaker endpoint to predict customer churn using familiar SQL statements from an Aurora cluster. These predictions can then be accessed with SQL just like any other data stored in Aurora. In Part 2, we discuss how to implement performance optimizations to get inferences on real-time data.

Overview of solution

In this post, we assume the role of a data analyst with SQL expertise working for a wireless provider. We’ve been tasked with identifying customers that are potentially at risk of leaving the service for a different provider (customer churn). We have access to aggregated and anonymized service usage and other customer behavior data stored in Aurora. We want to know if this data can help explain why a customer would leave. If we can identify factors that explain churn, then wireless providers can take corrective actions to change predicted behavior, such as running targeted retention campaigns.

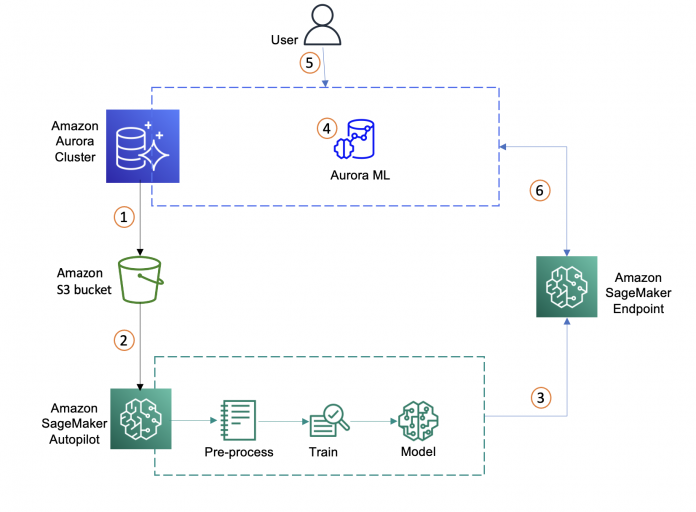

We start by identifying data in the Aurora database, exporting it to Amazon Simple Storage Service (Amazon S3), and using the data to set up an Autopilot experiment in SageMaker that automatically processes the data, trains the customer churn model, and deploys the model to an endpoint. We then create a function in Aurora to call the endpoint and generate predictions in real time from the Aurora database. The diagram above illustrates this workflow, which consists of the following steps:

1. Identify a dataset on the Aurora cluster and move data to S3 bucket
2. Create an ML model using SageMaker Autopilot with the imported dataset
3. Select the best ML model and deploy the SageMaker endpoint
4. Integrate Aurora with SageMaker
5. Create a SQL function using the SageMaker endpoint
6. Invoke the SageMaker endpoint through SQL query

Identify a dataset on the Aurora cluster

If you have an existing dataset in the Aurora cluster that you want to use as the basis for your model, consider connecting to Amazon SageMaker Data Wrangler for expert curation and feature engineering, and then train a model with Autopilot to get accurate inferences. To use an existing dataset from your Aurora cluster, skip to the section "Move Aurora data to the S3 bucket".

If you want to use our test dataset, which has already been preprocessed, the following steps show how to load it into an Amazon Aurora MySQL-Compatible Edition cluster and then make it available for SageMaker to create an ML model. To access the AWS resources, we can create an AWS Cloud9 instance or set up the AWS Command Line Interface (AWS CLI). For more information, see Setting up AWS Cloud9 and Set Up the AWS Command Line Interface (AWS CLI), respectively. For this post, we use an AWS Cloud9 instance. For pricing, refer to AWS Cloud9 Pricing.

Standard pricing applies to all the AWS resources used. For the Aurora database, you are charged for I/O, compute, and storage. For full pricing, refer to Amazon Aurora Pricing. You also pay for storing objects in your S3 buckets and for the underlying compute and storage resources used by SageMaker Autopilot and the deployed endpoint. For full pricing, refer to Amazon SageMaker Pricing.

Our Aurora cluster must first be set up to use LOAD DATA FROM S3. We then run the following SQL queries to create the mltest database and a table with customer features (columns) to use for predicting customer churn.

Connect to the Aurora MySQL cluster. The following is a sample command:

Create a test database:
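
As a minimal sketch of this step, the statement could look like the following. The database name mltest comes from the text above; the IF NOT EXISTS guard and the USE statement are additions for convenience, not necessarily what the original post used.

```sql
-- Create the test database named in this post (mltest).
-- IF NOT EXISTS is an added guard so the statement can be re-run safely.
CREATE DATABASE IF NOT EXISTS mltest;

-- Make mltest the default database for the statements that follow.
USE mltest;
```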

Create a sample table to load data:
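
The exact table definition is not shown here, so the following is only an illustrative sketch. The table name churn and every column name and type are assumptions modeled on a typical wireless customer churn dataset; adjust them to match the file you actually load.

```sql
-- Hypothetical schema: table name, column names, and types are assumptions
-- for illustration and must match the columns of your source file.
CREATE TABLE IF NOT EXISTS mltest.churn (
    state              VARCHAR(64),  -- customer's state
    acc_length         INT,          -- days the account has been active
    area_code          INT,
    int_plan           VARCHAR(8),   -- international plan: yes/no
    vmail_plan         VARCHAR(8),   -- voicemail plan: yes/no
    vmail_msg          INT,          -- number of voicemail messages
    day_mins           DOUBLE,
    day_calls          INT,
    eve_mins           DOUBLE,
    eve_calls          INT,
    night_mins         DOUBLE,
    night_calls        INT,
    int_mins           DOUBLE,       -- international minutes
    int_calls          INT,
    cust_service_calls INT,
    churn              VARCHAR(16)   -- label: whether the customer left
);
```

Keeping the label column (churn here) in the same table as the features makes it straightforward to export everything Autopilot needs in a single query later.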

Run LOAD DATA FROM S3 to load the data from the SageMaker sample S3 bucket to the Aurora MySQL cluster:
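
A sketch of the load statement follows. The S3 URI is a placeholder rather than the real location of the sample file, the target table mltest.churn is a hypothetical name, and the IGNORE 1 LINES clause assumes the file starts with a header row; drop it if yours does not.

```sql
-- Placeholder URI: replace <bucket-name> and <path-to-churn-file> with the
-- actual location of the CSV file. mltest.churn is a hypothetical table name.
LOAD DATA FROM S3 's3://<bucket-name>/<path-to-churn-file>'
INTO TABLE mltest.churn
FIELDS TERMINATED BY ','
LINES TERMINATED BY '\n'
IGNORE 1 LINES;   -- assumes the first line is a header row

-- Quick sanity check that rows arrived.
SELECT COUNT(*) FROM mltest.churn;
```

The statement only succeeds if the cluster has an IAM role that allows reading from the bucket and the database user has been granted permission to load from S3.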

Move Aurora data to the S3 bucket

At this point, whether you are using our test data or your own data, we have a dataset in Aurora that we want to use to create an ML model. First, we must export the data to Amazon S3 so that SageMaker can access it. To do this, we can use the SELECT INTO OUTFILE S3 statement to query data from an Aurora MySQL DB cluster and save it directly into text files stored in an S3 bucket.

Before we can save data into an S3 bucket, we must first give our Aurora MySQL DB cluster permission to access Amazon S3. After setting the permissions, we run the following commands to load data to Amazon S3:
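
The export could be sketched as follows. The bucket name and prefix are placeholders, and mltest.churn is a hypothetical table name; substitute your own. FORMAT CSV HEADER is used on the assumption that the downstream Autopilot experiment expects a CSV file with column names in the first row.

```sql
-- Placeholder URI: replace <bucket-name> and <prefix> with your own values.
-- mltest.churn is a hypothetical table name used for illustration.
SELECT *
FROM mltest.churn
INTO OUTFILE S3 's3://<bucket-name>/<prefix>/churn'
FORMAT CSV HEADER            -- write column names as the first row
FIELDS TERMINATED BY ','
LINES TERMINATED BY '\n'
OVERWRITE ON;                -- replace files left by an earlier export
```

Aurora writes the result as one or more files under the given prefix with a .part_ suffix, so use the generated object name when pointing SageMaker at the data.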
