AWS Database Migration Service (AWS DMS) is used for multiple reasons, including migrating from on premises to AWS, migrating from Amazon Elastic Compute Cloud (Amazon EC2) to Amazon Relational Database Service (Amazon RDS), continuous replication from Amazon RDS to Amazon RDS, and continuous replication from Amazon RDS to Amazon Simple Storage Service (Amazon S3).

When using AWS DMS to migrate databases with high transactions across different tables, you have to split AWS DMS replication into multiple tasks, each including different tables based on transaction load to reduce replication latency. When doing so, you must disable foreign keys for the tables involved, because one task can replicate transactions faster than another. In this situation, the target database can never be assumed in a consistent state in terms of transactions if the source database is running and always changing. Staging a source downtime through stopping application writes on the database can achieve consistency on the target, but it’s always challenging and sometimes the process needs to be repeated multiple times for different copy requirements, which isn’t desirable.

In this post, we describe a method to achieve transaction consistency on the target database.

For our use case, we migrate an Oracle database on Amazon EC2 to Amazon Aurora PostgreSQL-Compatible Serverless.

Use case overview

The following example explains what happens if we stop AWS DMS tasks that are processing data at different times.

Our user schema has two tables:

PARENT_TABLE

CHILD_TABLE

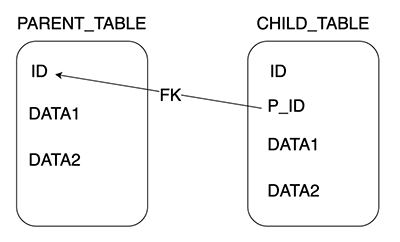

In the following diagram, the P_ID column in CHILD_TABLE is referencing PARENT_TABLE for the ID column. If a foreign key is enabled, the row should be inserted into PARENT_TABLE before it can exist in CHILD_TABLE.

For this example, we assume our Aurora PostgreSQL Serverless database and user already exist. Also, the full load was completed using an AWS DMS full load task, and we created two change data capture (CDC) only tasks. Foreign keys are disabled on the target because we’re splitting replication into multiple tasks.

The following are our CDC-only tasks:

dms-cdc-parent-table – This task replicates PARENT_TABLE only

dms-cdc-child-table – This task replicates CHILD_TABLE only

The following diagram illustrates this architecture.

The following screenshot shows our migration tasks on the AWS DMS console.

In this scenario, if the CHILD_TABLE task is running slower even by a few seconds (or milliseconds) compared to the PARENT_TABLE (high load on child_table compared to parent_table), the task can fail to replicate if foreign keys are enabled on the target. That is why in such scenarios, foreign keys must be disabled on the target.

For more information, refer to How can I troubleshoot an AWS DMS task that failed with a foreign key constraint violation error?

As part of any migration strategy, if we need to test the target database for functionality or performance, we can do so in the following ways:

Stop AWS DMS tasks and perform the test on the target database itself

Create a clone from the target database and use that clone to test

To achieve either option, the target database should be consistent to make sure that all the foreign keys are getting enabled. Simply stopping the AWS DMS tasks doesn’t guarantee consistency. However, AWS DMS allows you to specify a stop condition for your tasks based on a specific commit time, which guarantees consistency. To learn more about this parameter, refer to Creating tasks for ongoing replication using AWS DMS.

Solution overview

To achieve consistency on our target database, we use two options provided by AWS DMS: server stop time and commit stop time.

Server stop time is the time on the replication instance. For example, if you provide 19:30 as the server stop time, AWS DMS makes sure that task stops when the server time on the replication instance is 19:30.

You can use server stop time in cases where you need to stop AWS DMS at an exact server time (replication instance time). For example, if a job is starting a data load at 19:30 and you want AWS DMS to stop before the data load happens, you can schedule AWS DMS to stop at 19:29 server time.

Commit stop time is the time of the transaction commit on the source server. For example, if you provide 19:30 as the commit stop time, AWS DMS makes sure that the task stops after it reads through all the transactions that were committed on or before 19:30.

You can use the commit stop time to stop AWS DMS after it finishes reading transactions that were committed at a specific time. This helps to make sure AWS DMS reads transactions committed until the time provided. For example, if AWS DMS is lagging by 10 minutes and you provide 19:30 as the commit stop time, AWS DMS makes sure to read all the transactions that committed until 19:30 and then stops, which means AWS DMS might stop around 19:40, which is a 10-minute lag, but it made sure that it read transactions committed until 19:30.

In the following sections, we walk through how to specify commit stop time via either the AWS DMS console or the AWS Command Line Interface (AWS CLI).

Prerequisites

For this walk-through, you should have following setup already in place:

Source database (for this example, we’re using Oracle on Amazon EC2)

Target database (for this example, we’re using Aurora PostgreSQL Serverless)

AWS CLI and AWS DMS

AWS DMS CDC replication in place with multiple tasks configured

Specify commit stop time using the AWS DMS console

To implement the solution via the AWS DMS console, complete the following steps:

On the AWS DMS console, choose Database migration tasks in the navigation pane.

Open your migration task.

On the Actions menu, choose Modify.

Select Specify commit stop time.

Enter your desired stop time.

Start the migration task.

The task automatically stops when it reaches the transaction point corresponding to 19:30:00 of the specified date. This can be hours after the actual stop time, depending on the lag.

In the following screenshot, the task was modified to stop at commit stop time of 15:30:00, but it stopped at 15:30:15 instead. There was a delay of 15 seconds when the task processed the source transaction, which happened at 15:30:00.

As shown in the following screenshot, both tasks were modified to stop at 15:30:00. One stopped at 15:30:41, whereas the other stopped at 15:31:54. Although both stopped at different times, they stopped at the same commit timestamp of the source database, making the target database consistent as of 15:30:00.

Specify commit stop time with the AWS CLI

You can use the AWS CLI to achieve the same configuration. This can be useful if you’re using automation using cron or other AWS services to stop the task every day with the timestamp setting.

Use the following code :

For example:

When you start the task, you see the same notification for CdcStopPosition:

After both AWS DMS tasks are stopped, we can check the count of parent_table and child_table on the target Aurora PostgreSQL database. It should be the same because we achieved transaction consistency. At this point, we can enable the foreign key constraints without any issues.

Another way to verify that both tasks stopped at the same source checkpoint time is to check the recovery checkpoint of both tasks. If you see the following output for both AWS DMS tasks, the recovery checkpoint is the same for both, which proves that both stopped reading at the same transaction time as the source:

Clean up

The services involved in this solution incur costs. To avoid additional costs, clean up the example resources you created while running this solution. For instructions, refer to Deleting a replication instance and Deleting a DB instance.

Summary

In this post, we provided step-by-step instructions to stop multiple AWS DMS tasks such that the target database can be consistent with the source database at the specified server time or commit time.

We encourage you to try this solution and take advantage of creating a consistent clone from your target database (or perform directly on the target database) to run functional or performance tests before go-live. Take a moment to leave your comments on this post and reach out if you have any questions or feedback.

About the authors

Jeemy Patel is a Database Consultant with the Professional Services team at Amazon Web Services. Jeemy helps customer with migration to AWS, performance optimization as well as provide technical guidance on various Disaster Recovery solutions for Amazon customers

Prashanth Ramaswamy is a Senior Database Consultant with the Professional Services team at Amazon Web Services. Prashanth focuses on leading the database migration efforts to AWS as well as provide technical guidance including cost optimization, monitoring and modernization expertise to Amazon customers.

Saumya Mula is a Senior Database Consultant with the Professional Services team at Amazon Web Services. She provides overall guidance on database migrations from on-premises to AWS along with automation , cost management and performance tuning of the critical production systems for Amazon customers.

Read MoreAWS Database Blog