When you decide to run applications across multiple geographic regions, there are many questions you need to answer. First, what are the business drivers? Is it resiliency? It might turn out that your application’s resiliency requirements, such as availability and disaster recovery, can be satisfied within a single AWS Region. If you do need a multi-Region solution, you need to consider questions such as how to:

Keep your critical data synchronized and consistent across those Regions.

Maintain scalability, reliability, and performance of your applications as data volume and user traffic increase.

Operate without degradation even under failure scenarios.

Manage your solution with minimal operational overhead.

Implementing a multi-Region strategy can be a daunting task, and some of the biggest challenges have to do with data. In this post, we start from the beginning with how to approach building your multi-Region strategy: identifying drivers, with emphasis on resiliency as an integral part of the strategy. We then describe approaches to building resilient, multi-Region applications on Amazon Web Services (AWS) using AWS Regions. Finally, we describe how you can use Amazon DynamoDB and its proven multi-Region data replication capabilities to help solve these challenges. We show an incremental approach to evolving your data architecture from a single Region to multiple Regions.

Whether you’re new to DynamoDB or already running a DynamoDB-backed application, this series of posts will provide you with a guide to think big, start small, and scale at your own pace as your business needs grow beyond a single geography and AWS Region.

Business (and other) drivers for a multi-Region deployment

Before you embark on your multi-Region journey, you must carefully consider the business drivers to run your applications and databases across multiple Regions. Here are the reasons we often hear from AWS customers:

Resiliency (high availability and disaster recovery) – Natural disasters, hardware failures, software bugs, and human errors can cause lapses in business operations, which can negatively impact your business. You need a disaster recovery solution in place for your business to continue to operate under failure conditions. This solution will protect your business from lost sales opportunities, legal implications, negative impact to brand, and compromised customer trust. You also might need a higher availability than the 99.99% (four nines) that can be achieved within a single Region. If you need 99.999% (five nines) availability, you must use at least two Regions.

Geographic expansion – If your organization has decided to expand to new geographic regions, countries, or even continents, your applications need to run closer to where your users are to provide faster response times and a great customer experience.

AWS customers often have resiliency as the primary driver and then evolve to geographic expansion. Even if your primary driver is geographic expansion, you should still consider resiliency.

As a best practice, your multi-Region strategy should always include a resiliency strategy. More broadly, resiliency should be a fundamental consideration, much like security, in building all applications that are important to your business.

For a comprehensive review of the topic of resiliency as you build your applications on AWS, refer to Reliability Pillar – AWS Well-Architected Framework and Disaster Recovery of Workloads on AWS: Recovery in the Cloud.

In addition to your business drivers, you must consider compliance requirements that might affect your ability to replicate data to other Regions. For example, security concerns and concerns about government requests for data have contributed to a focus on keeping data within a geopolitical or legal entity, such as a country or a broader entity. One example is the European Union’s General Data Protection Regulation (GDPR) for the protection and privacy of personal data in the European Union.

After you’ve determined your business drivers, you can work with both business and IT teams to identify and analyze the applications that are critical to your business. For example, you might decide that your customer-facing applications are more important than a post-trade market analysis or chatbots. In some cases, you might also need to make some trade-off decisions within an application. For example, your e-commerce system must prioritize the ability to process purchase transactions over processing clickstreams.

The key is to start with the most important business functions, review all the steps that provide value to your users, and identify each function’s dependency tree. The dependency tree comprises all applications, services, and databases that are necessary to perform that function. You then need to consider the business impact of failure in any of the steps. For each step, you need to define availability objectives and disaster recovery objectives: a recovery time objective (RTO) for system downtime and a recovery point objective (RPO) for data loss. These objectives are defined based on the criticality and potential impact of each failure. The applications are grouped in tiers based on the potential impact on the business if there is a disruption. Each tier has a set of defined RPOs, RTOs, and availability metrics, beginning with Tier 1 applications as the most critical. For example, an organization might classify their applications as follows:

Tier 1 – 2 hours RTO, 30 seconds RPO, 99.999% availability

Tier 2 – 8 hours RTO, 4 hours RPO, 99.9% availability

Tier 3 – 24 hours RTO, 24 hours RPO, 98% availability

Tier 4 – 48 hours RTO, 48 hours RPO, 95% availability

In this example, Tier 1 applications are those considered vital to the operations of this organization, such as order processing or customer relationship management. Disruption of some Tier 1 applications might also impact business partners and beyond.
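To make these availability targets more concrete, it can help to translate each percentage into a yearly downtime budget. The following is a minimal sketch of that arithmetic, assuming a 365-day year. The `TIERS` dictionary and the `allowed_downtime_minutes` function are illustrative names, not part of any AWS tool.

```python
# Availability targets copied from the example tiers above.
TIERS = {
    "Tier 1": 99.999,
    "Tier 2": 99.9,
    "Tier 3": 98.0,
    "Tier 4": 95.0,
}

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; assumes a non-leap year


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime budget, in minutes, for an availability target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be a percentage between 0 and 100")
    return MINUTES_PER_YEAR * (100 - availability_percent) / 100


if __name__ == "__main__":
    for tier, availability in TIERS.items():
        minutes = allowed_downtime_minutes(availability)
        print(f"{tier}: {availability}% -> {minutes:,.1f} minutes per year")
```

Under these assumptions, a Tier 1 application has a budget of a little over 5 minutes of downtime per year, while a Tier 2 application has close to 9 hours.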

There are several techniques you can use to analyze and prioritize your applications and services. One technique you might find useful is called failure mode and effects analysis (FMEA). FMEA is an International Organization for Standardization (ISO) engineering technique used to capture and prioritize risks based on severity, probability, and detectability, where each is rated on a scale of 1 to 10. You multiply these three dimensions to estimate a risk priority number (RPN) from 1 to 1,000. An extremely low-probability, low-severity, easy-to-detect risk has an RPN of 1. An extremely frequent, permanently damaging, impossible-to-detect risk has an RPN of 1,000. For an example, review Appendix F of Building Mission-Critical Financial Services Applications on AWS.
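The RPN calculation described above is simple enough to sketch in a few lines. The following example is illustrative only. The `Risk` class, the `prioritize` function, and the sample risks are hypothetical and are not taken from the referenced appendix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    severity: int       # 1 (low severity) to 10 (permanently damaging)
    probability: int    # 1 (extremely unlikely) to 10 (extremely frequent)
    detectability: int  # 1 (easy to detect) to 10 (impossible to detect)

    def __post_init__(self):
        # Each dimension is rated on a 1-10 scale.
        for attr in ("severity", "probability", "detectability"):
            value = getattr(self, attr)
            if not 1 <= value <= 10:
                raise ValueError(f"{attr} must be between 1 and 10, got {value}")

    @property
    def rpn(self) -> int:
        """Risk priority number: the product of the three ratings (1 to 1,000)."""
        return self.severity * self.probability * self.detectability


def prioritize(risks):
    """Return the risks ordered from highest to lowest RPN."""
    return sorted(risks, key=lambda risk: risk.rpn, reverse=True)


if __name__ == "__main__":
    # Hypothetical risks and ratings, for illustration only.
    sample = [
        Risk("Regional network isolation", severity=9, probability=2, detectability=3),
        Risk("Bad deployment corrupts data", severity=8, probability=4, detectability=6),
        Risk("Expired TLS certificate", severity=6, probability=3, detectability=2),
    ]
    for risk in prioritize(sample):
        print(f"{risk.rpn:>4}  {risk.name}")
```

Sorting by RPN gives you a starting order for discussion. You would still review the individual ratings, because very different risks can share the same product.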

Resiliency and multi-Region strategies on AWS

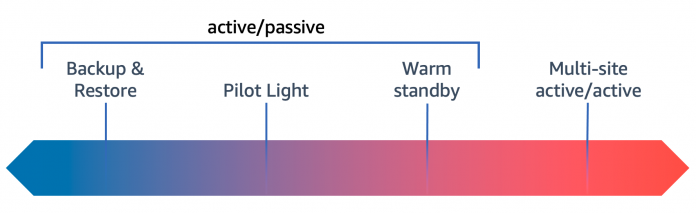

Your resiliency strategy should prioritize applications and services that have the largest impact on cost and business risks. For example, your Tier 1 applications will require a multi-Region solution to satisfy the availability requirement of five nines. The RTO and RPO for your applications will inform your decision of which of the following disaster recovery approaches to select: backup and restore, pilot light, warm standby, or active/active (as shown in Figure 1 that follows).

Figure 1: Disaster recovery approaches from lowest priority to mission critical

Backup and restore – RPO/RTO: hours. Lower priority use cases. Provision all AWS resources after event; restore backups after event. Cost: $

Pilot light – RPO/RTO: 10s of minutes. Data live, services idle. Provision some AWS resources and scale after event. Cost: $$

Warm standby – RPO/RTO: minutes. Business critical services. Always running, but smaller. Scale AWS resources after event. Cost: $$$

Multi-site active/active – RPO/RTO: real-time. Mission-critical services. Zero downtime, near zero data loss. Cost: $$$$

On one end of the spectrum, the active/passive backup and restore strategy is the simplest and has the lowest cost, but the highest RTO and RPO. On the other end, the active/active strategy has the lowest RTO and RPO, but the highest cost and complexity. For more information, see Disaster recovery options in the cloud.
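As a rough illustration of how RTO and RPO can inform this choice, the following sketch maps the tighter of the two objectives to one of the four approaches. The numeric cutoffs are our own assumptions, loosely derived from the orders of magnitude in Figure 1 (hours, tens of minutes, minutes, real-time). They are not AWS guidance, and `suggest_dr_strategy` is a hypothetical helper. A real decision would also weigh cost, complexity, and availability requirements.

```python
from datetime import timedelta

# Hypothetical cutoffs, ordered from the most relaxed objective to the strictest.
# Each entry means: "if the tightest objective is at least this long, this
# approach may be sufficient."
STRATEGY_FLOORS = [
    (timedelta(hours=1), "backup and restore"),
    (timedelta(minutes=10), "pilot light"),
    (timedelta(minutes=1), "warm standby"),
]
STRICTEST_STRATEGY = "multi-site active/active"


def suggest_dr_strategy(rto: timedelta, rpo: timedelta) -> str:
    """Suggest a disaster recovery approach from the tighter of RTO and RPO."""
    tightest = min(rto, rpo)
    for floor, strategy in STRATEGY_FLOORS:
        if tightest >= floor:
            return strategy
    return STRICTEST_STRATEGY


if __name__ == "__main__":
    # Tier 1 and Tier 2 objectives from the example classification earlier in this post.
    print(suggest_dr_strategy(timedelta(hours=2), timedelta(seconds=30)))
    print(suggest_dr_strategy(timedelta(hours=8), timedelta(hours=4)))
```

With these assumed cutoffs, the 30-second RPO of the example Tier 1 application drives the suggestion toward multi-site active/active, even though its RTO is 2 hours.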

Evolutionary approach: From a single Region to multiple Regions

To get started, choose one of the business-critical applications you identified earlier. Your multi-Region strategy should be closely aligned with your business goals and objectives. In our experience, it helps to build a roadmap that describes your evolution from a single Region to multiple Regions. It’s better to start small, measure, and determine which areas you need to improve before you decide to scale.

Let’s say that your end goal is to implement an active/active multi-Region strategy. Instead of tackling the application as a whole, you could take a particular use case—a vertical slice of your architecture—and gradually implement the requirements of the active/passive pilot light strategy. You can then build on the lessons you learned and the processes you automated to implement the more complex requirements of the warm standby strategy. From that point, you can continue to iterate, measure, learn, and automate. With that strong foundation of automated design patterns and best practices, you can then proceed to implement the requirements of the multi-site active/active strategy, which is the most complex architecture.

With this approach, you can move towards your end goal by running small experiments that quickly produce measurable outcomes, one iteration at a time. During each of these experiments, we recommend using the AWS Well-Architected Framework to iteratively implement the best practices and core strategies for designing and operating reliable, secure, efficient, and cost-effective applications on AWS. This framework is based on the years of experience AWS has architecting thousands of solutions across a wide variety of business verticals and use cases.

Thanks to the AWS global infrastructure, you can incrementally build, measure, and learn after each experiment in a cost-effective manner. You can provision only the resources that you need, knowing you can instantly scale up or down along with the needs of your business. This also reduces cost and improves your ability to meet your users’ demands.

As of this writing, AWS has a global network that spans 99 Availability Zones within 31 geographic regions around the world, with announced plans for 12 more Availability Zones and 4 more AWS Regions in Canada, Israel, New Zealand, and Thailand.

Why DynamoDB?

DynamoDB is a fully managed, serverless database that delivers low latency at any scale and allows you to manage capacity elastically, in real time. Hundreds of thousands of AWS customers across different industries have chosen DynamoDB for their mission-critical and other applications. Financial services, commerce, AdTech, Internet of Things (IoT), and gaming applications (to name a few) rely on DynamoDB to store trillions of items, return results in single-digit milliseconds, and manage millions of requests per second from millions of users and smart devices around the world.

For example, DynamoDB powers multiple high-traffic Amazon properties and systems including Alexa, the Amazon.com sites, and all Amazon fulfillment centers. During Amazon Prime Day 2022, these systems made trillions of calls to the DynamoDB API, peaking at 105.2 million requests per second, while DynamoDB maintained high availability and delivered single-digit millisecond responses. With DynamoDB, you too can build this kind of any-scale application with minimal effort.

Experienced database professionals know that scaling the capacity of a production database is cumbersome. It could impact the performance of your applications, and might even result in downtime. Moreover, depending on the underlying database technology, scaling can take several hours or even days. DynamoDB allows you to change your table’s capacity on the fly. You can easily increase or decrease capacity based on the application traffic patterns. What’s more, the cross-Region replication capability of global tables is built in and fully managed by the service. Let’s take a closer look at DynamoDB capabilities, starting with a single Region.

DynamoDB in a single Region

If you’re new to DynamoDB, the best way to get started is to create a DynamoDB table in a single Region. AWS documentation provides several examples and tutorials to help you develop DynamoDB-backed applications. When used in a single Region, DynamoDB provides the following benefits:

Performance and scalability – DynamoDB can handle more than 10 trillion requests per day and can support peaks of more than 20 million requests per second with minimal database management. It performs as expected with little variation in response time as defined by your table’s capacity mode. DynamoDB has two capacity modes for processing reads and writes on your tables:

Provisioned mode – With provisioned mode, you specify the number of reads and writes per second that you expect your application to require. Provisioned mode is more cost-effective when you’re confident you will have decent utilization of the provisioned capacity you specify. You can also use auto scaling to adjust your table’s provisioned capacity automatically in response to traffic changes, which lets you stay at or below a defined request rate in order to obtain cost predictability.

On-demand mode – With on-demand mode, DynamoDB takes care of managing capacity for you. You don’t need to specify how much read and write throughput you expect your application to perform. It instantly accommodates your applications as they ramp up or down. On-demand mode is ideal for applications that are less predictable and when you’re unsure that you will have high utilization—you only pay for what you consume.
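To show how the two capacity modes differ when you create a table, the following sketch builds the request parameters for the DynamoDB `CreateTable` operation. It only constructs a dictionary, so it runs without AWS credentials. The `build_create_table_params` helper, the table name, the key name, and the capacity values are hypothetical.

```python
def build_create_table_params(table_name, partition_key, on_demand=True,
                              read_units=5, write_units=5):
    """Build CreateTable parameters for either on-demand or provisioned mode."""
    params = {
        "TableName": table_name,
        # Assumes a simple primary key: a single string partition key.
        "AttributeDefinitions": [
            {"AttributeName": partition_key, "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": partition_key, "KeyType": "HASH"},
        ],
    }
    if on_demand:
        # On-demand mode: no throughput to specify; you pay per request.
        params["BillingMode"] = "PAY_PER_REQUEST"
    else:
        # Provisioned mode: you state the expected reads and writes per second.
        params["BillingMode"] = "PROVISIONED"
        params["ProvisionedThroughput"] = {
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        }
    return params


if __name__ == "__main__":
    print(build_create_table_params("Orders", "order_id"))
    print(build_create_table_params("Orders", "order_id", on_demand=False,
                                    read_units=100, write_units=50))
```

If you have the AWS SDK for Python (Boto3) installed and credentials configured, you could pass the result to `boto3.client("dynamodb").create_table(**params)`. Auto scaling for provisioned tables is configured separately and is not shown here.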

High availability and durability – DynamoDB was designed with resiliency in mind. It provides high availability and durability with a 99.99% uptime service level agreement (SLA) within a single Region. Your data in DynamoDB is reliably stored to solid-state disks (SSDs) and automatically replicated across three Availability Zones in a Region. DynamoDB also automatically spreads the data and traffic for your tables over a sufficient number of servers to handle your throughput and storage requirements, while maintaining fast performance and consistency. Speaking of consistency, DynamoDB supports three models of read consistency: eventually consistent, strongly consistent, and transactional.

Backup and restore – To support your data resiliency and backup needs, DynamoDB provides two types of fully managed backups:

On-demand backup – You can use on-demand backups to create full backups of your tables for long-term data archival and compliance. These backups are retained until explicitly deleted. Regardless of table size, backups complete in seconds. You can back up and restore your table data anytime, from the AWS Management Console or through the AWS API, with no impact on table performance or availability. If you prefer, you can use DynamoDB integration with AWS Backup (see Using AWS Backup with DynamoDB) to create backup plans with schedules and retention policies for your tables, and even with cross-account and cross-Region copying.

Point-in-time recovery – Point-in-time recovery protects your data from accidental write or delete operations due to application errors. DynamoDB maintains incremental backups of your table so you don’t have to worry about creating, maintaining, or scheduling on-demand backups. You can restore data up to any point in time within the last 35 days. In case of accidental writes or deletes, you can recover your original data by identifying the item keys, restoring the table to the last known good point in time, and selectively reading the correct data from the restored table and writing it to your production table.
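The first step of that recovery procedure, restoring the table to a known good point in time, can be sketched as follows. The example checks that the requested time falls inside the 35-day window and builds parameters for the DynamoDB `RestoreTableToPointInTime` operation. The `build_pitr_restore_params` helper and the table names are hypothetical. The sketch also assumes that point-in-time recovery was already enabled on the source table for the whole period, which the code can’t verify locally.

```python
from datetime import datetime, timedelta, timezone

PITR_WINDOW = timedelta(days=35)  # restore window described above


def build_pitr_restore_params(source_table, target_table, restore_to, now=None):
    """Build RestoreTableToPointInTime parameters after a basic window check.

    `restore_to` and `now` must be timezone-aware datetimes.
    """
    now = now or datetime.now(timezone.utc)
    if restore_to > now:
        raise ValueError("restore time is in the future")
    if now - restore_to > PITR_WINDOW:
        raise ValueError("restore time is outside the 35-day recovery window")
    return {
        "SourceTableName": source_table,
        # The restore creates a new table; the production table is not modified.
        "TargetTableName": target_table,
        "RestoreDateTime": restore_to,
    }


if __name__ == "__main__":
    last_known_good = datetime.now(timezone.utc) - timedelta(hours=6)
    print(build_pitr_restore_params("Orders", "Orders-restored", last_known_good))
```

With Boto3, the resulting dictionary could be passed to `boto3.client("dynamodb").restore_table_to_point_in_time(**params)`. Reading the correct items from the restored table and writing them back to production remains application-specific.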

Security and compliance – Security is a shared responsibility between AWS and your organization, as described in the AWS shared responsibility model. DynamoDB protects user data stored at rest, data in transit between clients and DynamoDB, and between DynamoDB and other AWS resources that reside in the same Region. Also, your company has complete control of who can be authenticated and authorized to use DynamoDB resources. Your compliance responsibility when using DynamoDB is determined by the sensitivity of your data, your organization’s compliance objectives, and applicable laws and regulations. AWS works with third-party auditors to assess the compliance of DynamoDB as part of multiple AWS compliance programs, including System and Organization Controls (SOC), Payment Card Industry (PCI), Federal Risk and Authorization Management Program (FedRAMP), and the Health Insurance Portability and Accountability Act (HIPAA), among others. For an up-to-date list of AWS services in scope of specific compliance programs, refer to AWS Services in Scope by Compliance Program. For more details on how to configure DynamoDB to meet your security and compliance objectives, refer to Security and compliance in Amazon DynamoDB.

DynamoDB in multiple Regions: Global tables

DynamoDB uses the AWS global backbone to provide you with fully managed, multi-Region, multi-active data replication, through a feature called global tables. A global table consists of multiple replica tables, one per Region, as shown in Figure 2 that follows. Every replica has the same table name and the same primary key. You can create a global table using the console, through the AWS Command Line Interface (AWS CLI), or the AWS SDK. The current version of global tables, version 2019.11.21, allows you to convert a single-Region table to a global table.

Figure 2: One global table replicated across three Regions
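As a sketch of what converting a single-Region table looks like through the SDK, the following example builds `UpdateTable` requests that add replicas with the `ReplicaUpdates` parameter, which applies to version 2019.11.21. The `build_add_replica_requests` helper, the table name, and the Region list are hypothetical. We build one request per Region on the assumption that replicas are added one at a time and that you wait for the table to become active between calls. Check the current DynamoDB documentation for prerequisites, such as stream settings, before relying on this.

```python
def build_add_replica_requests(table_name, replica_regions):
    """Build one UpdateTable request per Region to add global table replicas."""
    requests = []
    for region in replica_regions:
        requests.append({
            "TableName": table_name,
            # A single Create action per request (an assumption, see above).
            "ReplicaUpdates": [{"Create": {"RegionName": region}}],
        })
    return requests


if __name__ == "__main__":
    # Hypothetical setup: the table already exists in one Region and
    # gains two replicas, for three Regions in total as in Figure 2.
    for request in build_add_replica_requests("Orders", ["us-west-2", "eu-west-1"]):
        print(request)
```

With Boto3, each request could be sent from the Region that holds the existing table using `boto3.client("dynamodb", region_name=...).update_table(**request)`.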

In addition to the benefits of a single-Region DynamoDB table, global tables provide you with the following unique benefits:

Global data access with local reads and writes – Global tables enable you to read data from and write data to any Region. DynamoDB replicates your data asynchronously to other Regions, typically within 1 second. Data replication doesn’t impact the performance of your application writes. With a global table, each replica table stores the same set of data items, and your data is eventually consistent in all Regions. While your application can perform strongly consistent reads in the same Region, the reads of data replicated from other Regions of your global table are always eventually consistent, due to the asynchronous nature of data replication. Transactional operations provide ACID guarantees only in the Region where the write was originally made.

Resiliency – Global tables provide a 99.999% uptime SLA and allow you to build disaster-proof solutions with multi-Region resiliency. Your application can implement custom logic to detect when a global table’s Region becomes isolated or degraded in order to redirect reads and writes to a different Region. In addition, DynamoDB tracks any writes that have been performed but haven’t yet been propagated to other Regions. If, for some reason, the communication gets interrupted, DynamoDB propagates any pending writes when the Region comes back online.

Conflict resolution – Write conflicts can occur when writes to the same item in a global table are made simultaneously in two different Regions. To ensure data consistency, DynamoDB global tables use a last-writer-wins conflict resolution mechanism, so all the replica tables agree on the latest update and converge toward a state in which they all have identical data.
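The idea behind last-writer-wins can be illustrated with a small, generic simulation. This is not DynamoDB’s internal implementation, which this post doesn’t describe. The `Write` class, the use of a timestamp, and the Region-name tie-breaker are assumptions made so that the example is deterministic.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class Write:
    timestamp: float  # time of the write, in seconds (assumed comparable across Regions)
    region: str       # Region where the write was made
    value: str        # new value of the item


def last_writer_wins(a: Write, b: Write) -> Write:
    """Keep the later write; break exact ties by Region name (an assumption)."""
    return max(a, b, key=lambda w: (w.timestamp, w.region))


def converge(writes):
    """Resolve any number of conflicting writes to a single winner."""
    if not writes:
        raise ValueError("at least one write is required")
    return reduce(last_writer_wins, writes)


if __name__ == "__main__":
    east = Write(timestamp=100.0, region="us-east-1", value="blue")
    west = Write(timestamp=100.5, region="us-west-2", value="green")
    # Each replica may see the two writes in a different order,
    # but every replica settles on the same final value.
    print(converge([east, west]).value)
    print(converge([west, east]).value)
```

Because the rule doesn’t depend on the order in which a replica receives the writes, all replicas end up with identical data. The trade-off is that the earlier of two concurrent writes is discarded, so your application design should account for that.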

Operational efficiency – Global tables eliminate the difficult work of replicating data so you can focus on your application’s business logic. You can monitor DynamoDB using Amazon CloudWatch (see DynamoDB Metrics and dimensions) and track global tables replication delays using the ReplicationLatency metric. ReplicationLatency is expressed in milliseconds and is emitted for every source-Region/destination-Region pair.
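As an illustration of tracking replication delay, the following sketch builds a CloudWatch `GetMetricStatistics` query for `ReplicationLatency` and applies a simple threshold to the returned data points. The `AWS/DynamoDB` namespace and the `TableName` and `ReceivingRegion` dimensions reflect our understanding of the CloudWatch metrics for DynamoDB, so verify them against the current documentation. The helper names, the 5-second threshold, and the choice to treat missing data as a problem are assumptions.

```python
from datetime import datetime, timedelta, timezone


def build_replication_latency_query(table_name, receiving_region, end_time,
                                    window=timedelta(minutes=15), period_seconds=60):
    """Build GetMetricStatistics parameters for one source/destination pair.

    The query is meant to be sent to CloudWatch in the source Region.
    """
    return {
        "Namespace": "AWS/DynamoDB",
        "MetricName": "ReplicationLatency",
        "Dimensions": [
            {"Name": "TableName", "Value": table_name},
            {"Name": "ReceivingRegion", "Value": receiving_region},
        ],
        "StartTime": end_time - window,
        "EndTime": end_time,
        "Period": period_seconds,
        "Statistics": ["Average"],
    }


def is_replication_lagging(datapoints, threshold_ms=5000.0):
    """Return True if any data point exceeds the threshold (in milliseconds).

    Missing data is treated as lagging so that it gets investigated
    (an assumption; your application might prefer a different policy).
    """
    if not datapoints:
        return True
    return max(point["Average"] for point in datapoints) > threshold_ms


if __name__ == "__main__":
    query = build_replication_latency_query(
        "Orders", "eu-west-1", datetime.now(timezone.utc))
    print(query)
    # Hypothetical data points, shaped like the "Datapoints" list in the response.
    print(is_replication_lagging([{"Average": 800.0}, {"Average": 950.0}]))
```

With Boto3, you could call `boto3.client("cloudwatch", region_name=...).get_metric_statistics(**query)` and pass the `Datapoints` list from the response to `is_replication_lagging`. In practice, a CloudWatch alarm on the same metric is often a simpler way to get notified.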

From a cost perspective, you pay the usual DynamoDB prices for read capacity and storage, along with data transfer charges for cross-Region replication. Write capacity is billed in terms of replicated write capacity units. Refer to Amazon DynamoDB pricing for more details.

Conclusion

In this first installment of the series of posts on accelerating your multi-Region strategy with DynamoDB, we discussed how to approach building a multi-Region strategy, starting with identifying drivers, prioritizing applications and services, and defining resiliency objectives. We emphasized the importance of resiliency as an integral part of a good multi-Region strategy, and covered the disaster recovery approaches on AWS. Finally, we introduced DynamoDB as a fully managed, serverless database service that provides multi-active, multi-Region replication, and described its capabilities in both single-Region and multi-Region contexts.

The next installment of the series will focus on multi-Region strategies and use cases using DynamoDB.

About the Authors

Edin Zulich leads a team of Database solutions architects at AWS. He has helped many customers in all industries design scalable and cost-effective solutions to challenging data management problems. Edin has been with AWS since 2016, and has worked on and with distributed data technologies since 2005.

Guillermo Tantachuco is a Principal Solutions Architect at AWS, where he works with Financial Services customers on all aspects of software delivery and internet-scale systems, including application and data architecture, DevOps, defense in-depth, and fault tolerance. Since 2011, he has led the delivery of cloud-native and digital transformation initiatives at Fortune 500 and global organizations. He is passionate about family, business, technology, and soccer.
