Whether you’re building your very first pipeline or you’re an old pro, these best practices can help you build data pipelines that are easy to understand and therefore easy to maintain and extend.

Design Data Pipelines for Simplicity

Reduce complexity in the design wherever possible, a principle borrowed from software development. When reviewing your pipeline design, ask yourself whether you could do the same thing with fewer processors. If the answer is yes, remove the extras and consolidate. Doing so makes pipelines easier to maintain in the future. In a visual tool, simplicity lets you understand what a pipeline is trying to accomplish at a single glance. Counterintuitively, it often takes more time and thought to develop a simple design than a complex one.

Consider breaking up pipelines by function. For example, use one pipeline to load data into your data warehouse and another to transform the data and move it into your reporting schema. Breaking up pipelines this way makes issues easier to spot and correct, and it makes future maintenance easier because the objective of each pipeline is more obvious. Simple is better.
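The same split can be sketched in plain Python. This is only an illustration of the idea, not StreamSets code: the function names, the record shape, and the field names are all hypothetical.

```python
# Hypothetical sketch: two small single-purpose "pipelines" instead of one large one.

def load_to_warehouse(raw_rows):
    """Pipeline 1: land raw records as-is, dropping only empty ones."""
    return [dict(row) for row in raw_rows if row]


def transform_for_reporting(warehouse_rows):
    """Pipeline 2: reshape warehouse rows for the reporting schema."""
    return [
        {"customer_id": row["id"], "order_total_usd": round(row["amount"], 2)}
        for row in warehouse_rows
    ]


# Made-up sample records, for illustration only.
raw = [{"id": 1, "amount": 19.999}, {}, {"id": 2, "amount": 5.0}]
warehouse = load_to_warehouse(raw)
report = transform_for_reporting(warehouse)
print(report)
```

Because each function has one objective, a problem in the reporting output points at the second step without requiring a re-read of the loading logic.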

Labeling and Naming Conventions

Labels are important in a few ways. First, individual processors should have descriptive, unambiguous names. You should be able to tell what a processor is doing just by looking at its name. Second, field names should follow the same convention. Naming fifteen new fields field1, field2, field3, and so on is a quick way to lose track of what a pipeline is trying to accomplish. Finally, on very complex pipelines, use notes on the pipeline canvas to point out important features that collaborators, or you yourself months from now, might otherwise miss.

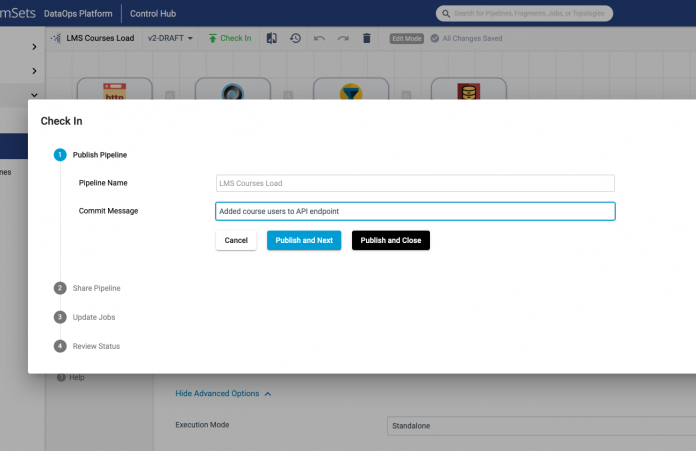
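One way to keep field names honest is a small check that flags placeholder-style names. The sketch below is a standalone illustration, not a StreamSets feature; the pattern and the list of placeholder words are assumptions you would adapt to your own conventions.

```python
import re

# Assumed rule: a placeholder name is a generic word optionally followed by digits.
PLACEHOLDER = re.compile(r"^(field|col|column|tmp|temp|var|new)_?\d*$", re.IGNORECASE)


def find_placeholder_names(field_names):
    """Return the names that look like placeholders rather than descriptions."""
    return [name for name in field_names if PLACEHOLDER.match(name)]


# Hypothetical field list, for illustration only.
fields = ["field1", "field2", "customer_id", "order_total_usd", "tmp"]
flagged = find_placeholder_names(fields)
print(flagged)
```

With this sample list, the three generic names are flagged and the two descriptive ones pass.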

Checking in Data Pipelines and Commit Messages

The best practice for checking in pipelines is to do it early and often. There is sometimes a fear that checking in should only be done when the pipeline is ready for final review, but in reality you might need to roll back to any point in the process, regardless of how long ago a change was made. Check in after each significant change, and write short, clear commit messages with titles that will help you select the correct draft if a rollback is needed.

Testing Your Pipelines

Make use of the preview button and test often. Make sure to clear the Write to Destinations and Executors checkbox if you’re not quite ready to send your data to your final landing zone. Test individual processors as you develop by using the Trash destination to view how the data is composed as it flows through the pipeline. These are great features of StreamSets, and you can rely on them to make development work more efficient.

Use Data Parameters Wisely

Use Parameters to reuse helpful values like table names and schemas. There is no sense in retyping these frequently used values over and over. Never include passwords or other sensitive information as parameters, because parameter values are not hidden when you export your pipelines. Use Connections or the secrets manager of your choice for sensitive fields instead.
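The separation between reusable parameters and secrets can be sketched in plain Python. This is a generic illustration rather than StreamSets configuration: the parameter names, the environment variable, and the helper functions are hypothetical, and the environment stands in for whatever secrets manager you use.

```python
import os
from string import Template

# Non-sensitive, frequently reused values: fine to keep as plain parameters.
PARAMETERS = {"SCHEMA": "reporting", "TABLE": "daily_orders"}


def render_query(template, parameters):
    """Fill ${NAME} placeholders from the parameter dictionary."""
    return Template(template).substitute(parameters)


def get_secret(name):
    """Look up a secret at run time instead of storing it with the pipeline."""
    value = os.environ.get(name)
    if value is None:
        raise RuntimeError(f"secret {name!r} is not set")
    return value


query = render_query("SELECT * FROM ${SCHEMA}.${TABLE}", PARAMETERS)
print(query)
```

Exporting `PARAMETERS` reveals nothing sensitive, while the password never appears in the exported definition because it is only resolved when the code runs.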

The post 5 Best Practices for Building Data Pipelines appeared first on StreamSets.
