Many customers use AWS Backup to automatically create Amazon Relational Database Service (Amazon RDS and Aurora) database snapshots. RDS database snapshots are a convenient way to protect your data and make it easy to recover in the event of an accident or disaster. If you’re using RDS Snapshots for long-term archival to meet compliance requirements rather than for point-in-time recovery, it’s essential to consider cost-saving measures. Utilizing cold storage for data that isn’t frequently accessed can be a way to reduce storage costs. However, AWS Backup doesn’t offer this option for RDS database snapshots at the time of this writing.

In this post, I show you a serverless event-driven solution that integrates AWS Backup with the Amazon RDS export feature to automate export tasks and enables you to query the data using Amazon Athena without provisioning a new RDS instance.

This solution is not a replacement for snapshot feature which provides point-in-time backup/restore of your database. You cannot restore RDS snapshot exports directly to an RDS or Aurora database, but you can query Parquet files using Athena. This makes this approach a good fit for long-term archival of data on low-cost storage. Although this post walks you through automating the export from a snapshot for Amazon RDS databases, this solution will also work for Amazon Aurora with the same benefits.

Amazon RDS can export data to an Amazon Simple Storage Service (Amazon S3) bucket by extracting data from the snapshot. This export feature is supported by RDS for MariaDB, MySQL and PostgreSQL for specific engine versions. All currently available Aurora MySQL and PostgreSQL engine versions also support exporting DB cluster snapshot data to Amazon S3.

It has many cost benefits:

It stores only the data content, and not the whole volume in an S3 bucket.

It can store the data in Parquet format, which is a compressed columnar storage format optimized for big data processing. It’s designed to improve performance and reduce storage costs compared to other formats like CSV.

You can archive the exported data to cold storage such as Amazon S3 Glacier or Glacier Deep Archive.

Currently, the Amazon RDS snapshot export feature is not integrated with AWS Backup. However, you can automate it using Amazon EventBridge and AWS Lambda.

Overview of solution

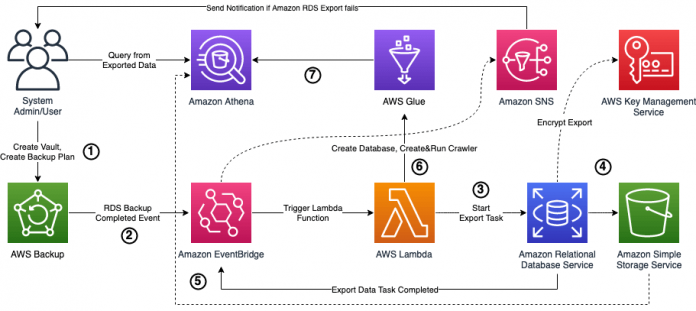

The following diagram illustrates the architecture of the solution.

Let’s go through the steps shown in the diagram above:

You create an AWS Backup backup plan which will put database snapshots to the vault created by the technical solution.

In this solution, I use AWS Backup as a signal source for an EventBridge rule.

The EventBridge rule triggers an AWS Lambda function which starts export task for the database. This solution uses AWS Key Management Service (AWS KMS) to encrypt the database exports in Amazon S3.

This solution uses Amazon Simple Storage Service (Amazon S3) to store the database exports.

The EventBridge rule triggers a Lambda function when the export task is completed. Another EventBridge rule uses Amazon Simple Notification Service (Amazon SNS) to send email if export task fails.

The Lambda function uses AWS Glue to create a database, crawler and runs it.

After the crawler successfully runs, you can use Amazon Athena to query the data directly in Amazon S3.

If you don’t need to query data export using Athena, then you can choose to skip the creation of resources for step 5, 6, and 7 when deploying the AWS CloudFormation template.

Create solution resources with AWS CloudFormation

To get started, create the solution resources using a CloudFormation template:

You can download the CloudFormation template YAML file from the GitHub repository or launch the CloudFormation stack by choosing Launch Stack.

For Stack name, enter a name.

For KMS Key Configuration, choose if you want a new KMS key to be created as part of this solution. If you already have an existing KMS key that you want to use, choose No.

If you choose No for KMS key creation, it is mandatory to enter a valid KMS key ID to be used by the solution. You need to configure key users manually after the solution deployed. Leave this field blank if you chose Yes for KMS Key Configuration.

Under RDS Export Configuration, enter a valid email address to receive notification when an S3 export task failed.

You can enter schema, database, or table names if you want only specific objects to be exported in comma-separated list. Otherwise, leave this field blank for all database objects to be exported. Otherwise, leave this field blank for all database objects to be exported. You can find more details about this parameter in the AWS Boto3 documentation.

If you choose Yes, the solution will make exports automatically available in Athena.

Choose Next.

Accept all the defaults and choose Next.

Acknowledge the creation of AWS Identity and Access Management (IAM) resources and choose Submit.

The stack creation starts with the status Create in Progress and takes approximately 5 minutes to complete.

On the Outputs tab, take note of the following resource names:

BackupVaultName

IamRoleForGlueService

IamRoleForLambdaBackupCompleted

IamRoleForLambdaExportCompleted

SnsTopicName

If you decided to use an existing KMS key, you need to give the IAM roles you took note of in step 11 access to your existing KMS key. You can do that by using the AWS Management Console default view or policy view.

Check your email inbox and choose Confirm subscription in the email from Amazon SNS. Amazon SNS opens your web browser and displays a subscription confirmation with your subscription ID.

Now you’re ready to create an RDS for Maria DB instance with pre-loaded data, run an on-demand backup job, and query the exported data using Athena.

Create an RDS for MariaDB instance

You create an RDS for MariaDB instance running on db.t4g.micro with 20 GB GP3 Amazon Elastic Block Store (Amazon EBS) storage. The CloudFormation template creates a sample database with pre-loaded data after creating the RDS instance. You can skip this step if you already have an RDS instance with data in it that supports the export snapshots to Amazon S3 feature.

Launch the CloudFormation stack by choosing Launch Stack.

For Stack name, enter a name.

For Choose VPC, choose the VPC in which the test RDS for MariaDB instance will be created.

For Choose Subnet, choose the subnet in which the test RDS for MariaDB instance will be created.

For Master username, enter the master username for the test RDS for MariaDB instance will be created.

Choose Next.

Accept all the defaults and choose Next.

Acknowledge the creation of IAM resources and choose Submit.

The stack creation starts with the status Create in Progress and takes approximately 10 minutes to complete.

On the Outputs tab, take note of the RDS instance name.

Create an on-demand backup with AWS Backup

To create an on-demand backup, which simulates a periodic backup, complete the following steps:

On the AWS Backup console, choose Dashboard in the navigation pane.

Choose Create on-demand backup.

For Resource type, select RDS.

For Database name, select the RDS instance you just created using the CloudFormation template.

Select Create backup now.

Set the Retention period to 1 day, because you don’t need it after you complete the test.

For Backup vault, select the vault created by the CloudFormation template.

Leave all other parameters as is and choose Create on-demand backup.

Monitor the backup job

You can monitor the on-demand backup on the AWS Backup console:

On the AWS Backup console, choose Jobs in the navigation pane. You can see the backup corresponding to the resource ID.

Wait until the job is complete (choose the refresh icon periodically until you see the status change to Complete). The job make take up to 15 minutes.

Take note of the backup job ID.

Monitor the export task

To monitor the export task that is created after the backup job is finished, complete the following steps:

On the Amazon RDS console, choose Exports in Amazon S3 in the navigation pane.

Enter the backup job ID that you noted previously to search for this job.

Choose Snapshot as the source type.

You can see the export task corresponding to your backup job ID.

Wait until the export task is complete (choose the refresh icon periodically until you see the status change to Complete). The export task may take up to 30 minutes.

Monitor the AWS Glue crawler

Skip this step if you chose not to make exports automatically available in Athena. To monitor the AWS Glue crawler that is created after the export task is finished, complete the following steps:

On the AWS Glue console, choose Crawlers in the navigation pane.

Search for a crawler with the naming format rds_<engine_type>_<rds_instance_id>_<timestamp_of_snapshot>_crawler.

Wait until the crawler is complete (choose the refresh icon periodically until you to see the status change to Succeeded).

It may take up to 2 minutes for the crawler to crawl the test database. This duration depends on the data size.

Query data using Athena

Skip this step if you chose not to make exports automatically available in Athena. To query the database using Athena, complete the following steps:

On the Athena console, navigate to the query editor.

If you’re running a query from Athena for the first time, you need to specify a query result location using the Athena console.

For Database, choose your database (name format rds_<engine_type>_<rds_instance_id>_<timestamp_of_snapshot>).

For Tables, choose the options menu (three dots) for the mydatabase table.

Choose Preview Table to query the first 10 records in the table.

You can see the records in the Results pane.

Compare costs between using an RDS database snapshot and exporting to Amazon S3

Following cost comparisons assume the database metrics and settings listed below:

Database storage size – 50 GB

Data size – 43 GB

Daily change – 2%

Monthly change – 34.89 GB (81.14% monthly growth rate)

Backup period – Monthly

Backup retention – 2 years

The first 50 GB of RDS database snapshot storage will not incur charges, assuming no other RDS databases exist in the specified AWS account and region. Any snapshot storage over 50 GB will incur a monthly fee of $0.095 per GB. This rate is based on the eu-west-1 region.

I compared costs by creating an Amazon RDS for PostgreSQL instance with a new database, 6 tables, and 25 columns each, along with 10 million records per table. The estimated storage cost for 2 years using RDS database snapshots is $1,008.70, due to the cumulative monthly data growth.

Alternatively, using the Amazon S3 export feature for data archival instead of full database image archival yields significant cost savings. By exporting the database data in Parquet format, each export is 22.3 GB in size and incurs a monthly fee of $0.47 ($0.011 per processed GB). The AWS Glue crawler charges $0.44 per DPU, with a monthly cost of $0.06 based on 0.132 DPU used for each export. This comparison assumes you make a full scan for each export every month. Athena charges $5 per TB of data scanned. 22.3 GB yields $0.11/month cost. The costs associated with Lambda and AWS KMS have not been taken into consideration because they’re negligible. The main contributor to cost with this approach is S3 Standard storage, which you can further reduce by moving data to the S3 Standard-Infrequent Access storage class after 1 month and to S3 Glacier Instant Retrieval after 2 months. This cost estimation assumes you will retrieve the entire 22.3 GB of data every month. The following chart shows the costs of the different backup approaches.

The following table summarizes our cost comparison.

.

RDS Database Snapshot

Amazon S3 Export with S3 Standard

Amazon S3 Export with S3 Standard-Infrequent Access

S3 Export with S3 Glacier Instant Retrieval

2 years’ total cost including other service costs

$1,008.07

$169.23

$104.34

$58.18

Clean up

To avoid incurring future charges, delete the resources you created:

On the AWS Backup console, delete the recovery points.

On the Amazon S3 console, empty the S3 bucket created by the CloudFormation template to store the RDS database exports.

On the AWS CloudFormation console, delete the stacks that you created for the solution and RDS instance.

Conclusion

In this post, I introduced a technical solution that can help you store your all RDS or Aurora database exports on Amazon S3 periodically and make them available on Athena. This solution can work for all RDS or Aurora database snapshots taken using AWS Backup, which uses the backup vault created by the CloudFormation template.

Before you use this solution, ensure your RDS instance supports exporting snapshots to Amazon S3. There might be cases when tables or rows can be excluded from the export because using incompatible data types. Review the feature limitations for RDS and Aurora, test the data consistency between the source database and the exported data from Athena.

About the Author

Burak Alakus is Sr. Technical Account Manager at AWS with over 15 years of IT experience. He is passionate about utilizing his coding and automation skills to tackle any problem that comes his way. Away from work, he enjoys playing the guitar and keyboard, and jamming with friends.

Read MoreAWS Database Blog