AWS customers are increasingly using computer vision (CV) models for improved efficiency and an enhanced user experience. For example, a live broadcast of sports can be processed in real time to detect specific events automatically and provide additional insights to viewers at low latency. Inventory inspection at large warehouses capture and process millions of images across their network to identify misplaced inventory.

CV models can be built with multiple deep learning frameworks like TensorFlow, PyTorch, and Apache MXNet. These models typically have a large input payload of images or videos of varying size. Advanced deep learning models for use cases like object detection return large response payloads ranging from tens of MBs to hundreds of MBs in size. Large request and response payloads can increase model serving latency and subsequently negatively impact application performance. You can further optimize model serving stacks for each of these frameworks for low latency and high throughput.

Amazon SageMaker helps data scientists and developers prepare, build, train, and deploy high-quality machine learning (ML) models quickly by bringing together a broad set of capabilities purpose-built for ML. SageMaker provides state-of-the-art open-source serving containers for XGBoost (container, SDK), Scikit-Learn (container, SDK), PyTorch (container, SDK), TensorFlow (container, SDK) and Apache MXNet (container, SDK).

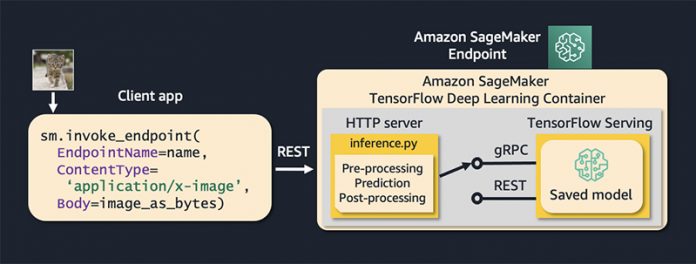

In this post, we show you how to serve TensorFlow CV models with SageMaker’s pre-built container to easily deliver high-performance endpoints using TensorFlow Serving (TFS). As with all SageMaker endpoints, requests arrive using REST, as shown in the following diagram. Inside of the endpoint, you can add preprocessing and postprocessing steps and dispatch the prediction to TFS using either RESTful APIs or gRPC APIs. For small payloads, either API yields similar performance. We demonstrate that for CV tasks like image classification and object detection, using gRPC inside of a SageMaker endpoint reduces overall latency by 75% or more. The code for these use cases is available in the following GitHub repo.

Models

For image classification, we use a Keras model MobileNetV2 pre-trained with 1,000 classes from the ImageNet dataset. The default input image resolution is 224*224*3 and output is a dense vector of probabilities for each of the 1,000 classes. For object detection, we use a TensorFlow2 model EfficientDet D1 [alternative URL: https://tfhub.dev/tensorflow/efficientdet/d2/1] pre-trained with 91 classes from the COCO 2017 dataset. The default input image resolution is 640*640*3, and the output is a dictionary of number of detections, bounding box coordinates, detection classes, detection scores, raw detection boxes, raw detection scores, detection anchor indexes, and detection multiclass scores. You can fine-tune both models by a transfer learning task on a custom dataset with SageMaker, and use SageMaker to deploy and serve the models.

The following is an example of image classification.

The following is an example of object detection.

Model deployment on SageMaker

The code to deploy the preceding pre-trained models is in the following GitHub repo. SageMaker provides a managed TensorFlow Serving environment that makes it easy to deploy TensorFlow models. The SageMaker TensorFlow Serving container works with any model stored in TensorFlow’s SavedModel format and allows you to add customized Python code to process input and output data.

We download the pre-trained models and extract them with the following code:

SageMaker models need to be packaged in .tar.gz format. We archive the TensorFlow SavedModel bundle and upload it to Amazon Simple Storage Service (Amazon S3):

We can add customized Python code to process input and output data via input_handler and output_handler methods. The customized Python code must be named inference.py and specified through the entry_point parameter. We add preprocessing to accept an image byte stream as input and read and transform the byte stream with tensorflow.keras.preprocessing:

After we have the S3 model artifact path, we can use the following code to deploy a SageMaker endpoint:

Calling deploy starts the process of creating a SageMaker endpoint. This process includes the following steps:

Starts initial_instance_countAmazon Elastic Compute Cloud (Amazon EC2) instances of the type instance_type.

On each instance, SageMaker does the following:

Starts a Docker container optimized for TensorFlow Serving (see SageMaker TensorFlow Serving containers).

Starts a TensorFlow Serving process configured to run your model.

Starts an HTTP server that provides access to TensorFlow Server through the SageMaker InvokeEndpoint

REST communication with TensorFlow Serving

We have complete control over the inference request by implementing the handler method in the entry point inference script. The Python service creates a context object. We convert the preprocessed image NumPy array to JSON and retrieve the REST URI from the context object to trigger a TFS invocation via REST.

gRPC communication with TensorFlow Serving

Alternatively, we can use gRPC for in-server communication with TFS via the handler method. We import the gRPC libraries, retrieve the gRPC port from the context object, and trigger a TFS invocation via gRPC:

Prediction invocation comparison

We can invoke the deployed model with an input image to retrieve image classification or object detection outputs:

We then trigger 100 invocations to generate latency statistics for comparison:

The following table summarizes our results from the invocation tests. The results show a 75% improvement in latency with gRPC compared to REST calls to TFS for image classification, and 85% improvement for object detection models. We observe that the performance improvement depends on the size of the request payload and response payload from the model.

Use case

Model

Input image size

Request payload size

Response payload size

Average invocation latency via REST

Average invocation latency via gRPC

Performance gain via gRPC

Image classification

MobileNetv2

20 kb

600 kb

15 kb

266 ms

58 ms

75%

Object detection

EfficientDetD1

100 kb

1 mb

110 mb

4057 ms

468 ms

85%

Conclusion

In this post, we demonstrated how to reduce model serving latency for TensorFlow computer vision models on SageMaker via in-server gRPC communication. We walked through a step-by-step process of in-server communication with TensorFlow Serving via REST and gRPC and compared the performance using two different models and payload sizes. For more information, see Maximize TensorFlow performance on Amazon SageMaker endpoints for real-time inference to understand the throughput and latency gains you can achieve from tuning endpoint configuration parameters such as the number of threads and workers.

SageMaker provides a powerful and configurable platform for hosting real-time computer vision inference in the cloud with low latency. In addition to using gRPC, we suggest other techniques to further reduce latency and improve throughput, such as model compilation, model server tuning, and hardware and software acceleration technologies. Amazon SageMaker Neo lets you compile and optimize ML models for various ML frameworks to a wide variety of target hardware. Select the most appropriate SageMaker compute instance for your specific use case, including g4dn featuring NVIDIA T4 GPUs, a CPU instance type coupled with Amazon Elastic Inference, or inf1 featuring AWS Inferentia.

About the Authors

Hasan Poonawala is a Machine Learning Specialist Solutions Architect at AWS, based in London, UK. Hasan helps customers design and deploy machine learning applications in production on AWS. He is passionate about the use of machine learning to solve business problems across various industries. In his spare time, Hasan loves to explore nature outdoors and spend time with friends and family.

Mark Roy is a Principal Machine Learning Architect for AWS, helping customers design and build AI/ML solutions. Mark’s work covers a wide range of ML use cases, with a primary interest in computer vision, deep learning, and scaling ML across the enterprise. He has helped companies in many industries, including insurance, financial services, media and entertainment, healthcare, utilities, and manufacturing. Mark holds six AWS certifications, including the ML Specialty Certification. Prior to joining AWS, Mark was an architect, developer, and technology leader for over 25 years, including 19 years in financial services.

Read MoreAWS Machine Learning Blog