Amazon Aurora Serverless is an on-demand, auto scaling configuration for Aurora. It scales capacity up or down based on your application’s needs, so you can run your database in the cloud without managing database capacity. Aurora Serverless v2 supports Amazon Aurora Global Database, Multi-AZ deployments, AWS Identity and Access Management (IAM) database authentication, Amazon RDS Performance Insights, and Amazon RDS Proxy. Aurora Serverless v2 is available for the MySQL 8.0-compatible and PostgreSQL 13+ compatible editions of Amazon Aurora.

In the post Scaling your Amazon RDS instance vertically and horizontally, we saw how to scale non-serverless Amazon RDS instances. In this post, we discuss use cases and best practices for using the scaling features of Aurora Serverless. There are two versions of Aurora Serverless: v1 and v2. This post focuses on v2, which was released in April 2022.

Overview

Aurora Serverless v2 is suitable when you have a variable, unpredictable, spiky, demanding, or multi-tenant application workload and it’s not feasible to manage the database capacity manually. It supports all manner of workloads, including the most demanding, business-critical environments that require high scale and high availability.

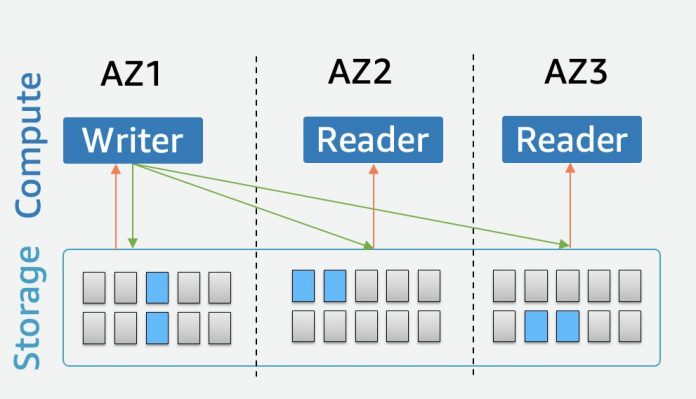

Aurora Serverless v2 is built on the same architecture as an Aurora provisioned cluster, with decoupled storage and compute layers. The purpose-built storage layer is distributed across three Availability Zones, maintains six copies of your data, and spans hundreds to thousands of storage nodes depending on the size of your database. This separation of storage and compute is crucial for enabling a serverless database.

Connect to an Aurora Serverless v2 cluster

Connecting to an Aurora Serverless v2 cluster is very similar to connecting your applications to an Aurora provisioned cluster. You usually connect an application to the cluster endpoint, which is a writer endpoint that connects to the primary instance of the cluster. You connect to the read replicas with the reader endpoint, which load-balances connections across the available Aurora replicas in the DB cluster. Because you’re connecting to an endpoint rather than to a specific instance, your session should be maintained.

To see the endpoints, select the auroraserverless cluster. The Connectivity & security tab shows the endpoint details for the reader and writer instances.

You can use the custom endpoints to isolate ad-hoc read traffic and route read-only workloads to your read replica. This isolates the write workload to your writer node. You can update your custom endpoint with the number of instances needed to match your workload. To view your cluster endpoints, follow the steps in Amazon Aurora connection management.
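The split between the writer and reader endpoints can be sketched in application code as follows. This is a minimal illustration rather than a prescribed pattern: the hostnames are placeholders, and `ClusterEndpoints` and `pick_endpoint` are hypothetical helper names, not part of any AWS SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterEndpoints:
    """Hostnames copied from the Connectivity & security tab (placeholders here)."""
    writer: str  # cluster endpoint, connects to the primary instance
    reader: str  # reader endpoint, load-balances across Aurora replicas


def pick_endpoint(endpoints: ClusterEndpoints, read_only: bool) -> str:
    """Send read-only work to the reader endpoint and everything else to the writer."""
    return endpoints.reader if read_only else endpoints.writer


# Hypothetical hostnames; substitute the values shown for your own cluster.
ENDPOINTS = ClusterEndpoints(
    writer="auroraserverless.cluster-example.us-east-1.rds.amazonaws.com",
    reader="auroraserverless.cluster-ro-example.us-east-1.rds.amazonaws.com",
)

reporting_host = pick_endpoint(ENDPOINTS, read_only=True)
orders_host = pick_endpoint(ENDPOINTS, read_only=False)
```

A custom endpoint for ad hoc read traffic could be added as a third field and selected the same way.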

Aurora Serverless v2 capacity and cost benefits

Your database load grows as the utilization of your application increases, so scaling your database helps your application remain responsive and avoid performance bottlenecks. Aurora Serverless continuously tracks CPU, memory, and network utilization. When it detects that resources are running low, the scaling operation adds capacity, in the form of ACUs, to the reader or writer. When demand drops, it scales the capacity back down.

The database capacity for Aurora Serverless is measured in Aurora Capacity Units (ACUs). Each ACU is a combination of approximately 2 gibibytes (GiB) of memory, corresponding CPU, and networking. The smallest Aurora Serverless v2 capacity that you can set is 0.5 ACUs (1 GiB), and capacity scales in increments as small as 0.5 ACUs. You can also stop an Aurora Serverless v2 cluster, just as you can stop an Aurora provisioned cluster, to avoid paying for DB instance hours.
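The ACU arithmetic above can be worked through with a few lines of Python. This is a rough sketch that uses only the figures stated in this post (about 2 GiB per ACU, a 0.5 ACU minimum, 0.5 ACU increments); the function names are our own.

```python
import math

ACU_MEMORY_GIB = 2.0  # approximate memory per ACU
MIN_ACU = 0.5         # smallest capacity you can set
ACU_STEP = 0.5        # smallest scaling increment


def acu_to_memory_gib(acu: float) -> float:
    """Approximate memory, in GiB, available at a given capacity."""
    return acu * ACU_MEMORY_GIB


def is_valid_capacity(acu: float) -> bool:
    """True if the value is at least 0.5 ACUs and a multiple of 0.5."""
    return acu >= MIN_ACU and (acu / ACU_STEP).is_integer()


def memory_gib_to_acu(memory_gib: float) -> float:
    """Smallest valid ACU setting that provides at least memory_gib of memory."""
    steps = math.ceil(memory_gib / ACU_MEMORY_GIB / ACU_STEP)
    return max(MIN_ACU, steps * ACU_STEP)
```

For example, a working set of roughly 5 GiB maps to a capacity of 2.5 ACUs under these assumptions.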

Aurora Serverless v2 reduces the administrative effort of planning and resizing DB instances in a cluster, which simplifies capacity management and reduces operational overhead.

Aurora Serverless v2 helps optimize cost because you don’t need to provision for peak capacity. You’re charged only for the ACUs your Aurora DB cluster uses, billed on a per-second basis while the database is active. Paying only for the database resources you consume makes it cost-effective and can lower total cost of ownership.
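To make the per-second billing model concrete, the following sketch compares a spiky day on Aurora Serverless v2 with the same day provisioned for peak. The price per ACU-hour is a made-up placeholder, not a published rate, and the usage profile is invented for illustration.

```python
# Hypothetical price; check current Aurora pricing for your Region.
HYPOTHETICAL_PRICE_PER_ACU_HOUR = 0.12


def acu_hours(usage):
    """Total ACU-hours for a list of (acu, seconds) intervals."""
    return sum(acu * seconds for acu, seconds in usage) / 3600


def estimate_cost(usage, price_per_acu_hour=HYPOTHETICAL_PRICE_PER_ACU_HOUR):
    """Compute cost only; storage and I/O charges are out of scope here."""
    return acu_hours(usage) * price_per_acu_hour


# Invented profile: 20 quiet hours at 0.5 ACUs, 4 busy hours at 8 ACUs.
spiky_day = [(0.5, 20 * 3600), (8.0, 4 * 3600)]
# The same day if capacity were held at the 8 ACU peak throughout.
peak_day = [(8.0, 24 * 3600)]

serverless_cost = estimate_cost(spiky_day)
peak_cost = estimate_cost(peak_day)
```

Under these assumed numbers, the spiky day consumes 42 ACU-hours compared with 192 ACU-hours for the peak-sized configuration.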

Monitoring Aurora Serverless v2 performance

As a best practice, monitor Aurora Serverless v2 performance using RDS Performance Insights. With the Performance Insights dashboard, you can visualize the database load on your Amazon Aurora cluster and filter it by waits, SQL statements, hosts, or users.

In addition to the standard Aurora CloudWatch metrics, Amazon CloudWatch provides metrics that are specific to Aurora Serverless v2. For example, you can view Aurora Serverless v2 DB instances in CloudWatch and monitor the capacity consumed by each DB instance with the ServerlessDatabaseCapacity (number of ACUs consumed) and ACUUtilization (percentage of ACUs consumed) metrics.
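The two capacity metrics can also be read programmatically. The following is a minimal sketch that builds the request for the CloudWatch `get_metric_statistics` API through boto3. The instance identifier and Region are placeholders. Treating ACUUtilization as the consumed ACUs divided by the cluster maximum is our reading of the metric descriptions above, so verify it against the CloudWatch documentation. `fetch_datapoints` needs boto3 and AWS credentials, so it is defined but not called.

```python
from datetime import datetime, timedelta, timezone


def capacity_metric_query(instance_id, metric_name="ServerlessDatabaseCapacity", hours=1):
    """Build keyword arguments for CloudWatch get_metric_statistics."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/RDS",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "DBInstanceIdentifier", "Value": instance_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 60,  # one datapoint per minute
        "Statistics": ["Average", "Maximum"],
    }


def acu_utilization_percent(capacity_acu, max_acu):
    """Consumed ACUs as a percentage of the cluster's maximum capacity (assumed formula)."""
    return 100.0 * capacity_acu / max_acu


def fetch_datapoints(query, region="us-east-1"):
    """Run the query; requires boto3 and credentials, so it is not called here."""
    import boto3

    cloudwatch = boto3.client("cloudwatch", region_name=region)
    return cloudwatch.get_metric_statistics(**query)["Datapoints"]


# Placeholder instance identifier.
query = capacity_metric_query("auroraserverless-instance-1")
```

Passing `metric_name="ACUUtilization"` builds the equivalent query for the percentage metric.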

To choose the right path for scaling, also consider your application’s data access pattern and how much flexibility you have to change the application. It’s a good practice to always review the Amazon RDS recommendations and best practices for Aurora.

You can scale your database within a Region through read replicas and across Regions through Aurora Global Database.

Read replicas

You can scale readers horizontally by adding up to 15 read replicas to your Aurora cluster. A read replica is a read-only copy of your database instance. It can live in the same AWS Region or, if you create a cross-region read replica, in a different Region. You can use a read replica to offload read requests or analytics traffic from the primary instance and scale read traffic by directing it to the read replica. This is suitable for read-intensive workloads like analytics or reporting.

You can also use read replicas to increase the availability of your database: promoting a read replica to a primary instance gives faster recovery in the event of a disaster. Aurora Serverless v2 DB instances have a promotion tier setting that determines whether a reader scales up to match the capacity of the writer DB instance or scales independently based on its own workload. Reader DB instances in tier 0 or 1 are kept at a minimum capacity at least as high as the writer DB instance. This comes at a cost, but it means they are ready to take over from the writer in case of a failover. Reader DB instances in tiers 2–15 don’t have the same constraint on their minimum capacity.
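The promotion tier rule can be expressed as a small function. This is a simplified model of the behavior described above, not the service’s actual scaling algorithm, and `reader_capacity_floor` is a hypothetical name.

```python
def reader_capacity_floor(promotion_tier: int, writer_acu: float, cluster_min_acu: float) -> float:
    """Lowest capacity a reader can scale down to under the tier rule.

    Tiers 0 and 1: never below the writer's current capacity.
    Tiers 2-15: only the cluster's configured minimum applies.
    """
    if not 0 <= promotion_tier <= 15:
        raise ValueError("promotion tier must be between 0 and 15")
    if promotion_tier <= 1:
        return max(writer_acu, cluster_min_acu)
    return cluster_min_acu


# Writer currently at 8 ACUs, cluster minimum set to 0.5 ACUs.
failover_ready_floor = reader_capacity_floor(1, writer_acu=8.0, cluster_min_acu=0.5)
independent_floor = reader_capacity_floor(2, writer_acu=8.0, cluster_min_acu=0.5)
```

In this example the tier 1 reader stays at 8 ACUs or above, while the tier 2 reader can drop to 0.5 ACUs when its own workload is idle.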

To add a read replica, complete the following steps:

On the Amazon RDS console, choose Databases in the navigation pane.

Select your cluster and on the Actions menu, choose Add reader.

Enter your source DB cluster identifier and the name of the new reader as the DB instance identifier. Choose Serverless v2 as the DB instance class and add the Region. Then choose Add reader.

After a few minutes, the auroraserverless-reader1 reader instance shows a status of Available.

As shown in the previous screenshot, you can add an Aurora Serverless v2 reader to an existing Aurora cluster or choose a provisioned reader. Existing clusters can therefore run a mixed configuration of provisioned and serverless readers.
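The console steps above have an API equivalent. The following sketch builds the parameters for the boto3 RDS `create_db_instance` call, using the `db.serverless` instance class for a Serverless v2 reader. The engine name, promotion tier, and Region are assumptions for illustration. `add_reader` requires boto3 and AWS credentials, so it is defined but not called.

```python
def reader_request(cluster_id, reader_id, engine="aurora-mysql", promotion_tier=1):
    """Build keyword arguments for rds.create_db_instance."""
    return {
        "DBClusterIdentifier": cluster_id,
        "DBInstanceIdentifier": reader_id,
        "DBInstanceClass": "db.serverless",  # Serverless v2 instance class
        "Engine": engine,                    # must match the cluster's engine
        "PromotionTier": promotion_tier,     # 0-1 keeps pace with the writer
    }


def add_reader(params, region="us-east-1"):
    """Submit the request; requires boto3 and credentials, so it is not called here."""
    import boto3

    rds = boto3.client("rds", region_name=region)
    return rds.create_db_instance(**params)


params = reader_request("auroraserverless", "auroraserverless-reader1")
```

Swapping `db.serverless` for a provisioned instance class would give the mixed configuration mentioned above.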

RDS Proxy

You can also use Amazon RDS Proxy to create an additional read-only endpoint to connect your application to read replicas. RDS Proxy is a fully managed, highly available database proxy for Amazon Relational Database Service (Amazon RDS) that makes applications more scalable, more resilient to database failures, and more secure. RDS Proxy sits between your application and your RDS database, pooling connections and reducing failover times.

You can enable RDS Proxy for most applications with no code changes, and you don’t need to provision or manage any additional infrastructure. However, you must point your application to the RDS Proxy endpoint instead of the DB endpoint.

RDS Proxy helps improve application availability during failure scenarios such as a database failover because it doesn’t rely on DNS propagation to perform failovers. RDS Proxy also eliminates reader and writer transition issues for Aurora cluster clients by actively monitoring each database instance in the Aurora database cluster to act quickly during failover on behalf of the clients.

To create an RDS proxy, complete the following steps:

On the Amazon RDS console, choose Proxies, then choose Create proxy on the right.

Enter a name for the proxy under Proxy identifier. Under Target group configuration, choose auroraserverless as your database, and choose an existing Secrets Manager secret or create a new one. Create a new IAM role, choose the subnets, scroll down the page, and choose Create proxy.

After a few minutes, the auroraserverlessproxy proxy shows a status of Available.
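The same proxy setup can be sketched with the boto3 RDS `create_db_proxy` and `register_db_proxy_targets` calls. All ARNs, subnet IDs, and the Region below are placeholders, and the engine family is an assumption that depends on your cluster. `create_proxy` requires boto3 and AWS credentials, so it is defined but not called.

```python
def proxy_request(name, secret_arn, role_arn, subnet_ids, engine_family="MYSQL"):
    """Build keyword arguments for rds.create_db_proxy."""
    return {
        "DBProxyName": name,
        "EngineFamily": engine_family,  # "MYSQL" or "POSTGRESQL"
        "Auth": [{"AuthScheme": "SECRETS", "SecretArn": secret_arn, "IAMAuth": "DISABLED"}],
        "RoleArn": role_arn,            # role that lets the proxy read the secret
        "VpcSubnetIds": list(subnet_ids),
    }


def target_request(name, cluster_id):
    """Build keyword arguments for rds.register_db_proxy_targets."""
    return {"DBProxyName": name, "DBClusterIdentifiers": [cluster_id]}


def create_proxy(proxy_params, target_params, region="us-east-1"):
    """Create the proxy and attach the cluster; not called in this sketch."""
    import boto3

    rds = boto3.client("rds", region_name=region)
    rds.create_db_proxy(**proxy_params)
    return rds.register_db_proxy_targets(**target_params)


# Placeholder ARNs and subnet IDs; substitute your own.
proxy_params = proxy_request(
    "auroraserverlessproxy",
    secret_arn="arn:aws:secretsmanager:us-east-1:111122223333:secret:example",
    role_arn="arn:aws:iam::111122223333:role/example-proxy-role",
    subnet_ids=["subnet-example1", "subnet-example2"],
)
target_params = target_request("auroraserverlessproxy", "auroraserverless")
```

After the proxy is available, the application connects to the proxy endpoint instead of the DB endpoint, as described earlier in this section.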

Aurora Global Database

You can also scale reads across Regions by using Aurora Global Database, which Aurora Serverless v2 supports. Aurora Global Database is designed for globally distributed applications: it allows a single Aurora database to span multiple Regions, which brings reads closer to your users. You can use Aurora Global Database for scaling reads as well as for cross-region disaster recovery. With Aurora Serverless v2, you can create additional read-only copies of your cluster in other Regions for disaster recovery and spread your read workload across multiple Regions.

To create an Aurora global database, refer to Getting started with Amazon Aurora global databases.

In the Amazon RDS navigation pane, select the cluster and on the Actions menu, choose Add AWS Region.

Enter the details for the DB instance identifier and Region. Under Instance configuration, choose Serverless, and under Connectivity, choose your VPC.

You can select the check box to turn on global write forwarding if you want to issue writes from the secondary Region as well.

When you turn this setting on, you can connect to a DB cluster in a secondary AWS Region and perform limited write operations. Aurora forwards the write requests to the DB cluster in the primary AWS Region. The primary cluster performs the write operations. Then Aurora replicates the results to all of the secondary clusters. Thus, although the secondary cluster is technically read-only, you can still use its endpoint with read-write applications.

Expand Additional configuration and set the DB instance identifier and DB cluster identifier. Scroll further and choose Add region to create the global cluster.

The global cluster, including the secondary DB cluster, is then created. Provisioning can take up to 30 minutes.
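The Add AWS Region flow above roughly corresponds to three boto3 RDS calls: `create_global_cluster` in the primary Region, then `create_db_cluster` and `create_db_instance` in the secondary Region. The following is a sketch under assumptions: every identifier, ARN, Region, engine version, and capacity range is a placeholder, and the secondary cluster’s engine and version must match your primary. `add_region` requires boto3 and AWS credentials, so it is defined but not called.

```python
def global_cluster_request(global_id, primary_cluster_arn):
    """Build keyword arguments for rds.create_global_cluster."""
    return {"GlobalClusterIdentifier": global_id, "SourceDBClusterIdentifier": primary_cluster_arn}


def secondary_cluster_request(global_id, cluster_id, engine, engine_version,
                              min_acu, max_acu, write_forwarding=False):
    """Build keyword arguments for rds.create_db_cluster in the secondary Region."""
    return {
        "DBClusterIdentifier": cluster_id,
        "GlobalClusterIdentifier": global_id,
        "Engine": engine,
        "EngineVersion": engine_version,
        "ServerlessV2ScalingConfiguration": {"MinCapacity": min_acu, "MaxCapacity": max_acu},
        "EnableGlobalWriteForwarding": write_forwarding,
    }


def add_region(global_params, cluster_params, reader_id, primary_region, secondary_region):
    """Create the global cluster and a Serverless v2 secondary; not called here."""
    import boto3

    boto3.client("rds", region_name=primary_region).create_global_cluster(**global_params)
    secondary = boto3.client("rds", region_name=secondary_region)
    secondary.create_db_cluster(**cluster_params)
    return secondary.create_db_instance(
        DBClusterIdentifier=cluster_params["DBClusterIdentifier"],
        DBInstanceIdentifier=reader_id,
        DBInstanceClass="db.serverless",
        Engine=cluster_params["Engine"],
    )


# Placeholder values throughout.
global_params = global_cluster_request(
    "auroraserverless-global",
    "arn:aws:rds:us-east-1:111122223333:cluster:auroraserverless",
)
cluster_params = secondary_cluster_request(
    "auroraserverless-global", "auroraserverless-secondary",
    engine="aurora-mysql", engine_version="8.0.mysql_aurora.3.04.0",
    min_acu=0.5, max_acu=16.0, write_forwarding=True,
)
```

Setting `write_forwarding=True` corresponds to selecting the global write forwarding check box in the console.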

Navigate to the secondary AWS Region, where you will also see the Aurora global database cluster and the secondary cluster.

The secondary DB cluster has only a reader endpoint provisioned and no cluster endpoint, because secondary DB clusters contain only readers. You can see that the writer instance is inactive.

In an Aurora global database, when the secondary database is not actively used, it scales down to its minimum database capacity. This keeps it cost-effective, because you pay only for that minimum capacity. After a failover, it scales up to handle the workload.

In the event of a failover, navigate to the secondary Region and remove the secondary Aurora Serverless cluster from the global database, which stops replication from the primary cluster. You can then promote the secondary cluster to become the primary.
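The detach step can be sketched with the boto3 RDS `remove_from_global_cluster` call, issued in the secondary Region. The identifiers, ARN, and Region are placeholders. The sketch covers only removing the cluster from the global database, and `detach_secondary` requires boto3 and AWS credentials, so it is defined but not called.

```python
def detach_request(global_id, secondary_cluster_arn):
    """Build keyword arguments for rds.remove_from_global_cluster."""
    return {
        "GlobalClusterIdentifier": global_id,
        "DbClusterIdentifier": secondary_cluster_arn,  # ARN of the cluster to detach
    }


def detach_secondary(params, secondary_region):
    """Detach the secondary cluster from the global database; not called here."""
    import boto3

    rds = boto3.client("rds", region_name=secondary_region)
    return rds.remove_from_global_cluster(**params)


# Placeholder identifiers.
detach_params = detach_request(
    "auroraserverless-global",
    "arn:aws:rds:us-west-2:111122223333:cluster:auroraserverless-secondary",
)
```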

Conclusion

In summary, you can scale Aurora Serverless up or out to meet the growing needs of your applications. In this post, we showed you the ideal use cases for Aurora Serverless v2. First, we walked through how to connect to an Aurora Serverless v2 cluster. Next, we discussed different scaling mechanisms: adding a read replica and using the Aurora Global Database feature. Aurora Serverless takes care of the heavy lifting in scaling your database so you can focus more on your applications. We welcome your feedback. Please share your experience and any questions in the comments.

About the authors

Marie Yap is a Principal Solutions Architect for Amazon Web Services based in Hawaii. In this role, she helps various organizations begin their journey to the cloud. She also specializes in analytics and modern data architectures.

Neha Gupta is a Principal Solutions Architect based out of SoCal. In her role, she accelerates customers’ migration to AWS. She specializes in databases and comes with 20+ years of database experience. Apart from work, she’s outdoorsy and loves to dance.
