Data science workflows pass through multiple stages as they progress from experimentation to production. A common approach involves separate accounts dedicated to different phases of the AI/ML workflow (experimentation, development, and production).

In addition, issues related to data access control may also mandate that workflows for different AI/ML applications be hosted on separate, isolated AWS accounts. Managing these stages and multiple accounts is complex and challenging.

When it comes to model deployment, however, it often makes sense to have a central repository of approved models to keep track of what is being used for production-grade inference. The Amazon SageMaker Model Registry is the natural choice for this kind of inference-oriented metadata store. In this post, we showcase how to set up such a centralized repository.

Overview

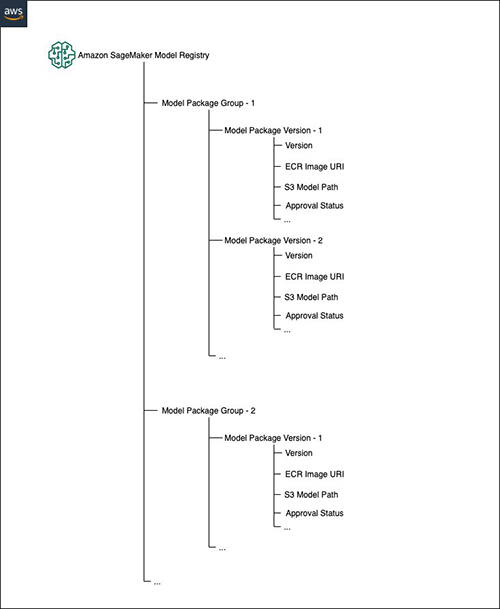

The workflow we address here is the one common to many data science projects. A data scientist in a dedicated data science account experiments on models, creates model artifacts on Amazon Simple Storage Service (Amazon S3), keeps track of the association between model artifacts and Amazon Elastic Container Registry (Amazon ECR) images using SageMaker model packages, and groups model versions into model package groups. The following diagram gives an overview of the structure of the SageMaker Model Registry.

A typical scenario has the following components:

One or more spoke environments are used for experimenting and for training ML models

Segregation between the spoke environments and a centralized environment is needed

We want to promote a machine learning (ML) model from the spokes to the centralized environment by creating a model package (version) in the centralized environment, and optionally moving the generated artifact model.tar.gz to an S3 bucket to serve as a centralized model store

Tracking and versioning of promoted ML models is done in the centralized environment from which, for example, deployment can be performed

This post illustrates how to build federated, hub-and-spoke model registries, where multiple spoke accounts use the SageMaker Model Registry from a hub account to register their model package groups and versions.

The following diagram illustrates two possible patterns: a push-based approach and a pull-based approach.

In the push-based approach, a user or role from a spoke account assumes a role in the central account. They then register the model packages or versions directly into the central registry. This is the simplest approach, both to set up and operate. However, you must give the spoke accounts write access (through the assumed role) to the central hub, which in some setups may not be possible or desirable.

In the pull-based approach, the spoke account registers model package groups or versions in the local SageMaker Model Registry. Amazon EventBridge notifies the hub account of the modification, which triggers a process that pulls the modification and replicates it to the hub’s registry. In this setup, spoke accounts don’t have any access to the central registry. Instead, the central account has read access to the spoke registries.

In the following sections, we illustrate example configurations for simple, two-account setups:

A data science (DS) account used for performing isolated experimentation using AWS services, such as SageMaker, the SageMaker Model Registry, Amazon S3, and Amazon ECR

A hub account used for storing the central model registry, and optionally also ML model binaries and Amazon ECR model images.

In real-life scenarios, multiple DS accounts would be associated with a single hub account.

Closely connected to the operation of a model registry is the topic of model lineage, that is, the ability to trace a deployed model all the way back to the exact experiment and training job or data that generated it. Amazon SageMaker ML Lineage Tracking creates and stores information about the steps of an ML workflow (from data preparation to model deployment) in the accounts where the different steps are originally run. As of this writing, exporting this information to different accounts is possible using dedicated model metadata. Model metadata can be exchanged through different mechanisms (for example, by emitting and forwarding a custom EventBridge event, or by writing to an Amazon DynamoDB table). A detailed description of these processes is beyond the scope of this post.

Access to model artifacts, Amazon ECR, and basic model registry permissions

Full cross-account operation of the model registry requires three main components:

Access from the hub account to model artifacts on Amazon S3 and to Amazon ECR images (either in the DS accounts or in a centralized Amazon S3 and Amazon ECR location)

Same-account operations on the model registry

Cross-account operations on the model registry

We can achieve the first component using resource policies. We provide examples of cross-account read-only policies for Amazon S3 and Amazon ECR in this section. In addition to these settings, the principals in the following policies must act using a role where the corresponding actions are allowed. For example, it’s not enough to have a resource policy that allows the DS account to read a bucket. The account must also do so from a role where Amazon S3 reads are allowed. This basic Amazon S3 and Amazon ECR configuration is not detailed here; links to the relevant documentation are provided at the end of this post.

Careful consideration must also be given to the location where model artifacts and Amazon ECR images are stored. If a central location is desired, it seems like a natural choice to let the hub account also serve as an artifact and image store. In this case, as part of the promotion process, model artifacts and Amazon ECR images must be copied from the DS accounts to the hub account. This is a normal copy operation, and can be done using both push-to-hub and pull-from-DS patterns, which aren’t detailed in this post. However, the attached code for the push-based pattern shows a complete example, including the code to handle the Amazon S3 copy of the artifacts. The example assumes that such a central store exists, that it coincides with the hub account, and that the necessary copy operations are in place.

In this context, versioning (of model images and of model artifacts) is also an important building block. It is required to improve the security profile of the setup and make sure that no accidental overwriting or deletion occurs. In real-life scenarios, the operation of the setups described here is fully automated, and steered by CI/CD pipelines that use unique build-ids to generate unique identifiers for all archived resources (unique object keys for Amazon S3, unique image tags for Amazon ECR). An additional level of robustness can be added by activating versioning on the relevant S3 buckets, as detailed in the resources provided at the end of this post.

Amazon S3 bucket policy

The following resource policy allows the DS account to get objects inside a defined S3 bucket in the hub account. As already mentioned, in this scenario, the hub account also serves as a model store, keeping a copy of the model artifacts. The case where the model store is separate from the hub account would have a similar configuration: the relevant bucket must allow read operations from the hub and DS accounts.
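As a minimal sketch, such a bucket policy could look like the following, expressed as a Python dictionary so that it can be serialized and attached with Boto3. The account ID and bucket name are hypothetical placeholders, and the statement grants only `s3:GetObject`; your setup may need additional actions.

```python
import json

# Hypothetical placeholders: replace with your own values.
DS_ACCOUNT_ID = "111111111111"
HUB_BUCKET_NAME = "example-hub-model-store"


def build_bucket_read_policy(ds_account_id, bucket_name):
    """Return a bucket policy that lets another account read objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "CrossAccountGetObject",
                "Effect": "Allow",
                # The account root delegates the decision to IAM in the DS account.
                "Principal": {"AWS": f"arn:aws:iam::{ds_account_id}:root"},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


bucket_policy = build_bucket_read_policy(DS_ACCOUNT_ID, HUB_BUCKET_NAME)
print(json.dumps(bucket_policy, indent=2))
```

The resulting document can be attached to the bucket from the hub account, for example with the Boto3 S3 client call `put_bucket_policy(Bucket=..., Policy=json.dumps(bucket_policy))`.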

Amazon ECR repository policy

The following resource policy allows the DS account to get images from a defined Amazon ECR repository in the hub account, because in this example the hub account also serves as the central Amazon ECR registry. If a separate central registry is desired, the configuration is similar: the hub or DS account needs to be given read access to the central registry. Optionally, you can also restrict access to specific resources, for example by enforcing a specific tagging pattern for cross-account images.
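A repository policy along these lines might be sketched as follows. The account ID is a hypothetical placeholder, and the three actions listed are the ones commonly needed to pull an image; treat the exact set as an assumption to check against your requirements.

```python
import json

# Hypothetical placeholder: replace with the ID of your DS account.
DS_ACCOUNT_ID = "111111111111"


def build_ecr_pull_policy(ds_account_id):
    """Return an ECR repository policy that lets another account pull images."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "CrossAccountPull",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{ds_account_id}:root"},
                # Repository policies carry no Resource element:
                # they apply to the repository they are attached to.
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:BatchGetImage",
                    "ecr:GetDownloadUrlForLayer",
                ],
            }
        ],
    }


ecr_policy = build_ecr_pull_policy(DS_ACCOUNT_ID)
print(json.dumps(ecr_policy, indent=2))
```

From the hub account, the policy can be attached with the Boto3 ECR client call `set_repository_policy(repositoryName=..., policyText=json.dumps(ecr_policy))`.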

IAM policy for SageMaker Model Registry

Operations on the model registry within an account are regulated by normal AWS Identity and Access Management (IAM) policies. The following example allows basic actions on the model registry:
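One possible shape for such an identity policy is sketched below. The Region and account ID are hypothetical placeholders, and the split between resource-scoped actions and the unscoped list action is an assumption that should be verified against the SageMaker service authorization reference.

```python
import json

# Hypothetical placeholders: replace with your own values.
REGION = "eu-west-1"
ACCOUNT_ID = "111111111111"


def build_registry_policy(region, account_id):
    """Return an IAM identity policy allowing basic model registry actions."""
    prefix = f"arn:aws:sagemaker:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ModelRegistryReadWrite",
                "Effect": "Allow",
                "Action": [
                    "sagemaker:CreateModelPackageGroup",
                    "sagemaker:DescribeModelPackageGroup",
                    "sagemaker:CreateModelPackage",
                    "sagemaker:DescribeModelPackage",
                    "sagemaker:UpdateModelPackage",
                    "sagemaker:ListModelPackages",
                ],
                "Resource": [
                    f"{prefix}:model-package-group/*",
                    f"{prefix}:model-package/*",
                ],
            },
            {
                # Assumption: listing groups is not scoped to a single resource.
                "Sid": "ModelRegistryListGroups",
                "Effect": "Allow",
                "Action": "sagemaker:ListModelPackageGroups",
                "Resource": "*",
            },
        ],
    }


registry_policy = build_registry_policy(REGION, ACCOUNT_ID)
print(json.dumps(registry_policy, indent=2))
```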

We now detail how to configure cross-account operations on the model registry.

SageMaker Model Registry configuration: Push-based approach

The following diagram shows the architecture of the push-based approach.

In this approach, users in the DS account can read from the hub account, thanks to resource-based policies. However, to gain write access to the central registry, the DS account must assume a role in the hub account with the appropriate permissions.

The minimal setup of this architecture requires the following:

Read access to the model artifacts on Amazon S3 and to the Amazon ECR images, using resource-based policies, as outlined in the previous section.

IAM policies in the hub account allowing it to write the objects into the chosen S3 bucket and create model packages into the SageMaker model package groups.

An IAM role in the hub account with the previous policies attached with a cross-account AssumeRole rule. The DS account assumes this role to write the model.tar.gz in the S3 bucket and create a model package. For example, this operation could be carried out by an AWS Lambda function.

A second IAM role, in the DS account, that can read the model.tar.gz artifact from the S3 bucket, and assume the role in the hub account mentioned above. This role is used for reads from the registry. For example, this could be used as the run role of a Lambda function.

Create a resource policy for model package groups

The following is an example policy to be attached to model package groups in the hub account. It allows read operations on a package group and on all package versions it contains.

You can’t associate this policy with the package group via the AWS Management Console. You need SDK or AWS Command Line Interface (AWS CLI) access. For example, the following code uses Python and Boto3:
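The following sketch builds a read-only resource policy for a model package group in the hub account and attaches it with the Boto3 SageMaker client method `put_model_package_group_policy`. The Region, account IDs, and group name are hypothetical placeholders; the client is passed in as an argument so that the helper can be exercised without AWS credentials.

```python
import json

# Hypothetical placeholders: replace with your own values.
REGION = "eu-west-1"
HUB_ACCOUNT_ID = "222222222222"
DS_ACCOUNT_ID = "111111111111"
GROUP_NAME = "example-model-group"


def build_group_read_policy(region, hub_account_id, ds_account_id, group_name):
    """Return a resource policy granting read access to a group and its versions."""
    prefix = f"arn:aws:sagemaker:{region}:{hub_account_id}"
    principal = {"AWS": f"arn:aws:iam::{ds_account_id}:root"}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadModelPackageGroup",
                "Effect": "Allow",
                "Principal": principal,
                "Action": ["sagemaker:DescribeModelPackageGroup"],
                "Resource": f"{prefix}:model-package-group/{group_name}",
            },
            {
                "Sid": "ReadModelPackageVersions",
                "Effect": "Allow",
                "Principal": principal,
                "Action": [
                    "sagemaker:DescribeModelPackage",
                    "sagemaker:ListModelPackages",
                ],
                "Resource": f"{prefix}:model-package/{group_name}/*",
            },
        ],
    }


def attach_group_policy(sm_client, group_name, policy):
    """Attach the policy to the model package group through the SageMaker API."""
    sm_client.put_model_package_group_policy(
        ModelPackageGroupName=group_name,
        ResourcePolicy=json.dumps(policy),
    )


group_policy = build_group_read_policy(REGION, HUB_ACCOUNT_ID, DS_ACCOUNT_ID, GROUP_NAME)
# With credentials for the hub account:
#   import boto3
#   attach_group_policy(boto3.client("sagemaker"), GROUP_NAME, group_policy)
```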

Cross-account policy for the DS account to the Hub account

This policy allows the users and services in the DS account to assume the relevant role in the hub account. For example, the following policy allows a Lambda execution role in the DS account to assume the role in the hub account:
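A sketch of the two policy documents involved is shown below: an identity policy for the Lambda execution role in the DS account, and the matching trust policy on the hub role. Both role ARNs are hypothetical placeholders.

```python
import json

# Hypothetical placeholders: replace with your own role ARNs.
HUB_ROLE_ARN = "arn:aws:iam::222222222222:role/example-hub-registry-writer"
DS_LAMBDA_ROLE_ARN = "arn:aws:iam::111111111111:role/example-ds-lambda-role"


def build_assume_role_policy(hub_role_arn):
    """Identity policy for the DS Lambda role: permission to assume the hub role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": hub_role_arn,
            }
        ],
    }


def build_trust_policy(ds_role_arn):
    """Trust policy for the hub role: names the DS Lambda role as trusted principal."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": ds_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(build_assume_role_policy(HUB_ROLE_ARN), indent=2))
print(json.dumps(build_trust_policy(DS_LAMBDA_ROLE_ARN), indent=2))
```

Both sides are needed: the identity policy in the DS account permits the call, and the trust policy in the hub account accepts it.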

Example workflow

Now that all permissions are configured, we can illustrate the workflow using a Lambda function that assumes the hub account role previously defined, copies the artifact model.tar.gz created into the hub account S3 bucket, and creates the model package linked to the previously copied artifact.

In the following code snippets, we illustrate how to create a model package in the target account after assuming the relevant role. The complete code needed for operation (including manipulation of Amazon S3 and Amazon ECR assets) is attached to this post.

Copy the artifact

To maintain a centralized approach in the hub account, the first operation described is copying the artifact to the centralized S3 bucket.

The method requires as input the DS source bucket name, the hub target bucket name, and the path to the model.tar.gz. After you copy the artifact into the target bucket, it returns the new Amazon S3 path that is used from the model package. As discussed earlier, you need to run this code from a role that has read (write) access to the source (destination) Amazon S3 location. You set this up, for example, in the execution role of a Lambda function, whose details are beyond the scope of this document. See the following code:
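A minimal sketch of such a method follows. The function name is illustrative, the S3 client is passed in as an argument (an assumption made here so the helper is easy to test), and the object key is kept identical in the source and target buckets.

```python
def copy_artifact(s3_client, ds_bucket_name, hub_bucket_name, model_path):
    """Copy model.tar.gz from the DS bucket to the hub bucket.

    Returns the S3 URI of the copy, to be used in the model package.
    """
    key = model_path.lstrip("/")  # S3 keys do not start with a slash
    # Managed copy: Boto3 switches to multipart copy for large objects.
    s3_client.copy(
        CopySource={"Bucket": ds_bucket_name, "Key": key},
        Bucket=hub_bucket_name,
        Key=key,
    )
    return f"s3://{hub_bucket_name}/{key}"


# With a role that can read the source and write the destination:
#   import boto3
#   model_url = copy_artifact(
#       boto3.client("s3"), "example-ds-bucket", "example-hub-bucket",
#       "models/build-42/model.tar.gz",
#   )
```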

Create a model package

This method registers the model version in a model package group that you already created in the hub account. The method requires as input a Boto3 SageMaker client instantiated after assuming the role in the hub account, the Amazon ECR image URI to use in the model package, the model URL created after copying the artifact in the target S3 bucket, the model package group name used for creating the new model package version, and the approval status to be assigned to the new version created:
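The registration step can be sketched with the Boto3 SageMaker client method `create_model_package`. The function name, the default approval status, and the `text/csv` content types are assumptions made for illustration; adapt them to your model.

```python
VALID_STATUSES = ("Approved", "Rejected", "PendingManualApproval")


def register_model_version(
    sm_client,
    image_uri,
    model_url,
    model_package_group_name,
    approval_status="PendingManualApproval",
):
    """Create a new model package version in an existing model package group.

    sm_client is expected to be a SageMaker client created with the
    credentials of the assumed hub role.
    """
    if approval_status not in VALID_STATUSES:
        raise ValueError(f"Unknown approval status: {approval_status}")
    response = sm_client.create_model_package(
        ModelPackageGroupName=model_package_group_name,
        InferenceSpecification={
            "Containers": [{"Image": image_uri, "ModelDataUrl": model_url}],
            # Assumed content types, for illustration only.
            "SupportedContentTypes": ["text/csv"],
            "SupportedResponseMimeTypes": ["text/csv"],
        },
        ModelApprovalStatus=approval_status,
    )
    return response["ModelPackageArn"]
```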

A Lambda handler orchestrates all the actions needed to operate the central registry. The mandatory parameters in this example are as follows:

image_uri – The Amazon ECR image URI used in the model package

model_path – The source path of the artifact in the S3 bucket

model_package_group_name – The model package group name used for creating the new model package version

ds_bucket_name – The name of the source S3 bucket

hub_bucket_name – The name of the target S3 bucket

approval_status – The status to assign to the model package version

See the following code:
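A self-contained sketch of such a handler is shown below. It assumes that the hub role ARN is supplied through a hypothetical `HUB_ROLE_ARN` environment variable, that the S3 copy runs under the Lambda execution role, and that only the SageMaker client uses the assumed hub role. The content types are placeholders.

```python
import os

REQUIRED_KEYS = (
    "image_uri", "model_path", "model_package_group_name",
    "ds_bucket_name", "hub_bucket_name", "approval_status",
)


def assume_hub_role(sts_client, role_arn, session_name="model-promotion"):
    """Assume the hub role and return keyword arguments for boto3.client()."""
    creds = sts_client.assume_role(
        RoleArn=role_arn, RoleSessionName=session_name
    )["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }


def promote_model(event, s3_client, sm_client):
    """Copy the artifact to the hub bucket, then register a model version."""
    missing = [key for key in REQUIRED_KEYS if key not in event]
    if missing:
        raise ValueError(f"Missing parameters: {missing}")
    key = event["model_path"].lstrip("/")
    s3_client.copy(
        CopySource={"Bucket": event["ds_bucket_name"], "Key": key},
        Bucket=event["hub_bucket_name"],
        Key=key,
    )
    model_url = f"s3://{event['hub_bucket_name']}/{key}"
    response = sm_client.create_model_package(
        ModelPackageGroupName=event["model_package_group_name"],
        InferenceSpecification={
            "Containers": [{"Image": event["image_uri"], "ModelDataUrl": model_url}],
            "SupportedContentTypes": ["text/csv"],  # assumed
            "SupportedResponseMimeTypes": ["text/csv"],  # assumed
        },
        ModelApprovalStatus=event["approval_status"],
    )
    return {"model_url": model_url, "model_package_arn": response["ModelPackageArn"]}


def lambda_handler(event, context):
    import boto3  # imported here so the helpers above can be tested without it

    hub_credentials = assume_hub_role(
        boto3.client("sts"), os.environ["HUB_ROLE_ARN"]
    )
    s3_client = boto3.client("s3")  # Lambda execution role
    sm_client = boto3.client("sagemaker", **hub_credentials)  # hub role
    return promote_model(event, s3_client, sm_client)
```

Keeping the orchestration in `promote_model`, separate from client creation, is a design choice made for this sketch: it allows the logic to be unit tested with stub clients.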

SageMaker Model Registry configuration: Pull-based approach

The following diagram illustrates the architecture for the pull-based approach.

This approach is better suited for cases where write access to the account hosting the central registry is restricted. The preceding diagram shows a minimal setup, with a hub and just one spoke.

A typical workflow is as follows:

A data scientist is working on a dedicated account. The local model registry is used to keep track of model packages and deployment.

Each time a model package is created, an event “SageMaker Model Package State Change” is emitted.

The EventBridge rule in the DS account forwards the event to the hub account, where it triggers actions. In this example, a Lambda function with cross-account read access to the DS model registry can retrieve the needed information and copy it to the central registry.

The minimal setup of this architecture requires the following:

Model package groups in the DS account need to have a resource policy, allowing read access from the Lambda execution role in the hub account.

The EventBridge rule in the DS account must be configured to forward relevant events to the hub account.

The hub account must allow the DS EventBridge rule to send events over.

Access to the S3 bucket storing the model artifacts, as well as to Amazon ECR for model images, must be granted to a role in the hub account. These configurations follow the lines of what we outlined in the first section, and are not further elaborated on here.

If the hub account is also in charge of deployment in addition to simple bookkeeping, read access to the model artifacts on Amazon S3 and to the model images on Amazon ECR must also be set up. This can be done by either archiving resources to the hub account or with read-only cross-account access, as already outlined earlier in this post.

Create a resource policy for model package groups

The following is an example policy to attach to model package groups in the DS account. It allows read operations on a package group and on all package versions it contains:

You can’t associate this policy to the package group via the console. The SDK or AWS CLI is required. For example, the following code uses Python and Boto3:
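For the pull-based setup, a compact sketch might look like the following. Here the trusted principal is the Lambda execution role in the hub account rather than a whole account. The Region, account ID, group name, and role ARN are hypothetical placeholders.

```python
import json

# Hypothetical placeholders: replace with your own values.
REGION = "eu-west-1"
DS_ACCOUNT_ID = "111111111111"
GROUP_NAME = "example-model-group"
HUB_LAMBDA_ROLE_ARN = "arn:aws:iam::222222222222:role/example-hub-lambda-role"


def build_pull_policy(region, ds_account_id, group_name, hub_role_arn):
    """Resource policy letting the hub Lambda role read a DS model package group."""
    prefix = f"arn:aws:sagemaker:{region}:{ds_account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "HubReadsGroup",
                "Effect": "Allow",
                "Principal": {"AWS": hub_role_arn},
                "Action": ["sagemaker:DescribeModelPackageGroup"],
                "Resource": f"{prefix}:model-package-group/{group_name}",
            },
            {
                "Sid": "HubReadsVersions",
                "Effect": "Allow",
                "Principal": {"AWS": hub_role_arn},
                "Action": ["sagemaker:DescribeModelPackage", "sagemaker:ListModelPackages"],
                "Resource": f"{prefix}:model-package/{group_name}/*",
            },
        ],
    }


pull_policy = build_pull_policy(REGION, DS_ACCOUNT_ID, GROUP_NAME, HUB_LAMBDA_ROLE_ARN)
# With credentials for the DS account:
#   import boto3
#   boto3.client("sagemaker").put_model_package_group_policy(
#       ModelPackageGroupName=GROUP_NAME, ResourcePolicy=json.dumps(pull_policy))
```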

Configure an EventBridge rule in the DS account

In the DS account, you must configure a rule for EventBridge:

On the EventBridge console, choose Rules.

Choose the event bus you want to add the rule to (for example, the default bus).

Choose Create rule.

Select Event Pattern, and navigate through the drop-down menus to choose Predefined pattern, AWS, SageMaker, and SageMaker Model Package State Change.

You can refine the event pattern as you like. For example, to forward only events related to approved models within a specific package group, use the following code:

In the Target section, choose Event Bus in another AWS account.

Enter the ARN of the event bus in the hub account that receives the events.

Finish creating the rule.

In the hub account, open the EventBridge console, choose the event bus that receives the events from the DS account, and edit the Permissions field so that it contains the following code:
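Two pieces of configuration from the preceding steps can be sketched as Python dictionaries: a refined event pattern for the rule in the DS account, and a permissions policy for the receiving event bus in the hub account. The package group name, account IDs, Region, and bus name are hypothetical placeholders.

```python
import json

# Hypothetical placeholders: replace with your own values.
DS_ACCOUNT_ID = "111111111111"
HUB_ACCOUNT_ID = "222222222222"
REGION = "eu-west-1"
PACKAGE_GROUP_NAME = "example-model-group"

# Event pattern for the DS rule: forward only approved versions of one group.
event_pattern = {
    "source": ["aws.sagemaker"],
    "detail-type": ["SageMaker Model Package State Change"],
    "detail": {
        "ModelPackageGroupName": [PACKAGE_GROUP_NAME],
        "ModelApprovalStatus": ["Approved"],
    },
}

# Permissions for the hub event bus: let the DS account put events on it.
event_bus_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowDsAccountPutEvents",
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{DS_ACCOUNT_ID}:root"},
            "Action": "events:PutEvents",
            "Resource": f"arn:aws:events:{REGION}:{HUB_ACCOUNT_ID}:event-bus/default",
        }
    ],
}

print(json.dumps(event_pattern, indent=2))
print(json.dumps(event_bus_policy, indent=2))
```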

Configure an EventBridge rule in the hub account

Now events can flow from the DS account to the hub account. You must configure the hub account to properly handle the events:

On the EventBridge console, choose Rules.

Choose Create rule.

Similarly to the previous section, create a rule for the relevant event type.

Connect it to the appropriate target—in this case, a Lambda function.

In the following example code, we process the event, extract the model package ARN, and retrieve its details. The event from EventBridge already contains all the information from the model package in the DS account. In principle, the resource policy for the model package group isn’t even needed when the copy operation is triggered by EventBridge.
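A minimal sketch of such a function is shown below. It assumes that a model package group with the same name already exists in the hub account, and that the artifact and image locations referenced by the DS model package are readable from the hub account. The function names are illustrative.

```python
def replicate_model_package(event, sm_client, hub_group_name=None):
    """Replicate the model package referenced by an EventBridge event.

    Reads the package details from the DS registry (cross-account, by ARN)
    and creates a matching version in the hub registry.
    """
    package_arn = event["detail"]["ModelPackageArn"]
    details = sm_client.describe_model_package(ModelPackageName=package_arn)
    source_spec = details["InferenceSpecification"]

    # Keep only the container fields needed to reference image and artifact.
    containers = [
        {k: c[k] for k in ("Image", "ModelDataUrl") if k in c}
        for c in source_spec["Containers"]
    ]
    spec = {"Containers": containers}
    for optional in ("SupportedContentTypes", "SupportedResponseMimeTypes"):
        if optional in source_spec:
            spec[optional] = source_spec[optional]

    response = sm_client.create_model_package(
        ModelPackageGroupName=hub_group_name or details["ModelPackageGroupName"],
        InferenceSpecification=spec,
        ModelApprovalStatus=details.get("ModelApprovalStatus", "PendingManualApproval"),
    )
    return response["ModelPackageArn"]


def lambda_handler(event, context):
    import boto3  # imported here so the helper above can be tested without it

    # The hub Lambda execution role needs read access to the DS registry
    # and write access to the hub registry.
    return replicate_model_package(event, boto3.client("sagemaker"))
```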

Conclusion

SageMaker model registries are a native AWS tool to track model versions and lineage. The implementation overhead is minimal, in particular when compared with a fully custom metadata store, and they integrate with the rest of the tools within SageMaker. As we demonstrated in this post, even in complex multi-account setups with strict segregation between accounts, model registries are a viable solution to track operations of AI/ML workflows.

References

To learn more, refer to the following resources:

Amazon S3 versioning – See the following:

Adding objects to versioning-enabled buckets

Retrieving object versions from a versioning-enabled bucket

IAM role configuration for Amazon S3 read and write – User policy examples

IAM role configuration for Amazon ECR pull – Repository policy examples

IAM AssumeRole – Granting a user permissions to switch roles

SageMaker Model Registry – Register and Deploy Models with Model Registry

SageMaker ML lineage – Amazon SageMaker ML Lineage Tracking

Amazon EventBridge – See the following:

Creating Amazon EventBridge rules that react to events

Sending and receiving Amazon EventBridge events between AWS accounts

About the Authors

Andrea Di Simone is a Data Scientist in the Professional Services team based in Munich, Germany. He helps customers to develop their AI/ML products and workflows, leveraging AWS tools. He enjoys reading, classical music and hiking.

Bruno Pistone is a Machine Learning Engineer for AWS based in Milan. He works with enterprise customers, helping them productionize Machine Learning solutions and follow best practices using AWS AI/ML services. His fields of expertise are Machine Learning Industrialization and MLOps. He enjoys spending time with his friends and exploring new places around Milan, as well as traveling to new destinations.

Matteo Calabrese is a Data and ML engineer in the Professional Services team based in Milan (Italy). He works with large enterprises on AI/ML projects, helping them propose, deliver, scale, and optimize ML solutions. His goal is to shorten their time to value and accelerate business outcomes by providing AWS best practices. In his spare time, he enjoys hiking and traveling.

Giuseppe Angelo Porcelli is a Principal Machine Learning Specialist Solutions Architect for Amazon Web Services. With several years of software engineering and an ML background, he works with customers of any size to deeply understand their business and technical needs and design AI and Machine Learning solutions that make the best use of the AWS Cloud and the Amazon Machine Learning stack. He has worked on projects in different domains, including MLOps, Computer Vision, and NLP, involving a broad set of AWS services. In his free time, Giuseppe enjoys playing football.
