Amazon MemoryDB for Redis and Amazon ElastiCache for Redis are in-memory data stores. While ElastiCache is commonly used as a cache, MemoryDB is a durable database designed for applications with high performance requirements.

Customers love Redis as an in-memory data engine. As data used and accessed grows exponentially, making the most of the memory available becomes increasingly important. In this post, I provide multiple strategies with code snippets to help you reduce your application’s memory consumption when using MemoryDB and ElastiCache for Redis. This helps to optimize costs and allows you to fit more data within your instances in your existing cluster.

Before going into these optimizations, remember ElastiCache for Redis supports data tiering, which automatically places data across memory and local, high-performance solid state drives (SSD). Data tiering is ideal for applications that access up to 20% of their datasets regularly. ElastiCache for Redis provides a convenient way to scale your clusters at a lower cost to up to a petabyte of data. It can enable over 60% savings per GB of capacity while having minimal performance impact for workloads that access a subset of their data regularly. ElastiCache for Redis also supports auto scaling to automatically adjust your cluster horizontally by adding or removing shards or replica nodes.

Prerequisites

For this walkthrough, you need the following prerequisites:

An AWS account (you can use the AWS Free Tier)

An ElastiCache for Redis or MemoryDB cluster (a single instance is enough)

Your local machine or a remote environment such as AWS Cloud9 with connectivity to your cluster

The redis-cli client to connect remotely to your instance

Python 3.5 or newer with the following libraries

To run some of the examples in this post, you need the following Python libraries:

To know how much memory is used, redis-cli has the memory usage command:

To connect to our Redis cluster from Python, we use redis-py-cluster. In our examples, we ignore the creation of the RedisCluster object for simplicity. To check that you have connectivity to your Redis instance, you can use the following code:

If you’re performing multiple operations, consider using pipelines. Pipelines allow you to batch multiple operations and save multiple network trips for higher performance. See the following code:

To check the size of an item before inserting them in Redis, we use the following code:

To simulate more realistic data, we use the library Faker:

Basic optimizations

Before going to advanced optimizations, we apply the basic optimizations. These are easy manipulations so we don’t provide the code in this post.

In our example, we assume we have a big list of key-value pairs. As the keys, we use the IPs of hypothetical visitors to our website. As the values, we have a counter of how many visits, their reported name, and their recent actions:

Use the following code to insert these programmatically:

Reduce field names

Redis field names consume memory each time they’re used, so you can save space by keeping names as short as possible. In our previous example, instead of visits as the field name, we may want to use v. Similarly, we can use n instead of name, and r instead of recent_actions. We can also shorten the key name to i instead of IP.

Within the fields themselves, you can also reduce common words with symbols. We can switch recent actions to the initial character (v instead of visit).

The following code is how our previous example looks after this simplification:

This results in 23% memory savings in our specific example.

Use position to indicate the data type

If all fields exist, we can use a list instead of a hash, and the position tells us what the field name is. This allows us to remove the field names altogether. See the following code:

This results in an additional 14% memory savings in our case.

Serialize complex types

There are different ways to serialize complex objects, which allows you to store these in an efficient manner. Most languages have their own serialization libraries (pickle in Python, Serializable in Java, and so on). Some libraries work across languages and are often more space efficient, such as ProtoBuff or MsgPack.

The following code shows an example using MsgPack:

In this case, the original object is 73 bytes, whereas the serialized object is 49 bytes (33% space reduction).

To recover the value, MsgPack makes it very convenient, returning the Python object ready to use:

Redis-specific optimizations

To provide some of its functionalities like fast access and TTL, Redis may need to use additional space in memory besides the space the data itself needs. The next two sections help us reduce that additional overhead to a minimum. Then we show some probabilistic structures that can further help reduce the memory.

Move from strings or lists to hashes

In our initial example, we have many small strings stored as independent lists. Each entry on Redis is between 60 (without expire) or 100 bytes (with expire) of additional space, which is meaningful if we store several million items (100 million entries x 100 bytes = 10 GBs of overhead). In the first example, these are stored as a Redis list:

In the resulting optimization, all fields are stored in a single hash:

This allows us to save 90 bytes per entry. In the preceding example, given each entry is relatively small—representing 40% less memory usage.

Convert to smaller hashes (using ziplist)

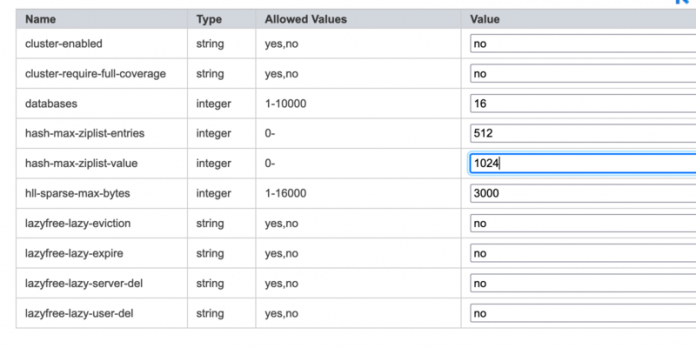

Hashes in Redis can be encoded in memory either as a hash table or ziplist (listpacks in Redis 7). The decision of which to use is based on two parameters in your parameter group:

hash-max-ziplist-value (by default 64)

hash-max-ziplist-entries (by default 512)

If any value for a key exceeds the two configurations, it’s stored automatically as a hash table instead of a ziplist. A hash table uses twice as much memory as a ziplist, but it can be faster for big hashes. The idea is to use a ziplist while keeping the number of items in each hash to a reasonable number.

To store items efficiently in ziplists, we migrate our single big hash into many similarly sized small hashes:

To achieve this space efficiency, we use the following code:

To read the values back, we use the following code:

The following screenshot shows an example of how to edit a parameter group on the Amazon ElastiCache console for Redis.

To make sure you’re actually using a ziplist, you can use the object encoding command:

Memory usage should also be about 40% less than if we stored as a hash list. If you don’t see the type as ziplist, check the two parameters and ensure both conditions are satisfied.

Use probabilistic structures

If you need to count the number of items in a set, but don’t need to be exact, a HyperLogLog is a natively supported probabilistic data structure for counting unique items in a set. The HyperLogLog algorithm is able to estimate cardinalities of over 109 with a typical accuracy (standard error) of 2%, using 1.5 kB of memory.

Bloom filters are also a highly space- and time-efficient probabilistic data structure. Although not natively supported, it can be implemented. Bloom filters are used to test whether an element is a member of a set with a small chance of false positives (but no false negatives). It allows you to store items in less than 10 bits for a 1% false positive probability.

If you need to simply check for equivalence with minimal chances of collision, you can store a hash of the content. For example, you can use a fast, non-cryptographic hash function such as xxHash:

There is no way to recover the original value (unless you stored it elsewhere), but we can use the hashed version to check for existence:

These techniques are very effective (often reducing the memory required by 99%) but have some trade-offs such as the possibility of false positives.

Data compression

One of the easiest ways to reduce memory consumption is to reduce the size of keys and values. This isn’t specific to Redis but applies particularly well to it.

In general, compression for Redis (and for semistructured and structured databases) is very different than file compression. The reason is because in Redis, we’re usually storing short fields.

Compression of long data

To compress long data, you can use popular compression algorithms such as Gzip, LZO, or Snappy. In this example, we use LZ4, which has a good balance between speed and compression ratio:

To recover the original value, we can simply pass the compressed bytes to the LZ4 library:

In this example, the compressed string is 20% smaller than the compressed one. Note that for small strings (more often stored in a database), this is unlikely to produce good results. If your data is very big, you may consider storing it in other places, such as Amazon Simple Storage Service (Amazon S3).

As mentioned earlier, for small strings (something more common in Redis), this doesn’t produce great results. We could try to compress groups of values (for example a shard of values), but it adds complexity to our code, so the next options are more likely to help us.

Custom base encoding

One option to reduce the space used is to make use of the limited range of characters of a particular data type. For example, our application may enforce that user names can only contain lowercase letters (a-z) and numbers (0–9). If this is the case, base36 encoding is more efficient than simply storing these as strings.

You can also create your own base depending on your own alphabet. In this example, we assume we’re recording all the moves of a player of the game Rock, Paper, Scissors. To encode it efficiently, we store each move as a single character string. In our example, we represent rock as 0, paper as 1, and scissors as 2. We can then compress it further by storing it as an integer that uses all potential values:

Although the original player_moves string would take 20 bytes, the compressed integer can be stored in just 4 bytes (75% space reduction). To decompress it is slightly more complex:

Using base-36 can provide around a 64% reduction in space used. There are some commonly used encodings and some easy-to-use libraries.

Compression of short strings with a domain dictionary

Most compression algorithms (such as Gzip, LZ4, Snappy) are not very effective to compress small strings (such a person name or a URL). This is because they learn about the data as they are compressing it.

An effective technique is to use a pre-trained dictionary. In the following example, we use Zstandard to train a dictionary that we use to compress and decompress a job name:

After you have the job_dictionary, compressing any random job is straightforward:

To decompress is also straightforward, but you need the original dictionary:

In this simple example, the original uncompressed job was 30 bytes, whereas the compressed job was 21 bytes (30% space savings).

Other encoding techniques for short fields

Short string compression is common for file compression, but you can use other techniques depending on the use case. You can get inspiration from Amazon Redshift compression encodings:

Byte-dictionary – If the cardinality of your data is small, you can encode an index for a symbol instead of the full string. An example of this is the country of a customer. Because there are a small number of countries, you can save a lot of space by storing only the index of the country.

Delta – Things like time of purchase might be stored using a full timestamp by the difference, which will be smaller.

Mostly – Mostly encodings are useful when the data type for a column is larger than most of the stored values require.

Run length – Run length encoding replaces a value that is repeated consecutively with a token that consists of the value and a count of the number of consecutive occurrences (the length of the run).

Text255 and text32k – Text255 and text32k encodings are useful for compressing string fields in which the same words recur often.

In general, short strings compression benefit from structural information constraints (for example, an IPv4 is composed of four numbers between 0–255), and distribution information (for example, many consumer emails have a free domain like gmail.com, yahoo.com, or hotmail.com).

Decision table

The following table presents a summary and the necessary requirements to apply each of the techniques.

Decision Criteria

Technique

Memory Savings for Our Example

Is up to 80% of your dataset accessed infrequently?

Automatic optimization features, data tiering

60%

Does your traffic demand change overtime?

Automatic optimization features, auto scaling

Depends on the node size

Are the same fields always present?

Use position to indicate the data type

14%

Are you storing complex types as binary data?

Serialization of complex types

33%

Do you have many small strings or lists?

Move from strings or lists to hashes

40%

Do you have many small strings or lists?

Move to smaller hashes

50%

Can you get by with a probabilistic approach?

Use probabilistic structures

99%

Are you storing long objects?

Use compression of long data

20%

Do you have short types with limited alphabets?

Use custom base encoding

75%

Do you have other short string types?

Use a pretrained dictionary

30%

Do you have other short strings that need compression?

Use other encoding techniques for short fields

30-80%

Conclusion

In this post, I showed you multiple ways to optimize the memory consumption of your Redis instances. This can have a positive effect on cost and performance. With any optimization, there are trade-offs between space and time used for reading and writing. You may lose some native Redis capabilities (such as eviction policies) depending on how you store the data. Redis also has a good memory optimization guide with some overlap with this post.

How do you efficiently store data in your Redis-compatible database? Let me know in the comments section.

About the Author

Roger Sindreu is a Solutions Architect with Amazon Web Services. He was a database engineer and has led engineering teams for the last 20 years. His interests are about AI/ML, Databases, FSI and everything related to AWS.

Read MoreAWS Database Blog