Amazon ElastiCache for Redis is an in-memory data store, delivering real-time, cost-optimized performance for modern applications. It is a fully managed service that scales to millions of operations per second with microsecond response time.

Redis is one of the most loved NoSQL key-value stores, and is known for its great performance. Our customers choose to run Redis with ElastiCache for caching to help reduce database costs and help improve performance. As they scale their applications, they have a growing need for high throughput with a high number of concurrent connections. In March 2019, we introduced enhanced I/O, which delivered up to an 83% increase in throughput and up to a 47% reduction in latency per node. In November 2021, we improved the throughput of TLS-enabled clusters by offloading encryption to non-Redis engine threads. In this post, we are excited to announce enhanced I/O multiplexing, which further improves throughput and latency, without requiring you to make additional changes to your applications.

Enhanced I/O multiplexing in ElastiCache for Redis 7

ElastiCache for Redis 7 and above now includes enhanced I/O multiplexing, which delivers significant improvements to throughput and latency at scale. Enhanced I/O multiplexing is ideal for throughput-bound workloads with multiple client connections, and its benefits scale with the level of workload concurrency. As an example, when using an r6g.xlarge node and running 5,200 concurrent clients, you can achieve up to 72% increased throughput (read and write operations per second) and up to 71% decreased P99 latency, compared with ElastiCache for Redis 6. For these types of workloads, Redis I/O processing can become a limiting factor in the ability to scale. With enhanced I/O multiplexing, each dedicated network I/O thread pipelines commands from multiple clients into the Redis engine, taking advantage of Redis’s ability to efficiently process commands in batches. Enhanced I/O multiplexing is automatically available when using Redis 7, in all AWS Regions, and at no additional cost. See the ElastiCache user guide for more information. Once you’re using ElastiCache for Redis version 7, no application or service configuration changes are required to use enhanced I/O multiplexing.

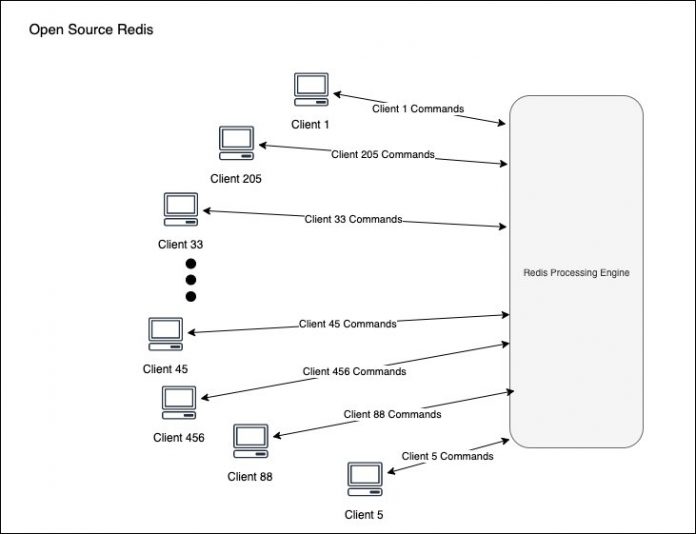

Let’s drill down a bit more and examine how it works. The following diagram shows a high-level view of how open-source Redis handles client I/O, up to Redis 6.

The Redis engine serves multiple clients simultaneously. The engine is single threaded, and its processing power is shared between connected clients. For each client, the engine handles the connection, parses the request, and runs the commands. In this model, the other available CPU cores are not utilized.

The next diagram shows ElastiCache for Redis with enhanced I/O (released for Redis 5.0.3), where available CPUs are used to offload client connection handling from the Redis engine. Open-source Redis 6 took a comparable approach with a feature called I/O threads.

With enhanced I/O, the engine's processing power is focused on running commands rather than handling network connections. As a result, you can get up to an 83% increase in throughput and up to a 47% reduction in latency per node. With multiplexing, instead of opening a channel for each client (as can be seen in the preceding diagram), each enhanced I/O thread combines the commands into a single channel to the Redis engine, as shown in the following diagram.

In the preceding figure, multiple clients are handled by each enhanced I/O multiplexing thread, which in turn uses a single channel to the Redis main engine thread. The enhanced I/O thread collects and multiplexes client commands into a single batch that is forwarded to the Redis engine for processing. This technique allows for running commands more efficiently, resulting in improved speed, as we demonstrate in the next section.
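The batching idea described above can be illustrated with a toy model. This is only a sketch under stated assumptions, not the ElastiCache implementation: `ToyEngine`, `multiplex`, and `demultiplex` are hypothetical names, the "engine" is a plain dictionary, and there is no real networking or threading. It shows pending commands from several clients being merged into one batch, executed in a single hand-off, and routed back per client.

```python
from collections import deque


class ToyEngine:
    """Hypothetical stand-in for the single-threaded engine: a dict plus a batch counter."""

    def __init__(self):
        self.store = {}
        self.batches_processed = 0

    def process_batch(self, batch):
        # One hand-off from an I/O thread; all commands run back to back.
        self.batches_processed += 1
        replies = []
        for client_id, command in batch:
            op, key, *rest = command
            if op == "SET":
                self.store[key] = rest[0]
                replies.append((client_id, "OK"))
            elif op == "GET":
                replies.append((client_id, self.store.get(key)))
            else:
                replies.append((client_id, f"ERR unknown command {op}"))
        return replies


def multiplex(client_queues):
    """Drain every client's pending commands into one batch, tagged by client id."""
    batch = []
    for client_id, queue in client_queues.items():
        while queue:
            batch.append((client_id, queue.popleft()))
    return batch


def demultiplex(replies):
    """Route replies back to per-client lists, preserving each client's order."""
    routed = {}
    for client_id, reply in replies:
        routed.setdefault(client_id, []).append(reply)
    return routed


engine = ToyEngine()
client_queues = {
    "client-a": deque([("SET", "k1", "v1"), ("GET", "k1")]),
    "client-b": deque([("SET", "k2", "v2")]),
    "client-c": deque([("GET", "missing")]),
}
replies = demultiplex(engine.process_batch(multiplex(client_queues)))
print(replies, engine.batches_processed)
```

In this toy model, four commands from three clients reach the engine as a single batch, which is the property the post attributes to each enhanced I/O thread.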

Performance analysis

In this section, we present benchmark results for the memory-optimized R5 (x86) and R6g (ARM-Graviton2) nodes. We chose a typical caching scenario that included 20% Redis SET (write) and 80% Redis GET (read) commands, with a dataset initialized with 3 million 16B keys with 512B string values. Both ElastiCache and the Redis clients were running in the same Availability Zone.
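To make the workload shape concrete, here is a minimal sketch of a command generator matching the stated mix: 20% SET, 80% GET, 3 million 16-byte keys, and 512-byte string values. The key format, the uniform key distribution, and the function names are assumptions for illustration; the post does not describe the benchmark tool or how keys were selected.

```python
import random

KEY_COUNT = 3_000_000   # dataset size stated in the post
KEY_SIZE = 16           # bytes per key
VALUE_SIZE = 512        # bytes per string value
SET_RATIO = 0.20        # 20% writes, 80% reads


def make_key(index):
    # Hypothetical key format: "key:" plus 12 zero-padded digits = 16 bytes.
    return f"key:{index:012d}"


def generate_commands(count, seed=0):
    """Yield (op, key[, value]) tuples with the stated read/write mix."""
    rng = random.Random(seed)
    value = "x" * VALUE_SIZE
    for _ in range(count):
        # Uniform key selection is an assumption, not taken from the post.
        key = make_key(rng.randrange(KEY_COUNT))
        if rng.random() < SET_RATIO:
            yield ("SET", key, value)
        else:
            yield ("GET", key)


commands = list(generate_commands(10_000, seed=42))
set_share = sum(1 for c in commands if c[0] == "SET") / len(commands)
print(f"SET share: {set_share:.1%}")
```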

Throughput gain

In the following chart, we share results from benchmark runs exercising a range of client concurrency (400 clients, 2,000 clients, and 5,200 clients) against different node sizes. The graph captures the percentage improvement in throughput (requests per second) that enhanced I/O multiplexing delivers.

With up to a 72% gain in requests per second, enhanced I/O multiplexing improves throughput across the board, as can be seen in the preceding graph. Notably, the improvement grows with client load. For example, r6g.xlarge shows 26%, 48%, and 72% ops/sec gains with 400, 2,000, and 5,200 concurrent clients, respectively. The following table lists the raw numbers demonstrating the improvement.

| | 400 Clients | 2,000 Clients | 5,200 Clients |
|---|---|---|---|
| Redis 6.2.6 (ops/sec) | 313,143 | 235,562 | 202,770 |
| Redis 7.05 (ops/sec) | 395,819 | 348,550 | 349,239 |
| Improvement | 26% | 48% | 72% |
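The improvement row follows directly from the raw ops/sec figures. As a quick check, the short sketch below recomputes it; the function name `percent_gain` is ours, and the numbers are copied from the table above.

```python
# (Redis 6.2.6 ops/sec, Redis 7 ops/sec) per concurrency level, from the table.
results = {
    400: (313_143, 395_819),
    2_000: (235_562, 348_550),
    5_200: (202_770, 349_239),
}


def percent_gain(before, after):
    """Relative throughput increase, in percent."""
    return (after - before) / before * 100


gains = {clients: round(percent_gain(b, a)) for clients, (b, a) in results.items()}
for clients, gain in gains.items():
    print(f"{clients:>5} clients: {gain}% more ops/sec")
```

The rounded results match the 26%, 48%, and 72% figures reported for r6g.xlarge.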

The multiplexing technique improves Redis processing efficiency, similar to the Redis pipeline feature. With both Redis pipelines and enhanced I/O multiplexing, the Redis engine processes commands in batches. However, while a Redis pipeline batch contains commands from a single client, an enhanced I/O multiplexing batch contains commands from multiple clients.

Client-side latency reduction

Maxing out throughput is not recommended and results in CPU utilization spikes and response time slowdown. To avoid that, we tested latency at 142,000 requests per second, which was 70% throughput utilization of ElastiCache for Redis 6.2.6 on r6g.xlarge. We measured client-side latency with Redis 6 and compared it with the same load on Redis 7. The following table shows the results of a test running on r6g.xlarge. It captures the percentage of P50 and P99 latency reduction.

| | P50 Latency | P99 Latency |
|---|---|---|
| Redis 6.2.6 (ms) | 0.55 | 8.3 |
| Redis 7.05 (ms) | 0.44 | 2.45 |
| Latency Reduction | 20% | 71% |

Similar to the throughput gain, the client-side latency reduction can be seen on the ElastiCache instances that support enhanced I/O multiplexing.
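The figures in this section can be cross-checked with a little arithmetic. The sketch below assumes that the 70% utilization point refers to the 5,200-client Redis 6.2.6 throughput from the earlier table; the post does not say so explicitly, but the numbers line up. The function name `percent_reduction` is ours.

```python
# Assumption: "70% throughput utilization" is relative to the 5,200-client
# Redis 6.2.6 result on r6g.xlarge (202,770 ops/sec).
peak_ops = 202_770
target_load = 0.70 * peak_ops  # close to the 142,000 requests/sec used in the test


def percent_reduction(before, after):
    """Relative latency decrease, in percent."""
    return (before - after) / before * 100


p50 = percent_reduction(0.55, 0.44)  # latencies in ms, from the table
p99 = percent_reduction(8.3, 2.45)

print(f"target load: {target_load:,.0f} requests/sec")
print(f"P50 reduction: {p50:.1f}%")
print(f"P99 reduction: {p99:.1f}%")
```

The P50 reduction comes out at 20%. The P99 reduction computed from the table's rounded latencies is about 70.5%, slightly below the reported 71%; the difference is presumably due to rounding of the published latency values.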

When ElastiCache serves more concurrent clients, enhanced I/O multiplexing provides lower latency. Why is that?

Enhanced I/O multiplexing combines commands originating from a multitude of clients, sometimes in the thousands, into a small number of channels, which is equivalent to the number of enhanced I/O threads. This reduction in the number of channels increases access locality and consequently improves memory cache usage. For example, instead of having a read/write buffer for each client, all clients share the same channel buffer. Costly memory cache misses are reduced and the time spent on a single command is shortened. The result is faster command handling, which lowers latency and increases throughput.

Conclusion

With enhanced I/O multiplexing, you will benefit from increased throughput and reduced latency, without changing your applications. Enhanced I/O multiplexing is automatically available when using Redis 7, in all AWS Regions, at no additional cost.

For more information, see Supported node types. To get started, create a new cluster, or upgrade an existing cluster using the ElastiCache console.

Stay tuned for more performance improvements on ElastiCache for Redis in the future.

Happy caching!

About the author

Mickey Hoter is a principal product manager on the Amazon ElastiCache team. He has 20+ years of experience in building software products as a developer, team lead, group manager, and product manager. Prior to joining AWS, Mickey worked for large companies such as SAP and Informatica, and for startups such as Zend and ClickTale. Outside of work, he spends most of his time in nature, where most of his hobbies are.
