Amazon Aurora PostgreSQL-Compatible Edition is a fully managed PostgreSQL-compatible database engine running in AWS and is a drop-in replacement for PostgreSQL. Aurora PostgreSQL is cost-effective to set up, operate, and scale, and can be deployed for new or existing applications. Informix is a relational database management system from IBM and supports OLTP and other workloads.

In this post, we discuss how to migrate from an Informix database to Aurora PostgreSQL using an extract, transform, and load (ETL) approach. To facilitate migration of databases to Amazon Aurora, AWS provides the AWS Schema Conversion Tool (AWS SCT) and AWS Database Migration Service (AWS DMS). However, as of this writing, Informix is currently not a supported source database. Therefore, we explain in this post how to migrate from Informix to Aurora using an alternate approach using AWS Glue with CData Connect Cloud.

Reasons to migrate from Informix to Aurora

For an Informix database administrator, there are many reasons that you migrate to Aurora PostgreSQL:

Cost savings – It offers pay-as-you-go pricing, which means that you only pay for the resources you use. Also, you don’t pay anything for the database licensing.

Scalability – It allows you to easily scale your database up or down as needed without any downtime. Read replicas provide the ability to handle increased traffic and distribute workloads for database reads.

High availability and disaster recovery – It provides high availability and fault tolerance. It also provides built-in automatic failover and replication. This allows your application to continue to operate without interruption even if one or more instances fail.

Security – It offers several security features, including network isolation, encryption at rest and in transit, and authentication and authorization mechanisms.

Overall, migrating to Aurora PostgreSQL can provide your business with increased performance, scalability, high availability, security, and cost savings.

Solution overview

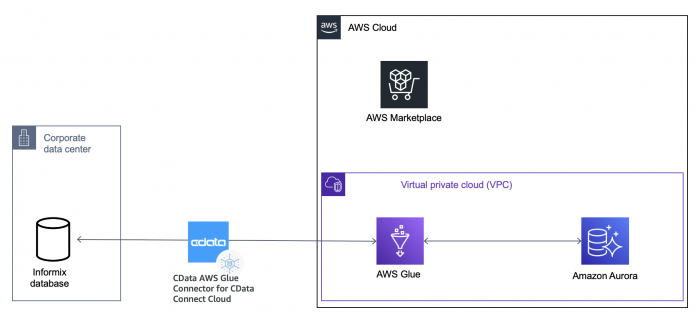

The following diagram shows how you can use CData Connect Cloud as an Informix source connection from within AWS Glue to migrate data to Aurora.

To demonstrate the migration experience, we sign up for CData Connect Cloud to connect AWS Glue to the Informix source database on premises or within AWS. Then we spin up an Aurora cluster acting as the target database environment. For instructions to launch your Aurora PostgreSQL cluster, refer to Creating an Amazon Aurora DB cluster. The solution is illustrated in the following architecture diagram.

AWS Glue is a serverless data integration service that makes it easy for analytics users to discover, prepare, move, and integrate data from multiple sources. You can use it for analytics, machine learning, and application development. It also includes additional productivity and DataOps tooling for authoring, running jobs, and implementing business workflows.

CData Connect Cloud is a consolidated connectivity platform that lets you connect any application with data from over 250 enterprise data sources, on premises or in the cloud. CData’s AWS Glue Connector for CData Connect Cloud simplifies the process of connecting AWS Glue jobs to extract and load data from more than 100 software as a service (SaaS), big data, and NoSQL data sources. You can connect to the Informix source from within AWS Glue by using CData Connect Cloud and sign up for a free trial.

Planning and assessment of a migration from Informix to PostgreSQL

Planning and assessing a migration from Informix to a PostgreSQL database involves several key steps, including the following:

Analyze the database objects of the Informix database, which is critical in understanding the complexity of the migration. This includes analyzing its tables, columns, schema, data types, indexes, stored procedures, functions, and triggers. Without the right database migration tools and depending on the complexity of the database objects, this can involve extensive development effort. An assessment of the database objects is critical for designing the new PostgreSQL database and ensuring that data is migrated accurately.

Perform an impact analysis to understand all technical and human dependencies to the Informix database and a risk mitigation strategy that involves rollback in the event of a critical error that stalls the database migration project.

Train the existing technical team on the differences between Informix and PostgreSQL. This makes sure that they’re able to develop and troubleshoot during and after the database migration project.

Understand the scope of the migration. There may be some database objects that are no longer being utilized and therefore can be excluded from the migration scope. This also helps in finalizing the development and testing effort involved during the database migration project.

Create the database objects in the target Aurora PostgreSQL database. This involves defining the schema, data types, and indexes by writing data definition language (DDL) statements.

Perform development and testing to convert or even rewrite the stored procedures, triggers, and functions for PostgreSQL compatibility.

Choose your data migration platform. In this post, we use AWS Glue.

Migrate the data using the ETL platform, which involves the initial load and subsequent change data capture (CDC) to ensure both databases are in sync until the cutover is complete.

Test the migrated data to ensure data accuracy and performance SLA. You can find more information on performance tuning of your Aurora cluster in Essential concepts for Aurora PostgreSQL tuning.

In summary, for a database migration project, planning and assessing a migration from Informix to Aurora PostgreSQL requires careful analysis and planning to ensure a smooth transition and minimal disruption to business operations. In this post, we are primarily focusing on the data migration process.

Schema and code conversion from Informix to PostgreSQL

Converting the database schema from Informix to PostgreSQL is the first step before we migrate any data. The database schema can either be converted manually or using any automated tools in the market. Similarly, converting the SQL code that is in the form of stored procedures, functions, and triggers requires manual effort or an automated tool. Although the focus of this post is not to discuss schema and code conversion, we cover the basics of the data types in the next section.

Differences in data types and syntax between Informix and PostgreSQL

Before we develop the migration ETL job, it’s important to understand the differences in data types between Informix and PostgreSQL. Depending on your specific database tables, there are several differences to consider, as shown in the following table. Refer to the PostgreSQL documentation for a full list of data types in PostgreSQL.

Informix Data Types

PostgreSQL Data Types (since 8.4)

CHAR(n)

CHAR(n)

INT / INTEGER

INT4

NUMERIC / DEC / DECIMAL(p,s)

DECIMAL(p,s)

DATE

DATE

VARCHAR(n[,m])

VARCHAR(n)

NCHAR(n)

CHAR (n)

NVARCHAR(n[,m])

Varchar (n)

SMALLINT

INT2

DOUBLE PRECISION / FLOAT[(n)]

FLOAT4

REAL / SMALLFLOAT

FLOAT8

MONEY

DECIMAL(16,2)

DATETIME q1 TO q2 (other than above)

TIMESTAMP(p) WITHOUT TIME ZONE

As with any heterogeneous database migration, it’s also critical to understand the differences in syntax used in the source and target database. The following is a sample illustration highlighting some of the differences in DDL statements for the same table between Informix and PostgreSQL.

The following code is what a DDL statement looks like in Informix:

Compare that to the following DDL statement for a table with the same structure in PostgreSQL:

In the previous example, the difference in the DDL script is minor. However, based on the data types you are using in your source Informix table, more differences in the DDL script are possible.

Migrating stored procedures using this approach requires manual effort, so understanding the differences between PostgreSQL and Informix is important. For illustration, the following example shows the difference between both database management systems for a sample stored procedure that removed duplicate transactions.

The following is the Informix code:

Compare to the following PostgreSQL code:

As you can see, unlike data types and DDL, there is a significant difference in the way stored procedures are written within PostgreSQL compared to Informix, so an assessment of development effort required to convert stored procedures is critical.

After the target database objects, which include tables, stored procedures, views, and indexes, are created within Aurora, the next step is to create the migration ETL job in AWS Glue.

Developing a migration ETL job using AWS Glue

The AWS Glue connector for CData Connect Cloud enables AWS Glue jobs to connect to a variety of data sources, including Informix database tables.

We now show you how to create an ELT job in AWS Glue, which will use the CData connector to connect to the Informix database, and how to migrate the data from Informix to PostgreSQL tables.

On the AWS Glue console, create the connection to the Informix database using CData Connect Cloud. For instructions, refer to Access Live IBM Informix Data in AWS Glue Studio. After following those instructions, you should see your Informix connection in AWS Glue Studio.

Now you can create your ETL migration job within AWS Glue. For specific steps on how to build your ETL job by connecting to your Aurora target database and defining the source-to-target mappings for each table, refer to AWS Glue Documentation.

AWS Glue Studio provides a set of built-in transforms that you can use to process your data. Your data passes from one node in the job diagram to another in a data structure called a DynamicFrame, which is an extension to an Apache Spark SQL DataFrame. Between the data source and data target nodes is the Transform – ApplyMapping node. You can configure this transform node to modify your data, or you can use additional transforms.

ETL testing to ensure data accuracy

After you run your AWS Glue job and migrate your data over to Aurora, you have to conduct ETL testing to ensure that the data matches between the source and the target. There are several approaches and tools that you can use to validate the data, but this is out of scope for this post. One way is for the PySpark code within the AWS Glue job to compare both source and target data frames to inspect any differences. Your PySpark code can also compare row counts, distinct values, and check for NULL values between both data frames as part of your data validation testing. Another way is to use an AWS Glue notebook for interactive validation.

Clean up

Stop any AWS Glue jobs that are running and adhere to the CData Cloud Connect free trial license agreement. Delete the Aurora cluster to ensure costs don’t continue to accrue. If you want to continue using this test environment, it’s recommended to create a billing alarm to ensure you stay within your budget for a proof of concept migration from Informix to Aurora PostgreSQL.

Conclusion

In this post, we provided an overview of migrating from an Informix database to Aurora PostgreSQL. In addition, we highlighted some of the differences between Informix and PostgreSQL so you can assess the complexity of your specific migration. We also discussed the ETL approach to migration using AWS Glue by using CData Connect Cloud for Informix.

When migrating from Informix to Aurora, ensure that your assessment takes into account the differences between both database management systems and how they will be addressed. Also, you can conduct a proof of concept to determine the best approach to migrating from Informix to Aurora. AWS Marketplace offers several AWS Partner products and services to choose from to help you complete your migration. You can also subscribe to CData Connect Cloud from there.

About the Authors

Muz Syed is a Senior Solutions Architect, Partners with AWS. His specialty is data analytics, and he holds 13 AWS Certifications and a master’s in Predictive Analytics. Muz has over 26 years of technology experience with deep consulting, solution architecture, and technology leadership, and is very passionate about building data-driven organizations to fuel business innovation and growth. In his spare time, Muz likes to travel and volunteer in local community activities.

Siva Thang is a Senior Solutions Architect, Partners with AWS. His specialty is in databases and analytics and he also holds a master’s degree in Engineering. Siva is deeply passionate about helping customers build a modern data platform in the cloud that includes migrating to modern relational databases and building data lakes and data pipelines at scale for analytics and machine learning. Siva also likes to present in various conferences and summits on the topic of modern databases and analytics.

Nirav Shah is a Principal Solutions Architect, Partners with AWS, based in sunny California. He specializes in AI/ML and containers and guides AWS customers to build highly secure, scalable, reliable, and cost-efficient applications in the cloud. He brings to his role over 18 years of technology experience in software development and architecture, data governance, engineering, and IT management. Outside of work, Nirav enjoys taking photographs and adventuring to different places.

Read MoreAWS Database Blog