In this article, you will learn how to build the Workato On-prem Agent (OPA) into a Docker container and deploy it as a microservice into a Kubernetes cluster.

We’ll cover steps for Amazon EKS and Microsoft AKS, but most are applicable to deploying to any Kubernetes environment including Google Cloud GKE or IBM OpenShift.

This article assumes you already have both a working Kubernetes cluster and a Docker image registry available, and that you can successfully push a hello world app to your registry and deploy it from there to your Kubernetes cluster.

Process of deploying Workato OPA as a microservice on Kubernetes

The overall process includes three major steps:

Build the Docker image

Push the Docker image to a registry

Deploy the Docker image from the registry to Kubernetes

As an example, we will use a payroll management system that lacks suitable APIs and runs behind a firewall without access to the Internet. We will deploy OPA to access this app’s database directly. The same approach could be used to inject Workato into a protected microservices architecture that doesn’t expose its services to the Internet.

It is a good idea to test our OPA Docker image and the recipes that use it in a sandbox before deploying them to a production environment. For example, your cluster may have two namespaces: staging and prod. The commands used to deploy your image to these namespaces will be similar. However, to keep this article more concise, we will only deploy to one namespace.

Consider fault tolerance when deploying microservice-based Workato OPA on Kubernetes

It’s also a good idea to consider fault tolerance when deploying OPA. Fault tolerance can be accomplished in different ways.

Workato fault tolerance

The traditional Workato approach to fault tolerance is to use an On-prem Group with two or more agents. Workato will load balance between available agents in the group, so if one becomes unavailable then load will be balanced to the others.

Kubernetes fault tolerance

However, Kubernetes has its own approach to fault tolerance. Kubernetes can monitor pod health, and can restart pods if necessary.

In some cases, Kubernetes fault tolerance may be enough, and this article will demonstrate deploying a single agent to a Kubernetes cluster (though we will show an on-prem group with multiple agents to demonstrate migrating an agent from a virtual server to a Kubernetes cluster).

There may be other reasons for deploying multiple agents to an on-prem group, multiple agents to a Kubernetes cluster, across clusters or across other environments, but this article won’t consider those.

When deploying multiple agents, keep in mind that every agent instance should have a unique key pair to identify itself when connecting to Workato. When two OPA agents use the same key pair, only one will be able to connect at a time.
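Reusing a key pair is easy to do by accident when files are copied between agents, so a quick check before deployment can help. The following is a minimal sketch and not part of Workato's tooling: `keys_are_unique` is a hypothetical helper, and the paths in the usage comment are placeholders for wherever you unpacked each agent's cert.zip.

```shell
# Hypothetical helper (not a Workato tool): succeeds only when both
# certificate files are readable and their contents differ.
keys_are_unique() {
  if [ ! -r "$1" ] || [ ! -r "$2" ]; then
    echo "ERROR: cannot read $1 or $2" >&2
    return 2
  fi
  # cmp -s prints nothing and exits 0 when the files are byte-for-byte identical
  if cmp -s "$1" "$2"; then
    echo "ERROR: $1 and $2 are identical; each agent needs its own key pair" >&2
    return 1
  fi
  return 0
}

# Example usage (placeholder paths):
#   keys_are_unique agent-a/cert.pem agent-b/cert.pem
```

The check only compares file contents; it does not validate that either certificate is accepted by Workato.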

Before we start

To follow along, you will need access to an Amazon EKS or Microsoft AKS cluster. You can also try a different Kubernetes distribution such as Google GKE, IBM OpenShift, or even Docker Kubernetes running on your laptop.

As mentioned above, this article does not cover setting up a working Kubernetes cluster or Docker image registry. It assumes you already have both available and you can successfully push and deploy a hello world app to/from your registry to your Kubernetes cluster.

At the end, we will use this Employees sample MySQL database to test our agents. It contains information about departments, employees and their salaries.

Before starting, you will need to install:

awscli (if using AWS EKS)

az (if using Azure AKS)

docker and kubectl

You’ll also need to be able to log in to AWS or Azure, and to Docker, from the command line.

Step-by-step: How to deploy Workato OPA as a microservice on Kubernetes

Step 1. Build the Docker image

Our Docker image should contain at least three major components:

the OPA agent

its configuration file

its key pair — this is used by Workato to identify a particular agent instance through a secure mutual TLS websocket connection.

In addition to these mandatory components, the Docker image may contain additional artifacts. If you are using connectors that depend on 3rd party libraries, you will have to include those libraries in the image too.

OPA agent configuration file

The most important part of the agent is its config file. For testing our agents we will use the following config.yml:

employees_db:
  adapter: mysql
  host: ${MYSQL_HOST}
  port: ${MYSQL_PORT}
  database: ${MYSQL_DATABASE}
  username: ${MYSQL_USER}
  password: ${MYSQL_PASSWORD}

You may notice that this file contains references to MYSQL_* environment variables, which will be defined below. The contents of an OPA config file depend on the applications or services you are going to connect to via that agent.
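Since the config file relies on these variables being present in the container's environment, it can be useful to fail fast when one is missing. The following is a rough sketch of such a check, assuming the five variable names used in the config.yml above; `missing_vars` is a hypothetical helper and is not something the agent ships with.

```shell
# Hypothetical helper: prints the names of required variables that are
# unset or empty, separated by spaces (prints an empty line if none).
missing_vars() {
  missing=""
  for name in MYSQL_HOST MYSQL_PORT MYSQL_DATABASE MYSQL_USER MYSQL_PASSWORD; do
    # Indirect lookup of the variable called $name (POSIX-compatible)
    eval "value=\${$name:-}"
    [ -n "$value" ] || missing="$missing $name"
  done
  printf '%s\n' "${missing# }"
}

# Example usage, e.g. in a container entrypoint script:
#   m=$(missing_vars); [ -z "$m" ] || { echo "Missing: $m" >&2; exit 1; }
```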

A group of OPA agents deployed for fault tolerance should have identical config files to ensure correct behavior when Workato traffic is load balanced across the group. For the full list of supported connections visit Workato On-Prem Agent Connection Profiles Documentation.

Creating an OPA group and an OPA agent

Next, we will get the archived OPA binaries. We’ll do this by creating an OPA group and an OPA agent. Be sure to select Linux OS when adding the agent.

You will have to accept an End User License Agreement every time you add a new agent. This is one reason why Workato does not currently distribute OPA as a prepackaged Docker image.

Unique OPA agent key pair

When done, you should have:

a unique key pair per agent (cert.zip)

a distributable package (workato-agent-linux-x64-X.Y.Z.tar.gz).

Copy the config.yml file from earlier and the distributable package to a working directory to create the Dockerfile.

Your working directory should now contain the following files (the key pair in the cert.zip file will be added as a Kubernetes secret in step 3):

config.yml — configuration file

workato-agent-linux-x64-2.9.10.tar.gz — distributable package

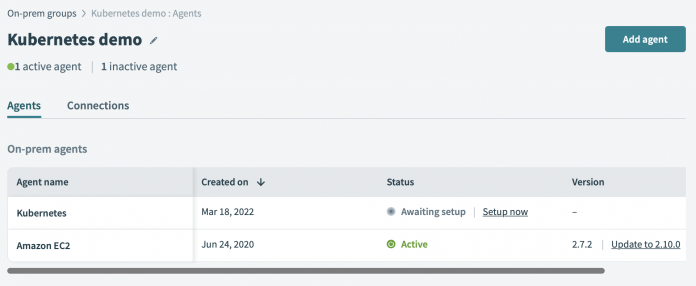

And your on-prem group should look similar to this (though without the Amazon EC2 agent):

On-prem group

In the screenshot above, we have two agents:

Amazon EC2 agent

The Amazon EC2 agent is a legacy agent which is running in a virtual machine. In your case, this should not show in your on-prem group.

Kubernetes agent

We’re going to replace the Amazon EC2 agent with a new one running in a Kubernetes cluster. When the new agent has been deployed, we can remove the legacy agent. The same procedure may be used to migrate from one public cloud to another.

Create your Dockerfile

Now create a new file called Dockerfile in the working directory, and add these contents:

In our case, we do not need any 3rd party libraries, but if you do, you will need to uncomment and modify the appropriate lines in this file.

You may notice that this Dockerfile points to certificate and private key files that are not copied from the working directory by the Dockerfile. Again, we will introduce them as Kubernetes secrets in step 3.

For now, it’s enough to know that the config.yml expects certain environment variables and the Dockerfile points to a specific key pair identifying our OPA agent in the On-prem Group. Let’s build the image!

Building the Docker image

% docker image build --tag 123456789012.dkr.ecr.us-west-1.amazonaws.com/workato-agent:2.9.10-r1 .

This command should be run inside your working directory. Don’t forget the dot at the end which references the current directory. Also, the fully qualified name of the image will differ based on the name of your registry that may already exist or that you might create below.
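To make it clearer how the fully qualified image name is put together for ECR, here is a small sketch that assembles it from its parts. The account ID, region, repository and tag are the example values used in this article; the variable names are illustrative only. The script prints the build command rather than running it.

```shell
# Example values from this article; replace with your own.
ACCOUNT_ID=123456789012
REGION=us-west-1
REPOSITORY=workato-agent
TAG=2.9.10-r1

# ECR registry host names follow <account>.dkr.ecr.<region>.amazonaws.com
REGISTRY="${ACCOUNT_ID}.dkr.ecr.${REGION}.amazonaws.com"
IMAGE="${REGISTRY}/${REPOSITORY}:${TAG}"

# Print the command instead of executing it
echo "docker image build --tag ${IMAGE} ."
```

Keeping the tag in one variable makes it easier to use exactly the same name for the build, the push and the deployment descriptor.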

If the image builds successfully, you should see it in the list of available images:

% docker images

REPOSITORY                                                   TAG         IMAGE ID       CREATED       SIZE
123456789012.dkr.ecr.us-west-1.amazonaws.com/workato-agent   2.9.10-r1   56dbf7a9ca3d   2 hours ago   523MB

Congratulations! You now have an OPA Docker image.

Step 2. Push the Docker image to a registry

The tools and commands we will use to push our image to a registry and deploy it to Kubernetes may be different for different cloud providers. In the following sections, we show the appropriate tools and commands for AWS ECR/EKS and Azure ACR/AKS.

Pushing to Amazon Elastic Container Registry (ECR)

First, make sure you have installed the AWS CLI and have logged into your account from the command line. For example:

% aws ecr get-login-password --region us-west-1 | docker login --username AWS --password-stdin 123456789012.dkr.ecr.us-west-1.amazonaws.com

Next, we can create the repository for our agent (if you have not done so yet). For example:

% aws ecr create-repository --repository-name workato-agent --image-scanning-configuration scanOnPush=true

REPOSITORY 1644982532.0 MUTABLE 123456789012 arn:aws:ecr:us-west-1:123456789012:repository/workato-agent workato-agent 123456789012.dkr.ecr.us-west-1.amazonaws.com/workato-agent

ENCRYPTIONCONFIGURATION AES256

IMAGESCANNINGCONFIGURATION True

You can refer to the detailed instructions provided in the AWS Console for how to push an image to your ECR registry (available under the “View push command” button in the list of repositories in ECR). For example, we will push our image to the ECR registry:

% docker push 123456789012.dkr.ecr.us-west-1.amazonaws.com/workato-agent:2.9.10-r1

Every time Kubernetes starts a Workato OPA container instance, it will pull this image from the registry and run it in the cluster.

Pushing to Azure Container Registry

To push anything to Microsoft Azure, we need to install the Azure CLI and log in:

% az login

% az acr login --name myregistry

% docker login myregistry.azurecr.io

In Microsoft Azure, the repository is created automatically the first time you push an image to it:

% docker image build --tag myregistry.azurecr.io/workato-agent:2.9.10-r1 .

% docker push myregistry.azurecr.io/workato-agent:2.9.10-r1

Step 3. Deploy the Docker image from the registry to Kubernetes

Now our OPA Docker image is ready to be pulled from the registry and deployed to our Kubernetes cluster.

Deploy Docker image to Amazon EKS

First, let’s just check that we are connected to the right cluster:

% aws eks update-kubeconfig --region us-west-1 --name north-california

Added new context arn:aws:eks:us-west-1:123456789012:cluster/north-california to ~/.kube/config

% kubectl get svc

NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.100.0.1   <none>        443/TCP   3h43m

% kubectl config current-context

arn:aws:eks:us-west-1:123456789012:cluster/north-california

Next, we recommend creating a separate namespace for each environment to keep production and staging isolated:

% kubectl create namespace prod

namespace/prod created

Earlier in this article we downloaded the key pair. Now it’s time to put it in place by creating a Kubernetes secret like this:

% kubectl create secret tls workato-agent-keypair --cert=north-california/cert.pem --key=north-california/cert.key --namespace=prod

secret/workato-agent-keypair created

You may remember that our config.yml referenced environment variables for connection properties. We should pass these now into our OPA container using a ConfigMap in Kubernetes. Create a file `mysql-prod.env` like this:

MYSQL_HOST=mysql-98ccbdf8-zztwh

MYSQL_PORT=3306

MYSQL_DATABASE=employees

Then import that data into Kubernetes:

% kubectl create configmap employee-db --from-env-file=mysql-prod.env --namespace=prod

configmap/employee-db created

The access credentials for the database should be stored as a Kubernetes secret rather than in the ConfigMap, to limit their exposure in the Kubernetes environment:

% kubectl create secret generic employee-db \
    --from-literal=MYSQL_USER=admin \
    --from-literal=MYSQL_PASSWORD='S!B*d$zDsb=' \
    --namespace=prod

secret/employee-db created
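Passing credentials with --from-literal leaves them in your shell history. One possible alternative, sketched below, is to write them to a temporary file that only your user can read and load that file with the --from-env-file option of kubectl create secret generic. The variable name `CREDS_FILE` is illustrative, the credentials are the example values from this article, and this sketch assumes kubectl takes the value after the first `=` literally. The script prints the kubectl command instead of running it.

```shell
# mktemp creates the file with owner-only read/write permissions
CREDS_FILE=$(mktemp)

# A quoted heredoc delimiter keeps the shell from expanding $, * or !
cat > "$CREDS_FILE" <<'EOF'
MYSQL_USER=admin
MYSQL_PASSWORD=S!B*d$zDsb=
EOF

# Print the command instead of executing it
echo "kubectl create secret generic employee-db --from-env-file=$CREDS_FILE --namespace=prod"

# After the secret has been created, delete the file:
#   rm -f "$CREDS_FILE"
```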

That’s it. Our cluster now has a prod namespace ready to run the Workato on-prem agent image. To make it happen, we just need to tell Kubernetes that this image will use the secrets and ConfigMap we’ve just created.

For this purpose, the cluster expects us to provide a deployment descriptor. You can download it here:

This descriptor does not contain exact information about any particular database, so we can use the same descriptor to run the Docker image in any environment. However, you will have to update the descriptor with the fully qualified name of the image in your registry:

spec:
  containers:
  - name: workato-agent
    image: <fully qualified name of your image in your registry>
    volumeMounts:
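For orientation, here is a rough sketch of what a deployment descriptor along these lines could look like. It is not the descriptor referenced above. It assumes the secret and ConfigMap names created earlier in this step; the file name workato-agent-sketch.yaml, the `app` label and the mount path are assumptions made for illustration, and the mount path in particular must match whatever location your Dockerfile tells the agent to read its key pair from.

```shell
MANIFEST=workato-agent-sketch.yaml   # hypothetical file name
IMAGE="123456789012.dkr.ecr.us-west-1.amazonaws.com/workato-agent:2.9.10-r1"

cat > "$MANIFEST" <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: workato-agent
spec:
  replicas: 1                  # one pod per agent key pair
  selector:
    matchLabels:
      app: workato-agent       # assumed label
  template:
    metadata:
      labels:
        app: workato-agent
    spec:
      containers:
      - name: workato-agent
        image: ${IMAGE}
        envFrom:               # exposes MYSQL_* to the container
        - configMapRef:
            name: employee-db
        - secretRef:
            name: employee-db
        volumeMounts:
        - name: keypair
          mountPath: /opt/workato/keypair   # ASSUMED path, adjust to your image
          readOnly: true
      volumes:
      - name: keypair
        secret:
          secretName: workato-agent-keypair  # files appear as tls.crt and tls.key
EOF

echo "Wrote $MANIFEST"
```

Note that a secret created with kubectl create secret tls stores the certificate and key under the names tls.crt and tls.key, so those are the file names that appear in the mounted directory.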

All we need to do now is specify the namespace on the command line:

% kubectl apply -f workato-agent.yaml --namespace=prod

deployment.apps/workato-agent created

Now you can verify that the Kubernetes pod has started:

% kubectl get pods --namespace=prod

NAME                             READY   STATUS    RESTARTS   AGE
workato-agent-7c6dfcf89b-5ll7c   1/1     Running   0          113m

Here you can see that the pod has one of one containers ready, and that there is a single pod because the deployment descriptor specifies one replica. The replica count can be overridden, manually or by an autoscaler, to scale the number of running pods. That should be avoided: every replica would use the same key pair, which leads to duplicate key conflicts between OPA agents, and only one of them would be able to connect at a time.
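One way to guard against accidental scaling is to check the replica count in the descriptor before applying it. The sketch below is a naive text match rather than a real YAML parser, and both function names are hypothetical. It treats a missing replicas field as 1, which is the Kubernetes default for a Deployment.

```shell
# Hypothetical helper: prints the first "replicas:" value found in a manifest.
manifest_replicas() {
  awk '$1 == "replicas:" { print $2; exit }' "$1"
}

# Hypothetical helper: fails unless the manifest asks for exactly one replica.
assert_single_replica() {
  count=$(manifest_replicas "$1")
  count=${count:-1}   # Kubernetes defaults to 1 when the field is omitted
  if [ "$count" != "1" ]; then
    echo "ERROR: $1 requests $count replicas; an agent key pair supports only one" >&2
    return 1
  fi
  return 0
}

# Example usage:
#   assert_single_replica workato-agent.yaml && kubectl apply -f workato-agent.yaml --namespace=prod
```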

You can look at the OPA agent’s log in Kubernetes like so:

% kubectl logs workato-agent-7c6dfcf89b-5ll7c --namespace=prod

2022-02-17 03:06:30.070Z [main] INFO com.workato.agent.Config - [JRE] Oracle Corporation version 11.0.12
2022-02-17 03:06:30.074Z [main] INFO com.workato.agent.Config - [OS] Linux amd64 5.4.176-91.338.amzn2.x86_64
2022-02-17 03:06:31.562Z [main] INFO c.w.agent.Application - Agent version: 2.10 CN: 3ab29a47f0d287a9ef6f90b703dbf4c1:12055
2022-02-17 03:06:32.317Z [main] INFO c.w.a.sap.WebhookNotifier - HTTP nofification connectTimeout=10000, connectionRequestTimeout=10000, socketTimeout=10000
2022-02-17 03:06:32.721Z [main] INFO o.h.v.i.util.Version - HV000001: Hibernate Validator 6.0.7.Final
2022-02-17 03:06:32.987Z [main] INFO c.w.agent.http.SapService - Service starting...
2022-02-17 03:06:33.531Z [main] INFO o.a.c.h.Http11Nio2Protocol - Initializing ProtocolHandler ["https-jsse-nio2-127.0.0.1-3000"]
2022-02-17 03:06:33.820Z [main] INFO o.a.c.h.Http11Nio2Protocol - Starting ProtocolHandler ["https-jsse-nio2-127.0.0.1-3000"]
2022-02-17 03:06:33.822Z [main] INFO c.w.agent.http.Server - Connector started on /127.0.0.1:3000
2022-02-17 03:06:34.807Z [main] INFO c.workato.agent.net.Agent - Gateway ping successful: sg1.workato.com:443 version dev
2022-02-17 03:06:35.435Z [main] INFO c.workato.agent.net.Agent - Gateway ping successful: sg2.workato.com:443 version dev
2022-02-17 03:06:35.753Z [agent-control-thread] INFO c.workato.agent.net.Agent - Connected to gateway sg1.workato.com.
2022-02-17 03:06:35.838Z [agent-control-thread] INFO c.workato.agent.net.Agent - Connected to gateway sg2.workato.com.

OPA writes its logs to stdout and stderr, as recommended by the Twelve-Factor App methodology, a widely followed set of practices for architecting modern applications.

This also makes it easy to ship the OPA container logs to a centralized log aggregation system that is automatically monitored. Amazon CloudWatch or Azure Monitor can help with this, and other vendors have similar tools.
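Because the log is plain text on stdout, it is also easy to check from a script. As a small sketch, the hypothetical `connected_gateways` filter below reads agent log lines on standard input and prints the gateways the agent reports being connected to, based on the "Connected to gateway" message format shown in the sample log above.

```shell
# Hypothetical filter: reads agent log lines on stdin and prints each
# gateway host from "Connected to gateway <host>." lines, without duplicates.
connected_gateways() {
  sed -n 's/.*Connected to gateway \([^ ]*\)\.$/\1/p' | sort -u
}

# Example usage:
#   kubectl logs workato-agent-7c6dfcf89b-5ll7c --namespace=prod | connected_gateways
```

An empty result suggests the agent has not yet connected, in which case the full log is the place to look for errors.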

Deploy Docker image to Azure Kubernetes Service

Deploying to Azure AKS is similar. However, initializing kubectl is different. You can find particular instructions for your cluster at:

Azure Portal > Kubernetes services > <your cluster> > Overview > Connect

They should look like this:

% az account set --subscription 00000000-0000-0000-0000-000000000000

% az aks get-credentials --resource-group PROD --name virginia

After the command line tools are initialized, we can switch between clusters:

% kubectl config use-context virginia

After that, the procedure is the same as what we did above for AWS.

Step 4. End-to-end testing

Now that setup is done, let’s test whether it works. It’s likely that you added your agents without testing them earlier in this article. If so, you will have to step through the setup wizard again by clicking the “setup now” link for the agent in its OPA group in Workato.

Keep clicking the Next button until you reach the last step of the wizard, Step 7 Test, and then click the Test agent button.

Testing the OPA agent

If the OPA log that we looked at above says the agent connected to the gateway, your test results should look like this:

Testing OPA agent — successful

And the list of agents in the on-prem group should look something like this:

Verify that the OPA agent has access to the data in your Kubernetes cluster

OK, but does it have access to the Employees sample MySQL database in the cluster? We need to create a recipe to verify that.

The recipe is very simple: it contains a timer trigger and a MySQL Select rows action.

When configuring the MySQL connection in the recipe, you’ll want to select the name of your on-prem group in the “Is this app in a private network?” connection property. This tells that connection to connect through OPA agents in the group.

Select name of on-prem group in the “Is this app in a private network?” connection property.

While editing the MySQL action, the connector should be able to populate the list of database tables for you. This already tells you that the connector was able to connect to your database through OPA and discover those tables. If anything goes wrong, check the agent’s log, as described above, for additional information about errors.

Populated database

The post How to deploy Workato OPA as a microservice on Kubernetes appeared first on Workato Product Hub.
