This is a guest post co-authored by Berkay Berkman, Yağız Yanıkoğlu, Mutlu Polatcan, Mahmut Turan, Umut Cemal Kıraç from Getir.

Getir is an ultrafast delivery pioneer that revolutionized last-mile delivery in 2015 with its 10-minute grocery delivery proposition. Getir’s story started in Istanbul, and they launched operations in the UK, Netherlands, Germany, France, Spain, Italy, Portugal, and the US in 2021. Getir has launched seven products since inception: GetirFood, GetirMore, GetirWater, GetirLocals, Getirbitaksi (taxi service), Getirdrive (car rental service), and GetirJobs (recruitment).

In this post, we explain how Getir built an end-to-end fraud detection system, from gathering real-time data to detecting fraudulent activities using Amazon Neptune and Amazon DynamoDB to performing loss prevention calculations, observing customer behavior, and giving insight into risky accounts.

Neptune is a fully managed database service built for the cloud that makes it simple to build and run graph applications. Neptune provides built-in security, continuous backups, serverless compute, and integrations with other AWS services.

DynamoDB is a fast and flexible non-relational database service for any scale. DynamoDB enables you to offload the administrative burdens of operating and scaling distributed databases to AWS so that you don’t have to worry about hardware provisioning, setup and configuration, throughput capacity planning, replication, software patching, or cluster scaling.

Overview of solution

In this project, five people from the data and business teams worked together: three from the data team and two from the business audit team. The project itself was completed in 3 months, but the data analysis (detecting connected customers and determining rule sets) took an additional 3 months.

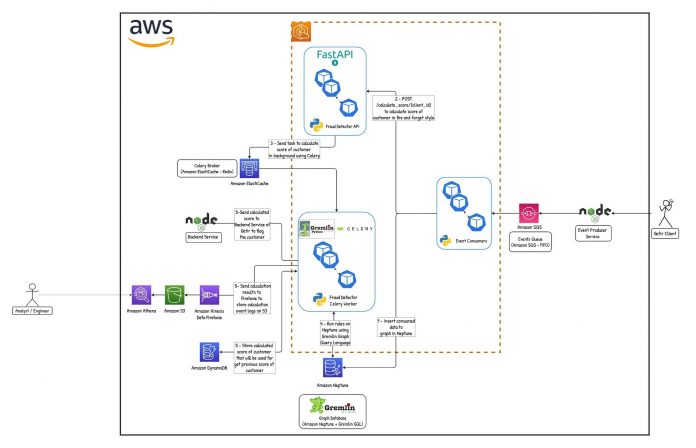

The following diagram shows the architecture of the solution.

Solution details

We needed to get real-time data from the Getir clients through a service that delivers this data to us in a fully managed manner. We chose Amazon Simple Queue Service (Amazon SQS) because it’s cost-efficient, fully managed, and supports FIFO queues. For example, when a new user is created, different attributes of the user (device information, address, and more) need to be fed to the SQS queue so that the fraud detector works accurately. As soon as a relevant event occurs (such as a user adding a new payment method), services send the data to this queue in real time. We also use VPC endpoints, which is important for managing our network costs.
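As a rough illustration of what feeding a FIFO queue can look like, the following sketch builds the arguments for an SQS `SendMessage` call. `MessageGroupId` and `MessageDeduplicationId` are the standard FIFO parameters; the queue URL, the event shape, and the choice of one message group per user are assumptions made for this example, not details from Getir’s implementation.

```python
import hashlib
import json


def build_fifo_message(queue_url: str, user_id: str, event_type: str, attributes: dict) -> dict:
    """Build keyword arguments for an SQS SendMessage call on a FIFO queue."""
    # Hypothetical event shape; sort_keys makes the body deterministic.
    body = json.dumps(
        {"user_id": user_id, "event_type": event_type, "attributes": attributes},
        sort_keys=True,
    )
    return {
        "QueueUrl": queue_url,
        "MessageBody": body,
        # Assumption: one group per user, so each user's events stay ordered.
        "MessageGroupId": user_id,
        # Content hash, so an identical event resent within the
        # deduplication interval is not delivered twice.
        "MessageDeduplicationId": hashlib.sha256(body.encode("utf-8")).hexdigest(),
    }


# Placeholder queue URL and identifiers, for illustration only.
message = build_fifo_message(
    "https://sqs.eu-west-1.amazonaws.com/123456789012/fraud-events.fifo",
    user_id="user-42",
    event_type="payment_method_added",
    attributes={"device_id": "dev-A", "card_fingerprint": "fp-123"},
)
print(message["MessageGroupId"])  # user-42
```

With boto3 installed and credentials configured, the resulting dictionary could be passed as `boto3.client("sqs").send_message(**message)`.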

Our consumers running on Amazon Elastic Kubernetes Service (Amazon EKS) receive data from the SQS queues and insert it into the Neptune database. The high write capacity of Neptune allows real-time data inserts. Because the reader and writer instances are separate, we can read while writing without the two workloads affecting each other. This way, we keep our graph tree in Neptune updated in real time.

When a user logs in with new information or a new attribute, the consumer writes the details to Neptune in milliseconds, so the graph tree reflects them immediately.
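The write path can be pictured with a small in-memory stand-in for the graph. This is only a sketch of the idea that users become connected through shared attributes; the class, the function names, and the event shape are hypothetical, and the real system writes to Neptune rather than to Python dictionaries.

```python
from collections import defaultdict


class AttributeGraph:
    """Toy in-memory stand-in for a user/attribute graph (not Neptune)."""

    def __init__(self):
        self.user_to_attrs = defaultdict(set)
        self.attr_to_users = defaultdict(set)

    def upsert(self, user_id, attr_type, attr_value):
        """Link a user to an attribute; replaying the same event changes nothing."""
        attr = (attr_type, attr_value)
        self.user_to_attrs[user_id].add(attr)
        self.attr_to_users[attr].add(user_id)

    def users_sharing_attribute_with(self, user_id):
        """Return the other users that share at least one attribute with user_id."""
        linked = set()
        for attr in self.user_to_attrs.get(user_id, ()):
            linked |= self.attr_to_users[attr]
        linked.discard(user_id)
        return linked


def handle_event(graph, event):
    """Consumer step: write every attribute carried by the event into the graph."""
    for attr_type, attr_value in event["attributes"].items():
        graph.upsert(event["user_id"], attr_type, attr_value)


graph = AttributeGraph()
handle_event(graph, {"user_id": "user-1", "attributes": {"device_id": "dev-A", "address": "addr-1"}})
handle_event(graph, {"user_id": "user-2", "attributes": {"device_id": "dev-A"}})
handle_event(graph, {"user_id": "user-3", "attributes": {"device_id": "dev-B"}})
print(sorted(graph.users_sharing_attribute_with("user-1")))  # ['user-2']
```

Because each write is an idempotent upsert, a message that is delivered twice leaves the graph unchanged.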

One of the great advantages of using Amazon Neptune is the ability to use the Neptune Notebook feature to visualize and analyze our graph data. This feature makes it easy to explore our data and extract insights from it in a more intuitive way.

With the Neptune Notebook, we can use Jupyter notebooks to write code and create visualizations that let us interact with our graph data in real time. This is particularly useful when trying to identify patterns and relationships between entities, because it allows us to explore the data from multiple angles and gain a deeper understanding of the underlying structures. Beyond interactive visualization, the Neptune Notebook also offers other features that make it well suited to data analysis. For example, it provides pre-built templates and sample code snippets that help us get an analysis up and running quickly.

After the real-time data is inserted into the database, we send it to the Calculator API, where the actual score calculation is done. Because the connections between the nodes of fraud circles can be very large and the calculations can take a long time, the API hands these calculations to Celery workers, which process them asynchronously as queued tasks. The Celery workers need a key-value store from which to retrieve tasks; we use Amazon ElastiCache for Redis for this. The Celery workers assign a score based on various rule-based calculations that consider the nature and density of the connections between nodes. The calculation and analysis that we perform on Neptune based on the user’s linked attributes allows us to extract the user’s data tree. With Gremlin, the graph traversal language supported by Neptune, we can reach our target data in a very short time with straightforward queries, instead of the queries hundreds of lines long that a classical relational database would require. On the data modeling side, one of the most important aspects of our real-time work is to set up a structure where we can reach the data by scanning fewer than 1,000 vertices.
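To make the idea of a bounded traversal plus rule-based scoring concrete, here is a minimal sketch in plain Python. It is not the Gremlin that runs against Neptune, and the point values, the vertex naming scheme (`type:id`), and the scoring rule are all invented for illustration; only the budget of 1,000 vertices comes from the text above.

```python
from collections import deque

# Hypothetical points per shared attribute type (assumed, not Getir's rules).
ATTRIBUTE_POINTS = {"device": 50, "payment": 40, "address": 10}
MAX_VERTICES = 1000  # vertex budget mentioned in the text


def connected_vertices(adjacency, start, max_vertices=MAX_VERTICES):
    """Breadth-first walk from start that never visits more than max_vertices."""
    seen = {start}
    queue = deque([start])
    while queue:
        vertex = queue.popleft()
        for neighbor in adjacency.get(vertex, ()):
            if neighbor in seen:
                continue
            if len(seen) >= max_vertices:
                return seen  # budget exhausted: stop scanning
            seen.add(neighbor)
            queue.append(neighbor)
    return seen


def risk_score(adjacency, user):
    """Add points for every other user sharing one of this user's attributes, capped at 100."""
    points = 0
    for attribute in adjacency.get(user, ()):
        attribute_type = attribute.split(":", 1)[0]
        other_users = len(adjacency.get(attribute, ())) - 1
        points += ATTRIBUTE_POINTS.get(attribute_type, 0) * max(other_users, 0)
    return min(points, 100)


# Undirected user/attribute graph stored as adjacency lists.
adjacency = {
    "user:1": ["device:A", "address:X"],
    "user:2": ["device:A"],
    "user:3": ["address:X", "payment:P"],
    "user:4": ["payment:P"],
    "user:5": ["device:B"],
    "device:A": ["user:1", "user:2"],
    "device:B": ["user:5"],
    "address:X": ["user:1", "user:3"],
    "payment:P": ["user:3", "user:4"],
}

circle = connected_vertices(adjacency, "user:1")
users_in_circle = sorted(v for v in circle if v.startswith("user:"))
print(users_in_circle)                  # ['user:1', 'user:2', 'user:3', 'user:4']
print(risk_score(adjacency, "user:1"))  # 60
print(risk_score(adjacency, "user:5"))  # 0
```

In a setup like the one described, a function of this kind would run inside a Celery task so that large fraud circles do not block the API; the task wiring is left out here to keep the sketch free of external dependencies.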

These assigned user scores are stored in Amazon DynamoDB. DynamoDB is very efficient for scenarios where we need quick answers with cache logic. Scores are computed from the connections established over the attributes that represent the user, which makes it easier to detect fraud circles (data trees).
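One way to picture storing scores with cache logic is an item that carries its own expiry time. The sketch below builds an item in DynamoDB’s low-level attribute-value format; the attribute names, the one-hour lifetime, and the use of an expiry attribute at all are assumptions for this example, not details from the source.

```python
import time


def build_score_item(user_id: str, score: int, now: int, ttl_seconds: int = 3600) -> dict:
    """Build a DynamoDB item (low-level attribute-value format) for a user score."""
    return {
        "user_id": {"S": user_id},             # hypothetical partition key
        "score": {"N": str(score)},            # numbers are sent as strings
        "calculated_at": {"N": str(now)},      # epoch seconds
        # Hypothetical expiry attribute, usable with DynamoDB Time to Live.
        "expires_at": {"N": str(now + ttl_seconds)},
    }


def is_fresh(item: dict, now: int) -> bool:
    """Treat the stored score as a cache hit only while it has not expired."""
    return int(item["expires_at"]["N"]) > now


now = int(time.time())
item = build_score_item("user-42", 60, now)
print(is_fresh(item, now))          # True
print(is_fresh(item, now + 7200))   # False
```

With boto3, such an item could be written with `boto3.client("dynamodb").put_item(TableName="fraud-scores", Item=item)`, where the table name is a placeholder.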

We send the log records to Amazon Simple Storage Service (Amazon S3) with Amazon Kinesis Data Firehose and analyze them with Amazon Athena. We keep these logs because information such as when we read an incoming message and how long the calculation took is essential for monitoring the scoring process and making it more efficient. During peak times, we have observed record processing rates as high as 20,000 records per minute, which corresponds to about 9 MB per minute. From debugging to KPIs, loss prevention calculations, observing customer behavior, and identifying risky accounts, the solution provides us with data that allows us to perform detailed analysis.
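The peak figures above imply a fairly small average record. The following back-of-the-envelope calculation assumes decimal megabytes (1 MB = 1,000,000 bytes), which the source does not specify.

```python
records_per_minute = 20_000
bytes_per_minute = 9 * 1_000_000  # assumption: decimal megabytes

avg_record_bytes = bytes_per_minute / records_per_minute
records_per_second = records_per_minute / 60
kb_per_second = bytes_per_minute / 60 / 1_000

print(avg_record_bytes)           # 450.0 bytes per record
print(round(records_per_second))  # 333 records per second
print(kb_per_second)              # 150.0 KB per second
```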

Conclusion

Neptune has allowed us to use graph databases easily and efficiently within the team and has improved our capabilities when working with real-time data. DynamoDB was the first service we tried, and it worked very well. In the data modeling part, using DynamoDB as the key-value data store prevented Neptune from slowing down day by day. By optimizing Neptune scaling, we also saved a substantial majority of our previous costs. DynamoDB has proven its power under high traffic and, thanks to its low cost and ease of use, has become our preferred service for more projects.

Our multi-layered approach combines data analysis, user verification, fraud scoring, and machine learning. Used in combination, these techniques have improved our protection against fraudulent activity by 95%, for both ourselves and our customers, and have also produced cost savings in marketing.

For more information about building your own fraud detection system using Neptune, see Getting Started with Amazon Neptune and Amazon Neptune Resources.

For additional resources on DynamoDB, see Getting Started with Amazon DynamoDB.

About the Authors

Berkay Berkman, who holds a BSc in Computer Engineering, has experience in both data science and engineering. He has been working for Getir on the Data Platform and Engineering team as a Senior Data Engineer. Berkay enjoys working on data problems and creating solutions with cloud-native platforms.

Yağız Yanıkoğlu has more than 13 years of experience in the software development lifecycle. He started his career as a backend developer and first worked with AWS in 2015. Since then, his main goal has been to create secure, scalable, fault-tolerant, and cost-optimized cloud solutions. He joined Getir, the pioneer of ultrafast grocery delivery, in 2020 and currently works as Senior Data Engineering & Platform Manager. His team is responsible for designing, implementing, and maintaining end-to-end data platform solutions for Getir.

Mutlu Polatcan is a Staff Data Engineer at Getir, specializing in designing and building cloud-native data platforms. He loves combining open-source projects with cloud services.

Mahmut Turan, who holds a BA in Economics and an LLB in Law, has held various technical and business roles and has extensive experience in the financial services industry, on both the regulated and regulatory sides, as well as in q-commerce and technology. He has been working for Getir for more than 2 years in various roles: internal audit manager (data & analytics), audit analytics and fraud prevention manager, and head of fraud analytics and loss prevention. His expertise covers internal audit, audit analytics, data science, credit risk, and fraud risk.

Umut Cemal Kıraç is a Fraud Analytics & Prevention Manager at Getir. He is responsible for managing company-wide fraud risks as well as Getir’s audit analytics function. He holds the CIA (Certified Internal Auditor) and CISA (Certified Information Systems Auditor) certifications. His specialty is delivering analytical solutions for business risks.

Esra Kayabalı is a Senior Solutions Architect at AWS, specializing in the analytics domain including data warehousing, data lakes, big data analytics, batch and real-time data streaming and data integration. She has 12 years of software development and architecture experience. She is passionate about learning and teaching cloud technologies.

Read more: AWS Database Blog