This is a guest blog post co-written with Hussain Jagirdar from Games24x7.

Games24x7 is one of India’s most valuable multi-game platforms and entertains over 100 million gamers across various skill games. With “Science of Gaming” as their core philosophy, they have enabled a vision of end-to-end informatics around game dynamics, game platforms, and players by consolidating orthogonal research directions of game AI, game data science, and game user research. The AI and data science team dives into a plethora of multi-dimensional data and runs a variety of use cases like player journey optimization, game action detection, hyper-personalization, customer 360, and more on AWS.

Games24x7 employs an automated, data-driven, AI-powered framework that assesses each player’s behavior through interactions on the platform and flags users with anomalous behavior. They’ve built a deep learning model, ScarceGAN, which focuses on identifying extremely rare or scarce samples from multi-dimensional longitudinal telemetry data with small and weak labels. This work was published in CIKM’21 and is open source for rare class identification on any longitudinal telemetry data. Productionizing and adopting the model was paramount to creating a backbone for responsible game play on their platform, where flagged users can be taken through a different journey of moderation and control.

In this post, we share how Games24x7 improved their training pipelines for their responsible gaming platform using Amazon SageMaker.

Customer challenges

The DS/AI team at Games24x7 used multiple services provided by AWS, including SageMaker notebooks, AWS Step Functions, AWS Lambda, and Amazon EMR, for building pipelines for various use cases. Handling drift in the data distribution meant retraining their ScarceGAN model, and the team found that the existing system needed a better MLOps solution for this.

In the previous pipeline, built on Step Functions, a single monolithic codebase ran data preprocessing, retraining, and evaluation. This became a bottleneck when troubleshooting, adding or removing a step, or even making small changes to the overall infrastructure. The Step Functions workflow instantiated a cluster of instances to extract and process data from Amazon Simple Storage Service (Amazon S3), and the subsequent preprocessing, training, and evaluation steps ran on a single large EC2 instance. If the pipeline failed at any step, the whole workflow had to be restarted from the beginning, which resulted in repeated runs and increased cost. All the training and evaluation metrics were inspected manually from Amazon S3. There was no mechanism to pass and store the metadata of the multiple experiments run on the model. Because model monitoring was decentralized, thoroughly investigating the models and cherry-picking the best one took the data science team hours. Together, these issues lowered team productivity and increased overhead. Additionally, with a fast-growing team, it was very challenging to share this knowledge across the team.

Because MLOps concepts are very extensive and implementing all the steps would need time, we decided that in the first stage we would address the following core issues:

A secure, controlled, and templatized environment to retrain our in-house deep learning model using industry best practices

A parameterized training environment to send a different set of parameters for each retraining job and audit the last runs

The ability to visually track training metrics and evaluation metrics, and have metadata to track and compare experiments

The ability to scale each step individually and reuse the previous steps in cases of step failures

A single dedicated environment to register models, store features, and invoke inferencing pipelines

A modern toolset that could minimize compute requirements, drive down costs, and drive sustainable ML development and operations by incorporating the flexibility of using different instances for different steps

A benchmark template of a state-of-the-art MLOps pipeline that could be used across various data science teams

Games24x7 started evaluating other solutions, including Amazon SageMaker Studio Pipelines. The existing Step Functions solution had limitations, whereas Studio Pipelines offered the flexibility to add or remove a step at any time. In addition, the overall architecture and the data dependencies between steps can be visualized as directed acyclic graphs (DAGs). Evaluating and fine-tuning the retraining steps became much more efficient after we adopted SageMaker functionality such as Amazon SageMaker Studio, Pipelines, Processing, Training, the model registry, and experiments and trials. The AWS Solutions Architecture team dove deep into our use case and was instrumental in the design and implementation of this solution.

Solution overview

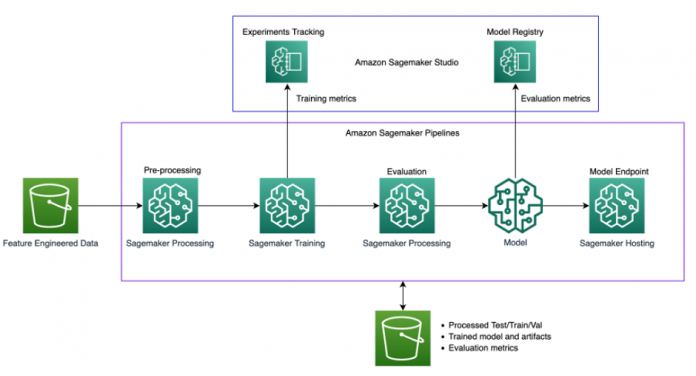

The following diagram illustrates the solution architecture.

The solution uses a SageMaker Studio environment to run the retraining experiments. The code to invoke the pipeline script is available in the Studio notebooks, and we can change the hyperparameters and input/output when invoking the pipeline. This is quite different from our earlier method, where all the parameters were hard-coded within the scripts and all the processes were inextricably linked. Moving to this setup required modularizing the monolithic code into different steps.

The following diagram illustrates our original monolithic process.

Modularization

To scale, track, and run each step individually, the monolithic code needed to be modularized. Parameter, data, and code dependencies between the steps were removed, and shared modules were created for the components used across steps. The following diagram illustrates the modularization.

For every module, testing was done locally using the SageMaker SDK’s script mode for training, processing, and evaluation, which required only minor changes in the code to run with SageMaker. Local mode testing for deep learning scripts can be done either on SageMaker notebooks, if they are already in use, or with local mode in SageMaker Pipelines when starting directly with Pipelines. This helps validate that our custom scripts will run on SageMaker instances.

Each module was then tested in isolation with the SageMaker training and processing SDKs in script mode, and the modules were run manually in sequence on SageMaker instances, one step at a time, for example the training step.

Each step read its source data from Amazon S3 and stored intermediate data, data frames, and NumPy results back to Amazon S3 for the next step. After integration testing between the individual preprocessing, training, and evaluation modules was complete, the SageMaker Pipelines SDK, which is integrated with the SageMaker Python SDK we had already used in the preceding steps, allowed us to chain all these modules programmatically by passing the input parameters, data, metadata, and output of each step as input to the next steps.

We could reuse the SageMaker Python SDK code that had run the modules individually in the SageMaker Pipelines SDK-based runs. The relationships between the steps of the pipeline are determined by the data dependencies between them.
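To make the idea of dependency-driven ordering concrete, the following is a minimal sketch in plain Python rather than the SageMaker Pipelines SDK. The step names and the dependency map are illustrative assumptions based on the four steps described in this post; the standard library `graphlib` module stands in for the scheduler.

```python
from graphlib import TopologicalSorter

# Hypothetical step names. Each step maps to the set of steps whose
# outputs it consumes (its data dependencies).
STEP_INPUTS = {
    "preprocess": set(),
    "train": {"preprocess"},              # train and validation splits
    "evaluate": {"preprocess", "train"},  # test split and trained models
    "register": {"train", "evaluate"},    # model artifacts and metrics
}


def execution_order(step_inputs):
    """Return a run order in which every step follows its dependencies."""
    return list(TopologicalSorter(step_inputs).static_order())


order = execution_order(STEP_INPUTS)
print(order)
```

No explicit ordering is declared anywhere in the sketch. The order falls out of which step consumes which output, which is the same principle the pipeline relies on.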

The final steps of the pipeline are as follows:

Data preprocessing

Retraining

Evaluation

Model registration

In the following sections, we discuss each of the steps in more detail as run with the SageMaker Pipelines SDK.

Data preprocessing

This step transforms and preprocesses the raw input data and splits it into train, validation, and test sets. For this processing step, we instantiated a SageMaker processing job with the TensorFlow Framework Processor, which takes our script, copies the data from Amazon S3, and then pulls a Docker image provided and maintained by SageMaker. This Docker container allowed us to pass our library dependencies in the requirements.txt file while having all the TensorFlow libraries already included, and to pass the path for source_dir for the script. The train and validation data go to the training step, and the test data is forwarded to the evaluation step. The best part of using this container was that it allowed us to pass a variety of inputs and outputs as different S3 locations, which could then be passed as a step dependency to the next steps in the SageMaker pipeline.
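The post does not describe how the split itself is computed. As one possible approach for longitudinal data, the sketch below assigns each player to a split by hashing an ID, so that all of a player's records land in the same set. The `player_id` field, the split ratios, and the function names are assumptions made for illustration only.

```python
import hashlib


def assign_split(player_id, train=0.7, validation=0.15):
    """Map an ID to 'train', 'validation', or 'test' deterministically."""
    digest = hashlib.sha256(str(player_id).encode()).hexdigest()
    # Interpret the first 8 hex digits as a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    if bucket < train:
        return "train"
    if bucket < train + validation:
        return "validation"
    return "test"


def split_records(records, key="player_id"):
    """Group records into the three splits based on the hashed key."""
    splits = {"train": [], "validation": [], "test": []}
    for record in records:
        splits[assign_split(record[key])].append(record)
    return splits


# Hypothetical telemetry: 20 players with 10 sessions each.
records = [{"player_id": i % 20, "session": i} for i in range(200)]
splits = split_records(records)
print({name: len(rows) for name, rows in splits.items()})
```

In a processing job, each of the three lists would be written to its own output location so that the training and evaluation steps can pick up only the data they need.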

Retraining

We wrapped the training module in the SageMaker Pipelines TrainingStep API and used the already available deep learning container images through the TensorFlow Framework estimator (also known as script mode) for SageMaker training. Script mode allowed us to make minimal changes to our training code, and the SageMaker pre-built Docker container handles the Python version, framework version, and so on. The ProcessingOutputs from the Data_Preprocessing step were forwarded as the TrainingInput of this step.
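A script mode estimator configuration might look roughly like the following sketch. The script name, source directory, role ARN, instance type, and version strings are placeholders chosen for illustration, not values from the Games24x7 setup. The keyword arguments are collected in a plain dictionary so they can be inspected without AWS access; `make_estimator` needs the `sagemaker` package installed.

```python
def build_estimator_kwargs(role_arn, hyperparameters):
    """Collect keyword arguments for a script mode TensorFlow estimator."""
    return {
        "entry_point": "train.py",         # hypothetical training script
        "source_dir": "src",               # hypothetical code directory
        "role": role_arn,
        "instance_count": 1,
        "instance_type": "ml.p3.2xlarge",  # assumed instance type
        "framework_version": "2.11",       # assumed TensorFlow version
        "py_version": "py39",
        "hyperparameters": hyperparameters,
    }


def make_estimator(kwargs):
    """Create the estimator; imported lazily because it needs the SDK."""
    from sagemaker.tensorflow import TensorFlow
    return TensorFlow(**kwargs)


kwargs = build_estimator_kwargs(
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",  # placeholder
    hyperparameters={"epochs": 20, "batch_size": 256},
)
print(sorted(kwargs))
```

The resulting estimator would then be handed to the pipeline's training step, with the preprocessing outputs supplied as its training inputs.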

All the hyperparameters were passed to the estimator through a JSON file. For every epoch in our training, we were already sending our training metrics to stdout in the script. Because we wanted to track the metrics of an ongoing training job and compare them with previous training jobs, we just had to parse this stdout by defining metric definitions with regular expressions that fetch the metrics from stdout for every epoch.
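SageMaker metric definitions are a list of dictionaries with `Name` and `Regex` keys, where the regex captures the numeric value from a log line. The sketch below applies such definitions to a sample log locally, which can be a convenient way to check the patterns before a training run. The metric names and the log format are assumptions; the real training script may print something different.

```python
import re

# Hypothetical metric names and log format. Adjust the regexes to match
# what the training script actually prints for each epoch.
METRIC_DEFINITIONS = [
    {"Name": "train:loss", "Regex": r"train_loss=([0-9]*\.?[0-9]+)"},
    {"Name": "validation:precision", "Regex": r"val_precision=([0-9]*\.?[0-9]+)"},
]

SAMPLE_LOG = (
    "epoch=1 train_loss=0.52 val_precision=0.81\n"
    "epoch=2 train_loss=0.40 val_precision=0.86\n"
)


def extract_metrics(log_text, definitions=METRIC_DEFINITIONS):
    """Collect every captured value per metric, in log order."""
    return {
        d["Name"]: [float(v) for v in re.findall(d["Regex"], log_text)]
        for d in definitions
    }


metrics = extract_metrics(SAMPLE_LOG)
print(metrics)
```

The same `METRIC_DEFINITIONS` list could be passed to the estimator's `metric_definitions` argument so that SageMaker extracts these values from the job's logs.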

SageMaker Pipelines automatically integrates with the SageMaker Experiments API, which by default creates an experiment, trial, and trial component for every run. This allows us to compare training metrics like accuracy and precision across multiple runs as shown below.

For each training job run, we generate four different models based on our custom business definition and save them to Amazon S3.

Evaluation

This step loads the trained models from Amazon S3 and evaluates them on our custom metrics. This ProcessingStep takes the model and the test data as its input and writes the model performance reports to Amazon S3.

We’re using custom metrics, so to register them in the model registry, we needed to convert the schema of the evaluation metrics stored in Amazon S3 as CSV to the SageMaker model quality JSON output. Then we can register the location of this evaluation metrics JSON in the model registry.

The following screenshots show an example of how we converted a CSV to the SageMaker model quality JSON format.
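A conversion of this kind can be sketched in a few lines of standard-library Python. The CSV layout (`metric` and `value` columns) and the metric names are assumptions for illustration, and the JSON layout follows the shape used in SageMaker model quality examples, with a metrics section that maps each metric name to a `value` and a `standard_deviation`.

```python
import csv
import io
import json


def csv_to_model_quality(csv_text, section="binary_classification_metrics"):
    """Convert 'metric,value' CSV rows into a model quality style dict."""
    rows = csv.DictReader(io.StringIO(csv_text))
    metrics = {
        row["metric"]: {
            "value": float(row["value"]),
            "standard_deviation": "NaN",  # not computed in this sketch
        }
        for row in rows
    }
    return {section: metrics}


# Hypothetical evaluation report as it might be stored in Amazon S3.
SAMPLE_CSV = "metric,value\nprecision,0.91\nrecall,0.78\n"

report = csv_to_model_quality(SAMPLE_CSV)
print(json.dumps(report, indent=2))
```

The resulting JSON would be written back to Amazon S3, and its location supplied as the model quality statistics when the model is registered.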

Model registration

As mentioned earlier, we created multiple models in a single training step, so we had to use the SageMaker Pipelines Lambda integration to register all four models in the model registry. For a single model registration, we can use the ModelStep API to create a SageMaker model in the registry. For each model, the Lambda function retrieves the model artifact and evaluation metrics from Amazon S3 and creates a model package under a specific ARN, so that all four models can be registered in a single model registry. The SageMaker Python APIs also allowed us to send custom metadata that we wanted to use to select the best models. This proved to be a major milestone for productivity because all the models can now be compared and audited from a single window. We provided metadata to uniquely distinguish the models from one another. This also helped in approving a single model through peer reviews and management reviews based on model metrics.

The above code block shows an example of how we added metadata through model package input to the model registry along with the model metrics.
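As a rough illustration of that kind of model package input, the sketch below builds a request for the boto3 SageMaker client's `create_model_package` call, combining the model artifact, the model quality metrics location, and custom metadata. The group name, image URI, S3 paths, and metadata keys are placeholders and not values from the Games24x7 pipeline. The request is built by a pure function, and the AWS call is kept separate because it needs `boto3` and credentials.

```python
def build_model_package_request(group_name, image_uri, model_data_url,
                                metrics_s3_uri, metadata):
    """Assemble keyword arguments for create_model_package."""
    return {
        "ModelPackageGroupName": group_name,
        "ModelApprovalStatus": "PendingManualApproval",
        "InferenceSpecification": {
            "Containers": [{"Image": image_uri, "ModelDataUrl": model_data_url}],
            "SupportedContentTypes": ["text/csv"],
            "SupportedResponseMIMETypes": ["text/csv"],
        },
        "ModelMetrics": {
            "ModelQuality": {
                "Statistics": {
                    "ContentType": "application/json",
                    "S3Uri": metrics_s3_uri,
                }
            }
        },
        # Metadata values must be strings.
        "CustomerMetadataProperties": {k: str(v) for k, v in metadata.items()},
    }


def register(request):
    """Send the request to SageMaker; needs boto3 and AWS credentials."""
    import boto3
    return boto3.client("sagemaker").create_model_package(**request)


# All identifiers below are hypothetical placeholders.
request = build_model_package_request(
    group_name="example-model-group",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/example-inference:latest",
    model_data_url="s3://example-bucket/models/model-a/model.tar.gz",
    metrics_s3_uri="s3://example-bucket/evaluation/model-a/metrics.json",
    metadata={"model_variant": "model-a", "training_run": 42},
)
print(request["CustomerMetadataProperties"])
```

A Lambda function that registers several models could call the builder once per model, changing only the artifact path, metrics path, and metadata.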

The screenshot below shows how easily we can compare metrics of different model versions once they are registered.

Pipeline invocation

The pipeline can be invoked through Amazon EventBridge, SageMaker Studio, or the SDK itself. The invocation runs the jobs based on the data dependencies between steps.
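For the SDK route, one option is the boto3 SageMaker client's `start_pipeline_execution` call, which accepts parameter overrides as a list of name and value pairs. The sketch below builds such a request. The pipeline name and parameter names are hypothetical, and the parameters must match ones declared in the pipeline definition.

```python
def build_start_request(pipeline_name, parameters, display_name=None):
    """Assemble keyword arguments for start_pipeline_execution."""
    request = {
        "PipelineName": pipeline_name,
        # Parameter values are passed as strings.
        "PipelineParameters": [
            {"Name": name, "Value": str(value)}
            for name, value in parameters.items()
        ],
    }
    if display_name:
        request["PipelineExecutionDisplayName"] = display_name
    return request


def start(request):
    """Start the execution; needs boto3 and AWS credentials."""
    import boto3
    return boto3.client("sagemaker").start_pipeline_execution(**request)


# Hypothetical pipeline and parameter names.
start_request = build_start_request(
    pipeline_name="example-retraining-pipeline",
    parameters={"TrainingEpochs": 20, "InputDataUri": "s3://example-bucket/raw/"},
    display_name="retrain-example",
)
print(start_request)
```

Because every run carries its own parameter values, each retraining job can be launched with a different configuration without editing the scripts.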

Conclusion

In this post, we demonstrated how Games24x7 transformed their MLOps assets through SageMaker Pipelines. The ability to visually track training and evaluation metrics, a parameterized environment, the ability to scale each step individually on the right processing platform, and a central model registry together proved to be a major milestone in standardizing and advancing to an auditable, reusable, efficient, and explainable workflow. This project serves as a blueprint across different data science teams and has increased overall productivity by allowing members to operate, manage, and collaborate with best practices.

If you have a similar use case and want to get started, we recommend going through SageMaker script mode and the SageMaker end-to-end examples using SageMaker Studio. These examples cover the technical details discussed in this post.


About the Authors

Hussain Jagirdar is a Senior Scientist – Applied Research at Games24x7. He is currently involved in research efforts in the area of explainable AI and deep learning. His recent work has involved deep generative modeling, time-series modeling, and related subareas of machine learning and AI. He is also passionate about MLOps and standardizing projects that demand constraints such as scalability, reliability, and sensitivity.

Sumir Kumar is a Solutions Architect at AWS and has over 13 years of experience in the technology industry. At AWS, he works closely with key AWS customers to design and implement cloud-based solutions that solve complex business problems. He is very passionate about data analytics and machine learning and has a proven track record of helping organizations unlock the full potential of their data using AWS Cloud.
