Certain organizations require multi-Region redundancy for their workloads to achieve disaster recovery and business continuity. Disaster recovery is an important part of a resiliency strategy and concerns how a workload responds when a disaster strikes. The most common disaster recovery pattern in AWS is to build a multi-Region application architecture that includes a backend data store. Cross-Region failover for a typical online transaction processing (OLTP) database often requires a clear strategy, dedicated resources, and manual actions to switch database endpoints. Managing all these tasks can complicate the overall disaster recovery solution.

Amazon Aurora is a MySQL- and PostgreSQL-compatible relational database that combines the performance and availability of traditional enterprise databases with the simplicity and cost-effectiveness of open-source databases. Aurora Global Database lets you use a primary Region where your data resides and one or more read-only secondary Regions where data is replicated. Aurora Global Database also offers a managed planned failover feature that incurs zero data loss and maintains the topology of the global cluster.

You can use this feature to test and validate your disaster recovery strategy. In case of a real disaster, the Aurora cluster in the secondary Region needs to be promoted to have write capabilities. To do that, it needs to be removed from the global database cluster, and the secondary Region’s cluster becomes standalone. As the secondary Region’s cluster becomes available, we get new reader and writer endpoints. These endpoints need to be changed in the application configuration to point to the correct database. All of these tasks can be error-prone and time-consuming if done manually.

In this post, we provide an approach for scripting your Aurora failover tasks and switching database endpoints after failover.

Prerequisites

To implement this solution, you need the following:

An application accessing Aurora read and write capabilities, configured in the primary and secondary Regions.

An Aurora cluster (with Aurora Global Database) running in the primary and secondary Regions. For instructions, see Fast cross-region disaster recovery and low-latency global reads.

Solution overview

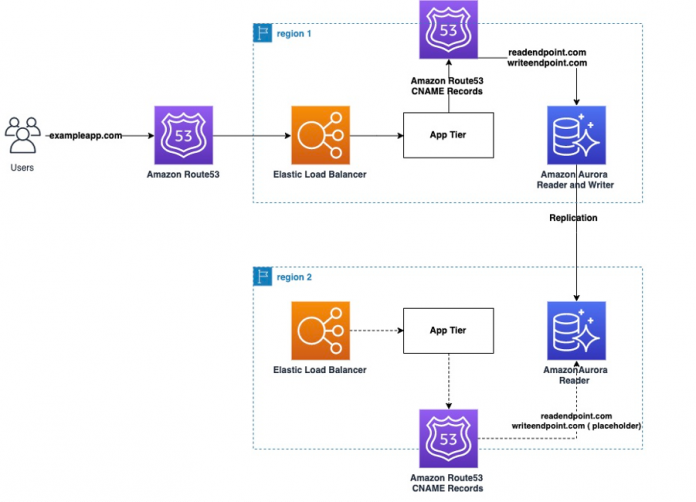

This solution uses Amazon Route 53 CNAME records to store the Aurora reader and writer endpoints. The application connects to the database using these records. We use an AWS Lambda function to automate the otherwise manual tasks: it promotes the secondary Region’s Aurora cluster, waits until the cluster becomes available, and updates the CNAME values in Route 53 with the new endpoints.

The preceding diagram shows the architecture of this solution. First, two Route 53 CNAME records are configured and associated with the Aurora reader and writer endpoints. The consuming application uses those records to call the Aurora database, depending on whether the operation is a read or a write.

The application is deployed in multiple Regions (a primary and a secondary Region). Aurora Global Database uses a built-in replication feature to replicate data from the primary Region to the secondary Region. The secondary Region’s DB instance is read-only and can be used to scale the read operations for your applications by connecting to the reader endpoint of the cluster.

Implement the solution

To set up this solution, complete the following steps:

On the Amazon Relational Database Service (Amazon RDS) console, capture the current state of the Aurora cluster and make a note of Aurora read and write endpoints.

Next, we create our Route 53 records.

On the Route 53 console, create a private hosted zone.

Because Route 53 routes this traffic internally within the VPC, you first create a private hosted zone.

As part of creating the private hosted zone, you must associate one or more VPCs with it.

Because this architecture uses two Regions, associate both VPCs with the private hosted zone.
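If you prefer to script this step rather than use the console, the following is a minimal sketch using the boto3 Route 53 client. The zone name, VPC IDs, and Regions are placeholders, and the helper name `create_private_zone` is hypothetical. The client is passed in as an argument so the helper can be exercised without AWS access.

```python
import uuid


def create_private_zone(route53, zone_name, primary_vpc, secondary_vpc):
    """Create a private hosted zone and associate both VPCs with it.

    `route53` is a boto3 Route 53 client (or a stand-in with the same methods).
    Each VPC argument is a dict such as
    {"VPCRegion": "us-east-1", "VPCId": "vpc-0123456789abcdef0"}.
    """
    # A private hosted zone is created with one VPC already attached.
    response = route53.create_hosted_zone(
        Name=zone_name,
        VPC=primary_vpc,
        CallerReference=str(uuid.uuid4()),  # must be unique per request
        HostedZoneConfig={
            "Comment": "Aurora endpoint aliases",
            "PrivateZone": True,
        },
    )
    zone_id = response["HostedZone"]["Id"]

    # The VPC in the second Region is associated in a separate call.
    route53.associate_vpc_with_hosted_zone(HostedZoneId=zone_id, VPC=secondary_vpc)
    return zone_id


# Example call (requires boto3 and AWS credentials; all IDs are placeholders):
#   import boto3
#   zone_id = create_private_zone(
#       boto3.client("route53"),
#       "example.internal",
#       {"VPCRegion": "us-east-1", "VPCId": "vpc-0123456789abcdef0"},
#       {"VPCRegion": "us-west-2", "VPCId": "vpc-0fedcba9876543210"},
#   )
```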

Now create records in the newly created zone.

For this post, create two CNAME records, one for the writer endpoint and one for the reader endpoint (which we obtained earlier) so that the application can segregate the reader and writer traffic. Choose a simple routing policy.

The following screenshot shows the reader endpoint pointing to the primary Region.

The following screenshot shows the writer endpoint pointing to the primary Region.

Your private hosted zone looks like the following screenshot when you’re done with CNAME creation.
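The same two records can also be created programmatically. The sketch below builds the `ChangeBatch` structure that the Route 53 `change_resource_record_sets` API accepts. The record names `writer.<zone>` and `reader.<zone>`, the 60-second TTL, and both helper names are assumptions chosen for illustration.

```python
def cname_change(record_name, target, ttl=60, action="UPSERT"):
    """Build one Route 53 change that points `record_name` at `target`."""
    return {
        "Action": action,
        "ResourceRecordSet": {
            "Name": record_name,
            "Type": "CNAME",
            "TTL": ttl,
            "ResourceRecords": [{"Value": target}],
        },
    }


def endpoint_change_batch(zone_name, writer_endpoint, reader_endpoint, ttl=60):
    """Build the ChangeBatch for the writer and reader CNAME records."""
    return {
        "Comment": "Aurora writer and reader aliases",
        "Changes": [
            cname_change(f"writer.{zone_name}", writer_endpoint, ttl),
            cname_change(f"reader.{zone_name}", reader_endpoint, ttl),
        ],
    }


# Example call (requires boto3 and AWS credentials; values are placeholders):
#   import boto3
#   boto3.client("route53").change_resource_record_sets(
#       HostedZoneId=zone_id,
#       ChangeBatch=endpoint_change_batch(
#           "example.internal", aurora_writer_endpoint, aurora_reader_endpoint
#       ),
#   )
```

A short TTL is used here so that clients re-resolve the records soon after they change. The right value depends on how quickly your application must pick up a failover.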

Now update the application configuration to use the preceding CNAME records as the database host name in the connection string. This ensures that the application code doesn’t use the Aurora reader and writer endpoints directly, which reduces the application changes needed when these endpoints change during a failover. If your application is configured to perform host name identity verification, this option may not work. For more information, see prerequisites.

Update the configuration with the following code:
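As a minimal sketch, assuming a Python application, an Aurora MySQL cluster on the default port 3306, and hypothetical record names `writer.example.internal` and `reader.example.internal`, the configuration might look like the following. The class name, database name, and URL scheme are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatabaseConfig:
    """Connection settings that reference the Route 53 CNAME records,
    not the Aurora cluster endpoints themselves."""

    writer_host: str = "writer.example.internal"  # hypothetical CNAME record
    reader_host: str = "reader.example.internal"  # hypothetical CNAME record
    port: int = 3306  # assumption: Aurora MySQL default port
    database: str = "appdb"  # hypothetical database name

    def host_for(self, read_only: bool) -> str:
        """Pick the reader record for reads and the writer record for writes."""
        return self.reader_host if read_only else self.writer_host

    def url(self, read_only: bool) -> str:
        """Build a connection URL for the requested kind of operation."""
        return f"mysql://{self.host_for(read_only)}:{self.port}/{self.database}"
```

Because only the CNAME names appear here, a failover changes what the names resolve to without touching this configuration.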

Now script the failover process in the Lambda function. This function promotes the secondary Region’s cluster so that it has write capabilities, makes sure the database is available, and updates the Route 53 CNAME records to point to the newly created endpoints in the secondary Region.

This function uses Lambda environment variables for GLOBAL_CLUSTER_NAME, SECONDARY_CLUSTER_ARN, and HOSTED_ZONE_ID. These values are available on the Lambda console.

Create your Lambda function with the following code. For instructions on creating a function, see the AWS Lambda Developer Guide.
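The following is a minimal sketch of such a function in Python with boto3, not a definitive implementation. It reads the three environment variables named earlier. The `WRITER_RECORD` and `READER_RECORD` variables, the helper names, the TTL, and the polling interval are assumptions added for illustration. The sketch also assumes the cluster status leaves `available` promptly after the removal call, so a production version may want an initial delay or an extra check that the cluster has left the global database.

```python
import os
import time


def region_from_arn(arn):
    """Return the Region field of an ARN (arn:partition:service:region:account:...)."""
    return arn.split(":")[3]


def promote_and_repoint(rds, route53, global_cluster, cluster_arn, zone_id,
                        writer_record, reader_record,
                        poll_seconds=15, max_polls=40):
    """Detach the secondary cluster, wait for it, then repoint both CNAME records."""
    # Removing the cluster from the global database makes it a standalone,
    # writable cluster.
    rds.remove_from_global_cluster(
        GlobalClusterIdentifier=global_cluster,
        DbClusterIdentifier=cluster_arn,
    )

    # Poll until the cluster reports "available" or the attempts run out.
    for _ in range(max_polls):
        cluster = rds.describe_db_clusters(
            DBClusterIdentifier=cluster_arn
        )["DBClusters"][0]
        if cluster["Status"] == "available":
            break
        time.sleep(poll_seconds)
    else:
        raise TimeoutError("cluster did not become available in time")

    # Point the writer and reader records at the promoted cluster's endpoints.
    targets = {
        writer_record: cluster["Endpoint"],
        reader_record: cluster["ReaderEndpoint"],
    }
    changes = [
        {
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": name,
                "Type": "CNAME",
                "TTL": 60,
                "ResourceRecords": [{"Value": value}],
            },
        }
        for name, value in targets.items()
    ]
    route53.change_resource_record_sets(
        HostedZoneId=zone_id, ChangeBatch={"Changes": changes}
    )
    return targets


def lambda_handler(event, context):
    # Imported here so the helper above can be tested without AWS access.
    import boto3

    cluster_arn = os.environ["SECONDARY_CLUSTER_ARN"]
    # The RDS client must target the secondary cluster's Region.
    rds = boto3.client("rds", region_name=region_from_arn(cluster_arn))
    route53 = boto3.client("route53")
    return promote_and_repoint(
        rds,
        route53,
        os.environ["GLOBAL_CLUSTER_NAME"],
        cluster_arn,
        os.environ["HOSTED_ZONE_ID"],
        os.environ.get("WRITER_RECORD", "writer.example.internal"),  # hypothetical
        os.environ.get("READER_RECORD", "reader.example.internal"),  # hypothetical
    )
```

With these polling defaults the function can run for up to 10 minutes, so the function timeout would need to be raised from the Lambda default, and the execution role needs permissions for the RDS and Route 53 calls used.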

Invoke the function from the Lambda console or configure it to trigger based on an event.

You can also invoke the Lambda function from Amazon API Gateway, which provides an “easy button” approach for failover.
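The function can also be started from a script. The sketch below uses the boto3 Lambda client's `invoke` call with an asynchronous invocation, so the caller does not wait for the failover to finish. The function name `aurora-failover` and the helper name are hypothetical.

```python
import json


def trigger_failover(lambda_client, function_name, payload=None):
    """Start the failover function asynchronously.

    Returns True when Lambda accepted the request (HTTP status 202).
    """
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="Event",  # asynchronous: do not wait for the result
        Payload=json.dumps(payload or {}).encode("utf-8"),
    )
    return response["StatusCode"] == 202


# Example call (requires boto3 and AWS credentials; the name is a placeholder):
#   import boto3
#   trigger_failover(boto3.client("lambda"), "aurora-failover")
```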

After the Lambda function runs, the secondary Aurora cluster gets promoted with write capability and the Route 53 CNAME records get updated with the latest database endpoints.

The following screenshot shows what the new endpoints look like in the secondary Region.

The Route 53 CNAME records now have the updated endpoint values.

Clean up

To delete the resources created to implement this solution, complete the following steps:

Delete the CNAME records and private hosted zone you created.

Change the application configuration to its original state.

Delete the Lambda function you created.
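The Route 53 and Lambda parts of the cleanup can be scripted as well. The following is a minimal sketch with hypothetical helper names. It assumes the hosted zone holds few enough records to be returned in a single page, which is the case for a zone that contains only the two CNAME records. Route 53 requires a hosted zone to contain only its default NS and SOA records before it can be deleted, so the CNAME records are removed first.

```python
def deletable_changes(record_sets):
    """Build DELETE changes for every record except the zone's NS and SOA records."""
    return [
        {"Action": "DELETE", "ResourceRecordSet": record_set}
        for record_set in record_sets
        if record_set["Type"] not in ("NS", "SOA")
    ]


def clean_up(route53, lambda_client, zone_id, function_name):
    """Delete the CNAME records, the private hosted zone, and the Lambda function."""
    # Assumption: a single page of results covers the whole zone.
    record_sets = route53.list_resource_record_sets(
        HostedZoneId=zone_id
    )["ResourceRecordSets"]

    changes = deletable_changes(record_sets)
    if changes:
        route53.change_resource_record_sets(
            HostedZoneId=zone_id, ChangeBatch={"Changes": changes}
        )

    route53.delete_hosted_zone(Id=zone_id)
    lambda_client.delete_function(FunctionName=function_name)
```

Restoring the application configuration to its original state remains a separate step that depends on how your application is deployed.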

Summary

This post showed how to minimize the challenges of implementing a multi-Region application. Route 53 CNAME records take care of pointing to the appropriate database endpoints. They give the application a uniform, common endpoint to use, so the application configuration doesn’t need to change at all. We use a Lambda function to script the manual tasks required for Aurora global database failover. The function can be triggered through an event or with the click of a button.

This solution removes the heavy lifting needed to perform and monitor the failover tasks, thereby reducing risk and human error. You can expand the solution to cover increasingly complex manual tasks.

For more information about Aurora global databases, see Using Amazon Aurora global databases.

About the authors

Jigna Gandhi is a Solutions Architect at Amazon Web Services, based in the Greater New York City area. She works with AWS DNB customers, helping them apply AWS best practices and guiding them in implementing advanced workloads on AWS.

Vivek Kumar is a Solutions Architect at AWS based out of New York. He works with customers providing technical assistance and architectural guidance on various AWS services. He brings several years of experience in software engineering and architecture roles for various large-scale enterprises.
