Sam (sysadmin) and Erin (developer) work at “Mindful Containers”, an imaginary company that sells sleeping pods for mindful breaks. One day, Sam calls Erin because her application crashed during deployment, even though it worked just fine on her workstation. They check the logs, debug the failure, and eventually find version inconsistencies: the right dependencies were missing in production. Together, they perform a risky rollback. Later, they install the missing dependencies and hope nothing else breaks. Erin and Sam decide to fix the root problem once and for all using containers.

Why containers?

Containers are often compared with virtual machines (VMs). You might already be familiar with VMs: a guest operating system such as Linux or Windows runs on top of a host operating system with virtualized access to the underlying hardware. Like virtual machines, containers enable you to package your application together with libraries and other dependencies, providing isolated environments for running your software services. As you’ll see, however, the similarities end here as containers offer a far more lightweight unit for developers and IT Ops teams to work with, bringing a myriad of benefits.

Instead of virtualizing the hardware stack as with the virtual machines approach, containers virtualize at the operating system level, with multiple containers running atop the OS kernel directly. This means that containers are far more lightweight: They share the OS kernel, start much faster, and use a fraction of the memory compared to booting an entire OS.

Containers help improve portability, shareability, deployment speed, reusability, and more. More importantly for Erin and Sam, containers make it possible to solve the ‘it worked on my machine’ problem.

Why Kubernetes?

Now, it turns out that Sam is responsible for more developers than just Erin.

He struggles with rolling out software:

- Will it work on all the machines?
- If it doesn’t work, then what?
- What happens if traffic spikes? (Sam decides to over-provision just in case…)

With lots of developers now containerizing their apps, Sam needs a better way to orchestrate all the containers that developers ship. The solution: Kubernetes!

What is so cool about Kubernetes?

The Mindful Containers team had a bunch of servers and used to decide manually what ran on each one, based on which applications they knew would conflict on the same machine. If they were lucky, they had some sort of scripted system for rolling out software, but it usually involved SSHing into each machine. Now with containers, and the isolation they provide, they can trust that in most cases any two applications can fairly share the resources of the same machine.

With Kubernetes, the team can now introduce a control plane that decides for them where to run applications. Even better, it doesn’t just place them statically; it continually monitors the state of each machine and makes adjustments so that what is running matches what they’ve actually specified. A Kubernetes cluster consists of a control plane (the master) and a number of nodes. A piece of software called the kubelet is installed on each node and reports the node’s state back to the master.

Here is how it works:

- The master controls the cluster
- The worker nodes run pods
- A pod holds a set of containers
- Pods are bin-packed as efficiently as configuration and hardware allow
- Controllers provide safeguards so that pods run according to specification (reconciliation loops)
- All components can be deployed in high-availability mode and spread across zones or data centers
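To make the bin-packing idea concrete, here is a minimal sketch using the classic first-fit-decreasing heuristic. It is an illustration only, not the Kubernetes scheduler's actual algorithm; the function name `bin_pack`, the pod names, and the assumption that every node has the same CPU capacity (in millicores) are all hypothetical.

```python
def bin_pack(pod_cpu: dict, node_capacity: int) -> list:
    """Place pods on equally sized nodes using first-fit decreasing.

    pod_cpu maps a (hypothetical) pod name to its CPU request in millicores.
    Returns one list of pod names per node that was needed.
    """
    nodes = []  # each entry: {"free": remaining millicores, "pods": [names]}
    # Largest requests first, so big pods are placed while space is plentiful.
    for name, cpu in sorted(pod_cpu.items(), key=lambda kv: kv[1], reverse=True):
        if cpu > node_capacity:
            raise ValueError(f"{name} does not fit on any node")
        for node in nodes:
            if node["free"] >= cpu:
                node["free"] -= cpu
                node["pods"].append(name)
                break
        else:
            # No existing node had room, so open a new one.
            nodes.append({"free": node_capacity - cpu, "pods": [name]})
    return [node["pods"] for node in nodes]


placement = bin_pack({"a": 2000, "b": 1500, "c": 1000, "d": 500}, node_capacity=3000)
print(placement)
```

With these made-up requests, four pods fit on two nodes instead of four. A real scheduler also weighs memory, affinity rules, and other constraints.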

Kubernetes orchestrates containers across a fleet of machines, with support for:

- Automated deployment and replication of containers
- Online scale-in and scale-out of container clusters
- Load balancing over groups of containers
- Rolling upgrades of application containers
- Resiliency, with automated rescheduling of failed containers (i.e., self-healing of container instances)
- Controlled exposure of network ports to systems outside of the cluster
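As a rough illustration of what a rolling upgrade means, the sketch below replaces pods a batch at a time, so that some pods keep serving while others are swapped. It is a deliberate simplification: the function `rolling_update` and its `batch_size` parameter are hypothetical, and real Kubernetes Deployments control the pace with their own `maxSurge` and `maxUnavailable` settings.

```python
def rolling_update(pods: list, new_version: str, batch_size: int = 1) -> list:
    """Return a snapshot of pod versions after each batch is replaced."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    current = list(pods)  # work on a copy, leave the caller's list alone
    snapshots = []
    for start in range(0, len(current), batch_size):
        # Only this batch is swapped; the rest keep serving traffic.
        for i in range(start, min(start + batch_size, len(current))):
            current[i] = new_version
        snapshots.append(list(current))
    return snapshots


for step in rolling_update(["v1", "v1", "v1"], "v2"):
    print(step)
```

Each printed line is one step of the rollout, with one more pod on the new version than the step before.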

A few more things to know about Kubernetes:

- Instead of flying a plane, you program an autopilot: Declare a desired state, and Kubernetes will make it true – and continue to keep it true.
- It was inspired by Google’s tools for running data centers efficiently.
- It has seen unprecedented community activity and is today one of the largest projects on GitHub. Google remains the top contributor.
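The "declare a desired state" idea can be sketched as a tiny reconciliation pass. This is a toy model under stated assumptions, not how Kubernetes controllers are implemented: the `Cluster` class, the `reconcile` function, and the app names are all hypothetical, and the "cluster" is just an in-memory dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy in-memory cluster: maps an app name to its number of running pods."""
    running: dict = field(default_factory=dict)


def reconcile(desired: dict, cluster: Cluster) -> list:
    """Compare desired replica counts with observed ones and correct any drift.

    Returns a description of the actions taken, purely for illustration.
    """
    actions = []
    for app, want in desired.items():
        have = cluster.running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} pod(s) of {app}")
        elif have > want:
            actions.append(f"stop {have - want} pod(s) of {app}")
        cluster.running[app] = want
    # Anything still running that is no longer declared gets removed.
    for app in list(cluster.running):
        if app not in desired:
            actions.append(f"stop {cluster.running[app]} pod(s) of {app}")
            del cluster.running[app]
    return actions


cluster = Cluster(running={"web": 1, "old": 2})
print(reconcile({"web": 3}, cluster))  # drift is corrected
print(reconcile({"web": 3}, cluster))  # nothing left to do
```

Running the same pass again produces no actions, which is the point: the loop keeps checking, and only acts when reality drifts from the declaration.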

The magic of Kubernetes starts happening when we don’t require a sysadmin to make the decisions. Instead, we enable a build and deployment pipeline. When a build succeeds, passes all tests and is signed off, it can automatically be deployed to the cluster gradually, blue/green, or immediately.

Kubernetes the hard way

By far, the single biggest obstacle to using Kubernetes (k8s) is learning how to install and manage your own cluster. Check out Kubernetes the Hard Way for a step-by-step guide to installing a k8s cluster. You have to think about tasks like:

- Choosing a cloud provider or bare metal
- Provisioning machines
- Picking an OS and container runtime
- Configuring networking (e.g. IP ranges for pods, SDNs, LBs)
- Setting up security (e.g. generating certs and configuring encryption)
- Starting up cluster services such as DNS, logging, and monitoring
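To give a feel for just one of these tasks, here is a small sketch of planning IP ranges with Python's standard `ipaddress` module. The function `plan_ranges`, the range names, the CIDR values, and the assumption that each node gets a fixed-size slice of the pod range are all hypothetical, chosen only to illustrate the kind of arithmetic involved.

```python
import ipaddress
from itertools import combinations


def plan_ranges(ranges: dict, pod_range: str = "pods", per_node_prefix: int = 24) -> int:
    """Check that no two ranges overlap, then return how many nodes the
    pod range can serve if each node gets a /per_node_prefix slice of it."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    for (a, net_a), (b, net_b) in combinations(nets.items(), 2):
        if net_a.overlaps(net_b):
            raise ValueError(f"{a} ({net_a}) overlaps {b} ({net_b})")
    pods = nets[pod_range]
    if per_node_prefix < pods.prefixlen:
        raise ValueError("per-node slice is larger than the whole pod range")
    # Each extra prefix bit doubles the number of per-node slices.
    return 2 ** (per_node_prefix - pods.prefixlen)


max_nodes = plan_ranges({
    "nodes": "10.0.0.0/20",     # hypothetical example values
    "pods": "10.4.0.0/14",
    "services": "10.8.0.0/20",
})
print(max_nodes)
```

Getting a range too small, or overlapping two of them, is the sort of mistake that is painful to fix after a cluster is already running.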

Once you have all these pieces together, you can finally start to use k8s and deploy your first application. And you’re feeling great and happy and k8s is awesome! But then, you have to roll out an update…🤦

Wouldn’t it be great if Mindful Containers could start clusters with a single click, view all their clusters and workloads in a single pane of glass, and have Google continually manage their cluster to scale it and keep it healthy?

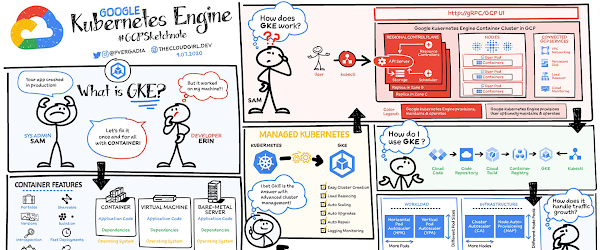

What is GKE?

Google Kubernetes Engine (GKE) is a secured and fully managed Kubernetes service. It provides an easy-to-use environment for deploying, managing, and scaling your containerized applications using Google infrastructure.

Mindful Containers decided to use GKE to enable development self-service by delegating release power to developers and software.

Why GKE?

- Production-ready, with the Autopilot mode of operation for a hands-off experience
- Best-in-class developer tooling with consistent support for first- and third-party tools
- Container-native networking with a unique BeyondProd security approach
- Most scalable Kubernetes service: only GKE can run 15,000-node clusters, outscaling the competition by up to 15x
- Industry-first fully managed Kubernetes service that implements the full Kubernetes API, 4-way autoscaling, release channels, and multi-cluster support

How does GKE work?

The GKE control plane is fully operated by the Google SRE (Site Reliability Engineering) team with managed availability, security patching, and upgrades. The Google SRE team not only has deep operational knowledge of k8s, but is also uniquely positioned to get early insights on any potential issues by managing a fleet of tens of thousands of clusters. That’s something that is simply not possible to achieve with self-managed k8s. GKE also provides comprehensive management for nodes including auto-provisioning, security patching, opt-in auto upgrade, repair, and scaling. On top of that, GKE provides end-to-end container security including private and hybrid networking.

How does GKE make scaling easy?

As the demand for Mindful Containers grows, they now need to scale their services. Manually scaling a Kubernetes cluster for availability and reliability can be complex and time consuming. GKE automatically scales the number of pods and nodes based on the resource consumption of services.

- Vertical Pod Autoscaler (VPA) watches resource utilization of your deployments and adjusts requested CPU and RAM to stabilize the workloads.
- Node Auto Provisioning optimizes cluster resources with an enhanced version of Cluster Autoscaling.
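For a sense of how scaling the number of pods on resource consumption can work, the sketch below follows the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler, which the text above does not name: desired replicas = ceil(current replicas × current metric / target metric). The function name, the replica bounds, and the sample numbers are hypothetical, and the real autoscaler layers on further behavior (such as stabilization windows) that is left out here.

```python
import math


def desired_replicas(current_replicas: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of a utilization-based replica calculation."""
    ratio = current_util / target_util
    # Close enough to the target: leave the replica count alone to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# 4 pods at 90% CPU with a 60% target.
print(desired_replicas(4, current_util=90, target_util=60))
```

The same function scales back in when utilization drops, and the minimum and maximum keep a traffic spike or a quiet night from pushing the replica count to an extreme.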

In addition to the fully managed control plane that GKE offers, using the Autopilot mode of operation automatically applies industry best practices and can eliminate all node management operations, maximizing your cluster efficiency and helping to provide a stronger security posture.

Conclusion

Mindful Containers went from VMs → containers → self-managed Kubernetes → GKE, but you don’t have to follow the same difficult route. Start with GKE and save yourself the time and hassle of managing containers and Kubernetes clusters yourself. Take advantage of Google’s leadership in Kubernetes and its latest innovations in developer productivity, resource efficiency, and automated operations to both accelerate your time to market and lower your infrastructure costs, so you can more easily meet your business’s needs for speed, scale, security, and availability. For a more in-depth look into GKE, check out the documentation.

Interested in getting hands-on experience with Kubernetes? Join the Kubernetes track of our skills challenge to receive free 30 days access to labs and opportunities to earn Google Cloud skill badges.

For more #GCPSketchnote, follow the GitHub repo. For similar cloud content follow me on Twitter @pvergadia and keep an eye out on thecloudgirl.dev
